Final Project Milestone #2: Liang

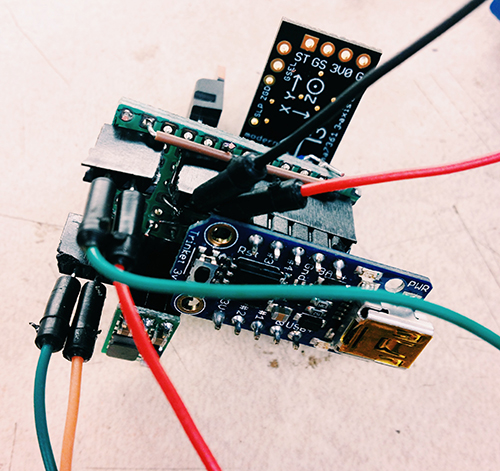

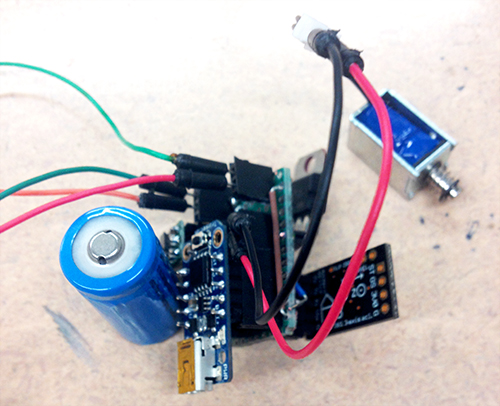

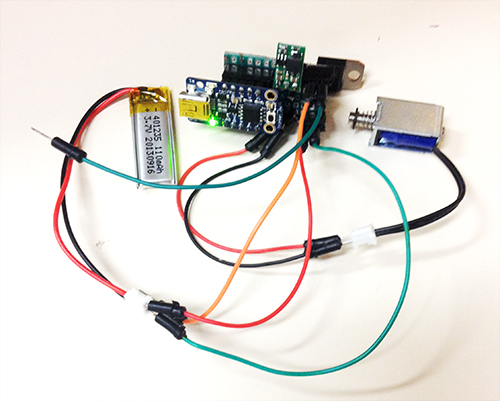

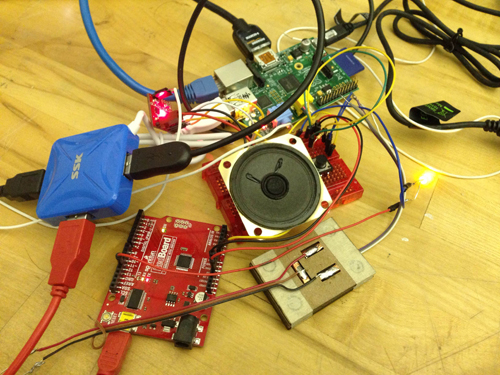

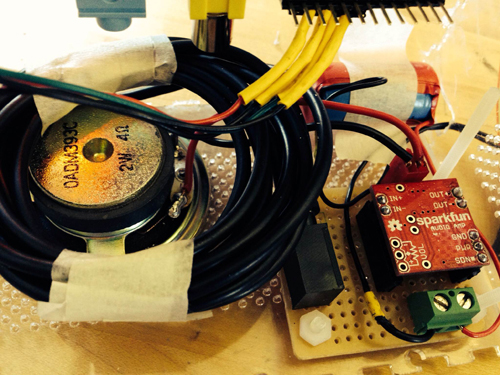

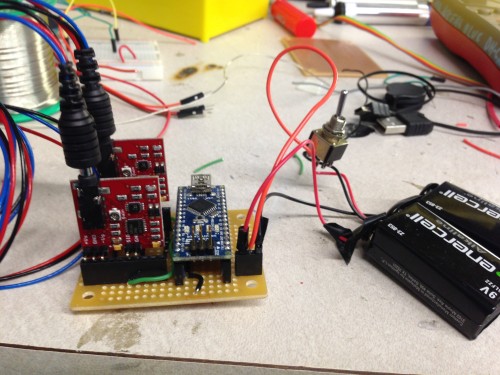

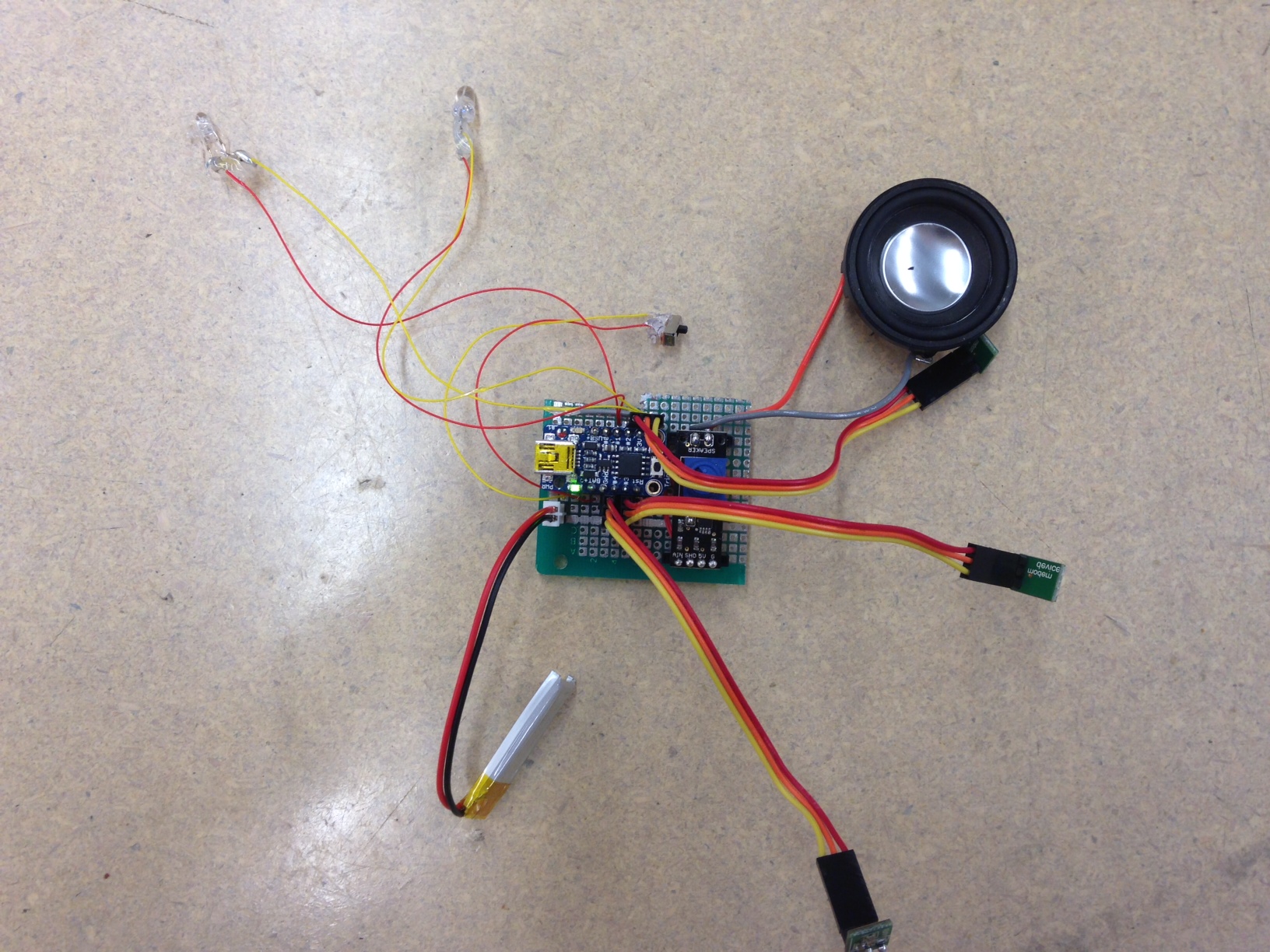

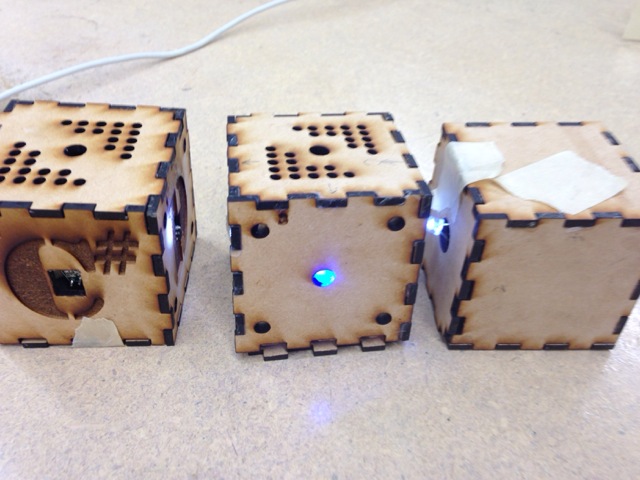

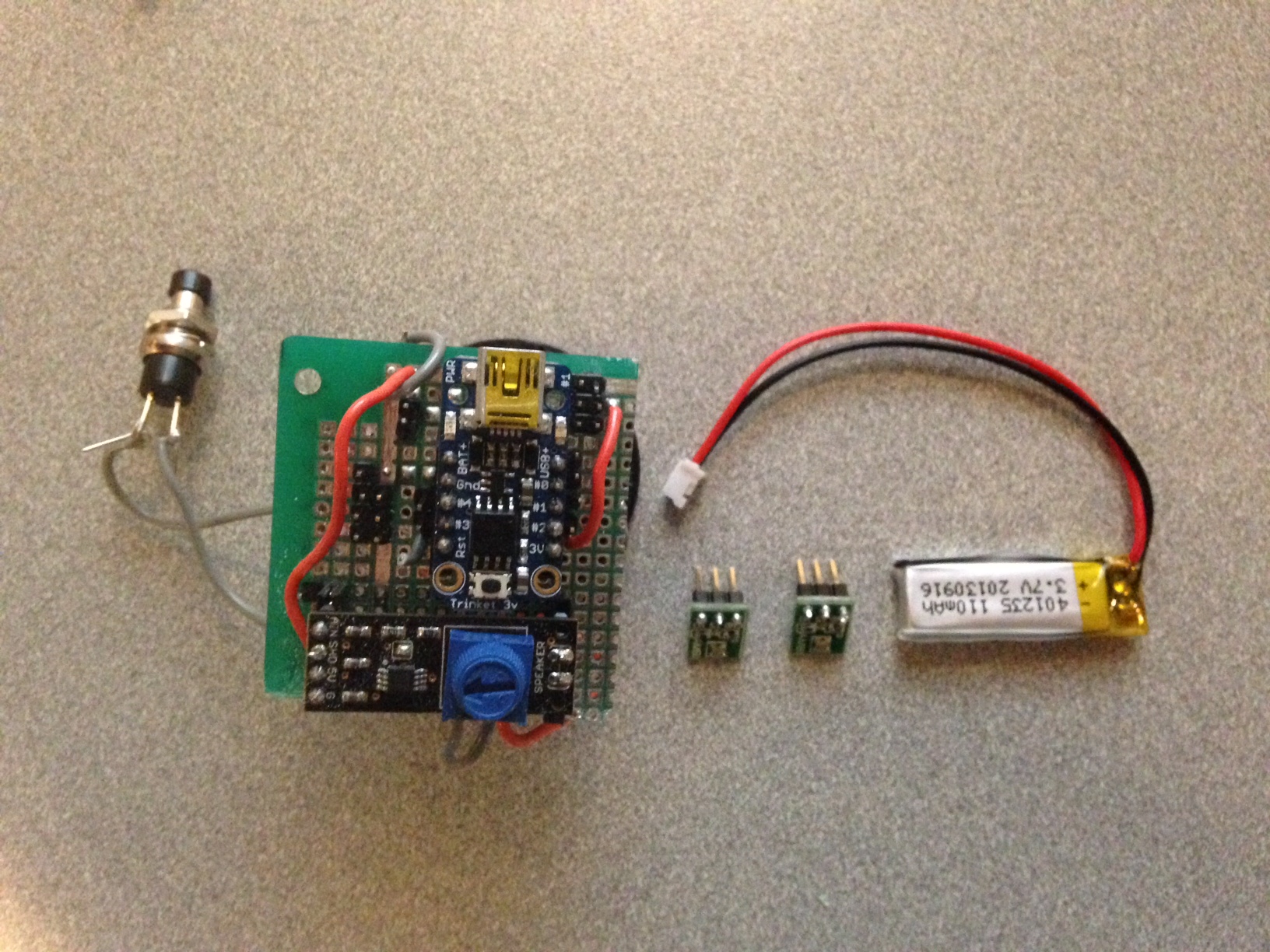

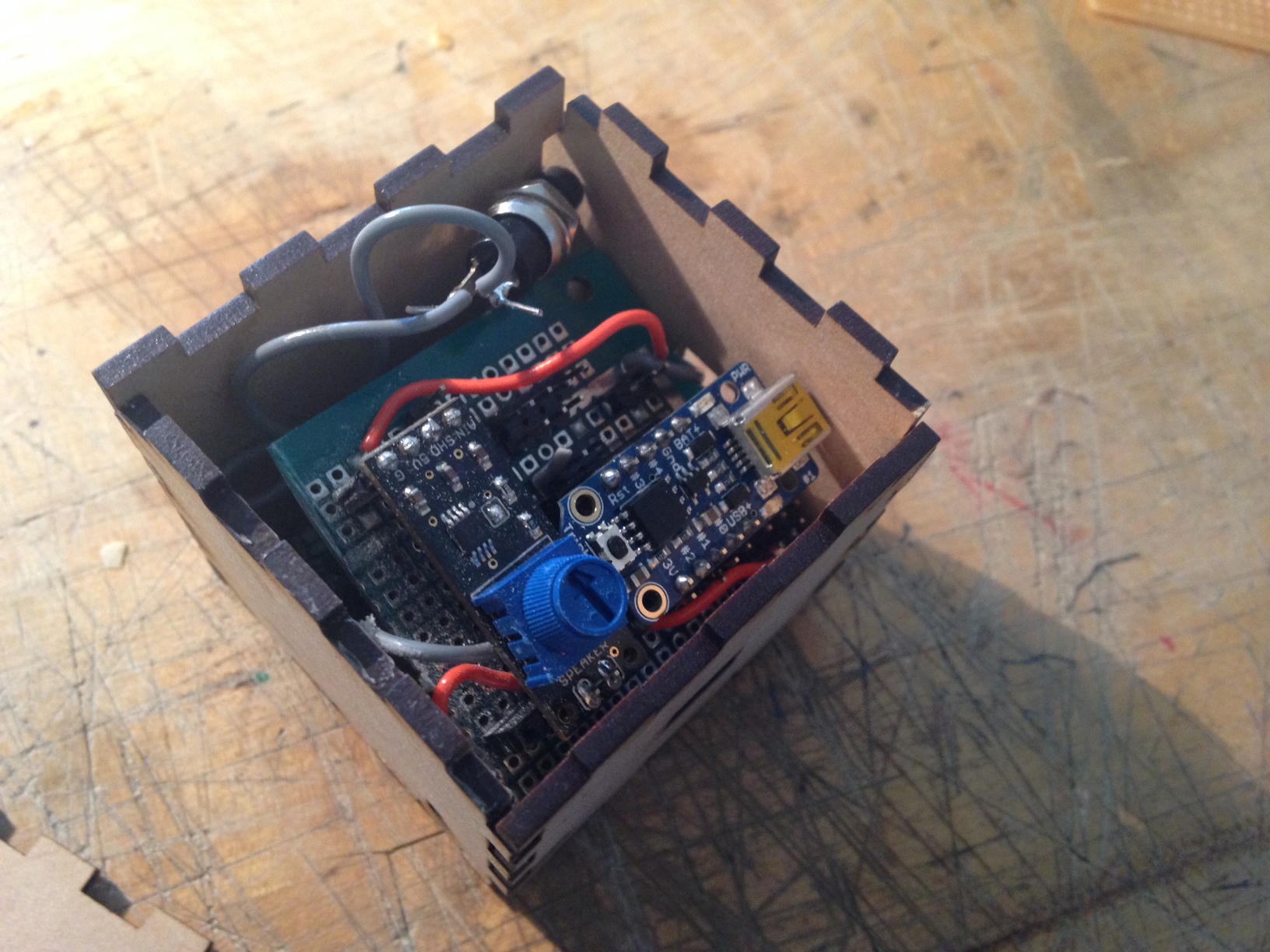

Following the design critique from three guests, I rethought the scenarios and the target user group. Instead of only making tempos, I believe Tapo could produce more for users, for example rhythms. With different cups and different resonances, it could generate a variety of rhythms. Imagine multiple users playing together: it would be a playful and generic environment in which participants make original rhythms with very original sounds and tempos. As for the target users, I think they depend on the situations Tapo fits into. For educational goals, it could be used in a classroom to teach students the basic process of making rhythms and the connection between a sound and the physical properties of the cup. Set up in a public space, it encourages people to play and enjoy the process of making rhythms. So I believe it has great potential in people’s everyday activities.

Based on the circuit I built, I set up a prototype (actually two prototypes; the first one failed) to test whether it runs correctly. The images below show how the prototype looks. Besides testing all the components on the board, I also tested batteries. On the board I set up two separate battery interfaces, which supply power to the Trinket board and the extra solenoid individually. However, testing showed that just one battery worked well with all the parts. Therefore, I finally selected one small LiPo as the only power supply.

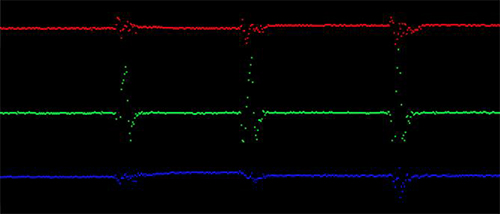

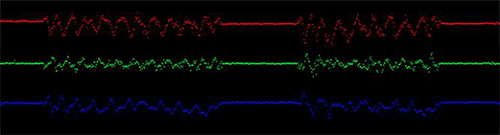

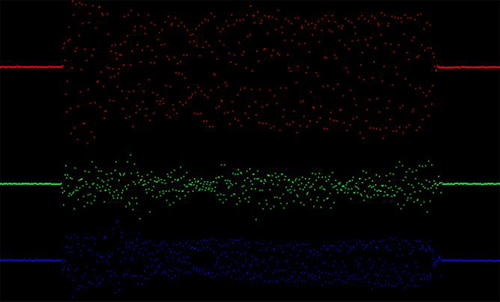

Another piece of work is gesture detection and recognition. At the beginning I used a fairly complicated process to recognise the user’s gesture. The entire solution is shown in the diagram below. The basic idea: the X-axis, Y-axis and Z-axis data from the accelerometer are sent to the controller board. Each time, a window is set for the data (its size has to be 2^n; I use 128). When the window is full, the data are used to calculate the mean of each axis, the entropy of each axis, the energy of each axis, and the correlation between each pair of axes (for more details about the formulas and principles, please refer to Ling’s paper). The result is stored as an ARFF file, which is then imported into Weka, where the J48 algorithm is used to train a decision tree.

There are two parts to gesture recognition: training the recognition model and testing it. With different users’ gesture data and the process above, I can build a decision tree. More testers’ data makes it more robust and accurate. When recognising a gesture, the system follows the same process but does not produce an ARFF file; instead, it processes the data directly and sends the result to the trained decision tree, whose classification gives the category of the gesture.

I finished a Processing application to visualise the data received from the accelerometer, and distinguished four gestures: pick-up, shake, stir counter clockwise, and stir clockwise. The pick-up gesture triggers the entire system. The shake gesture can be used to generate random predefined rhythms. Stirring counter clockwise slows the rhythms down, and stirring clockwise speeds them up. The plots below show how the data of each axis vary in different gestures.

Pick-up gesture

Stir counter clockwise gesture

Stir clockwise gesture

Shake gesture
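To make the feature step of the first approach more concrete, here is a minimal Python sketch of computing the twelve values for one 128-sample window. It is not the code running on the board or in Processing. The exact formulas are in the paper mentioned above; this sketch assumes common definitions (energy as the sum of squared FFT magnitudes without the DC term divided by the window length, entropy as the Shannon entropy of the normalised FFT magnitudes, correlation as the Pearson coefficient). All function names are hypothetical. The small radix-2 FFT also shows why the window size has to be 2^n.

```python
import cmath
import math

WINDOW = 128  # window size from the text; a power of two is required by this FFT


def fft(x):
    """Recursive radix-2 FFT. len(x) must be a power of two."""
    n = len(x)
    if n == 1:
        return [complex(x[0])]
    even = fft(x[0::2])
    odd = fft(x[1::2])
    out = [0j] * n
    for k in range(n // 2):
        t = cmath.exp(-2j * cmath.pi * k / n) * odd[k]
        out[k] = even[k] + t
        out[k + n // 2] = even[k] - t
    return out


def mean(x):
    return sum(x) / len(x)


def energy(x):
    """Assumed definition: sum of squared FFT magnitudes (DC excluded) / window length."""
    mags = [abs(c) for c in fft(x)[1:]]
    return sum(m * m for m in mags) / len(x)


def entropy(x):
    """Assumed definition: Shannon entropy (bits) of normalised FFT magnitudes, DC excluded."""
    mags = [abs(c) for c in fft(x)[1:]]
    total = sum(mags)
    if total == 0:
        return 0.0  # constant signal: no frequency content
    probs = [m / total for m in mags if m > 0]
    return -sum(p * math.log2(p) for p in probs)


def correlation(a, b):
    """Pearson correlation; returns 0.0 when either axis is constant."""
    ma, mb = mean(a), mean(b)
    cov = sum((p - ma) * (q - mb) for p, q in zip(a, b))
    va = sum((p - ma) ** 2 for p in a)
    vb = sum((q - mb) ** 2 for q in b)
    if va == 0 or vb == 0:
        return 0.0
    return cov / math.sqrt(va * vb)


def extract_features(xs, ys, zs):
    """Return the 12 features (3 x mean, energy, entropy + 3 correlations) for one window."""
    if not (len(xs) == len(ys) == len(zs) == WINDOW):
        raise ValueError("each axis needs exactly WINDOW samples")
    feats = {}
    for name, data in (("x", xs), ("y", ys), ("z", zs)):
        feats["mean_" + name] = mean(data)
        feats["energy_" + name] = energy(data)
        feats["entropy_" + name] = entropy(data)
    feats["corr_xy"] = correlation(xs, ys)
    feats["corr_xz"] = correlation(xs, zs)
    feats["corr_yz"] = correlation(ys, zs)
    return feats
```

Each call yields one feature vector. During training, such a vector plus its gesture label would become one row of the ARFF file, and during recognition the same vector would be passed to the trained decision tree.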

This method has several limitations: a) it needs triggers to start and terminate the gesture detection process; b) the two types of stir gesture are not well distinguished; c) since it collects a large amount of data, it causes delay. In addition, the mapping between the stir gestures and the control of rhythm speed feels odd and unnatural. So I adopted a much simpler and more direct way to detect gestures. Since the user’s interaction with a cup lasts a few seconds at most, I use 40 samples (receiving X, Y, Z data every 50 ms) to detect only two gestures: shake and pick-up. The mapping remains the same. The device will be mounted on the cup, so I monitor the data of the axis that is perpendicular to the ground. If its value reaches the threshold that I set, and the other two axes remain stable, the movement is regarded as a pick-up gesture. To simplify the process, all other conditions are treated as shake gestures. The remaining question is: what kind of interaction and input should exist in this context?
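The simplified rule can be sketched in a few lines of Python. This is only an illustration under assumptions, not the code on the board. It assumes Z is the axis perpendicular to the ground and that readings are in g. "Reaches the threshold" is read as a change relative to the first sample in the window, and "stable" as a small spread between minimum and maximum. The two threshold values and all names are hypothetical.

```python
SAMPLES = 40             # window length from the text: 40 readings, one every 50 ms
PICKUP_THRESHOLD = 0.5   # hypothetical: required change on the vertical axis, in g
STABLE_RANGE = 0.2       # hypothetical: largest spread still counted as "stable", in g


def spread(values):
    """Difference between the largest and smallest reading in the window."""
    return max(values) - min(values)


def classify(xs, ys, zs):
    """Classify one 40-sample window as 'pick-up' or 'shake'.

    Assumes zs holds the axis perpendicular to the ground.
    """
    if not (len(xs) == len(ys) == len(zs) == SAMPLES):
        raise ValueError("each axis needs exactly SAMPLES readings")
    # Vertical axis moved far enough away from where the window started.
    vertical_moved = max(abs(z - zs[0]) for z in zs) >= PICKUP_THRESHOLD
    # The two horizontal axes stayed within a narrow band.
    others_stable = spread(xs) <= STABLE_RANGE and spread(ys) <= STABLE_RANGE
    if vertical_moved and others_stable:
        return "pick-up"
    # As in the text, every other condition is treated as a shake.
    return "shake"
```

For example, a window whose Z readings jump from 1.0 to 1.8 while X and Y stay flat is classified as a pick-up. Because every other case falls through to shake, a cup that is not moving at all is also reported as a shake under this literal reading of the rule.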

Here is a short demo of gesture recognition: