The project is centered on an 8-to-1 analog video multiplexer board. This board is a collaboration between Ray Kampmeier and Ali Momeni, and more information can be found here.

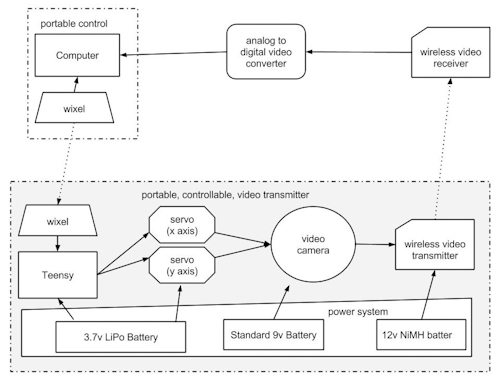

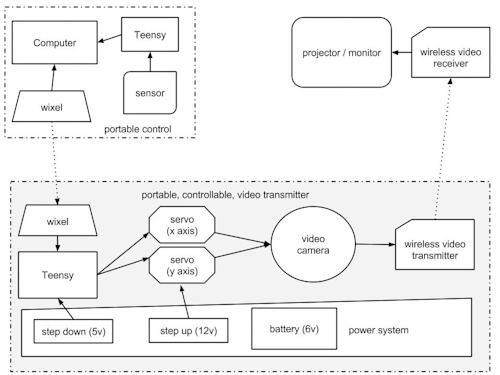

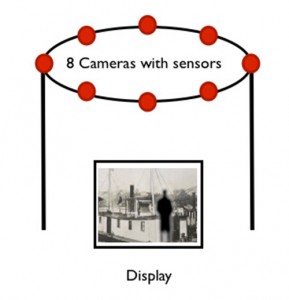

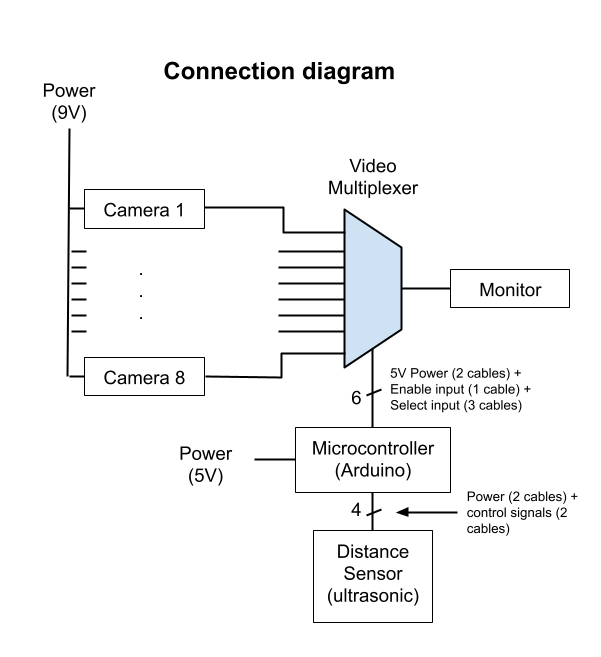

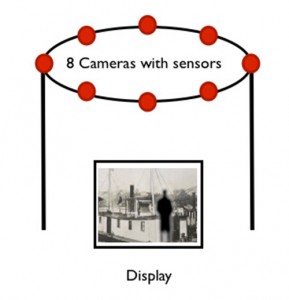

In the present setup, eight small analog video cameras (“surveillance” type) are connected as inputs to the board, and the output is connected to a monitor. An Arduino selects which of the eight inputs is routed to the output by mapping the reading of a distance sensor to a value between 1 and 8. Thus, one can cycle through the cameras simply by placing an object at a certain distance from the sensor. The connection diagram can be seen below:

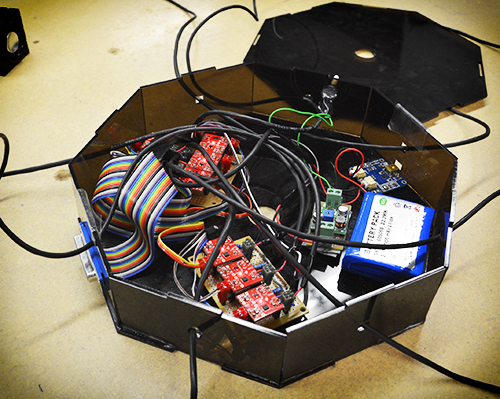

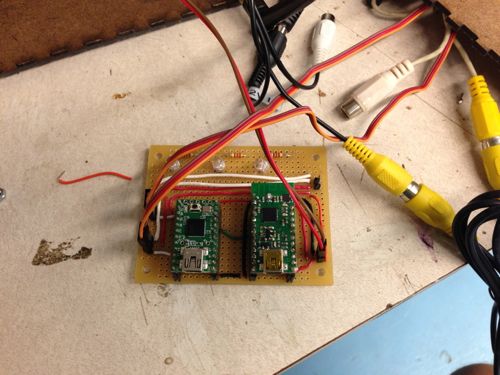
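The distance-to-camera mapping described above can be sketched as follows. This is only an illustration of the arithmetic, written in Python rather than as the Arduino sketch the project actually runs. The function name `distance_to_channel` and the 0 to 1023 reading range (a 10-bit analog input) are assumptions, not details taken from the project.

```python
def distance_to_channel(reading, min_reading=0, max_reading=1023, channels=8):
    """Map a raw sensor reading onto a camera number from 1 to `channels`.

    The 0..1023 default range is an assumption (a 10-bit analog input);
    a real sensor would need its own calibrated limits.
    """
    # Clamp out-of-range readings so the result always stays within 1..channels.
    clamped = max(min_reading, min(max_reading, reading))
    # Split the reading range into `channels` equal-width bands.
    span = max_reading - min_reading + 1
    index = (clamped - min_reading) * channels // span
    return index + 1


if __name__ == "__main__":
    for sample in (0, 300, 512, 1023):
        print(sample, "-> camera", distance_to_channel(sample))
```

Dividing the range into equal bands keeps each camera reachable over the same span of readings. Many distance sensors are nonlinear, so equal bands of readings would not necessarily correspond to equal bands of physical distance.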

A picture of the board with 8 inputs is displayed below (note the RCA connectors):

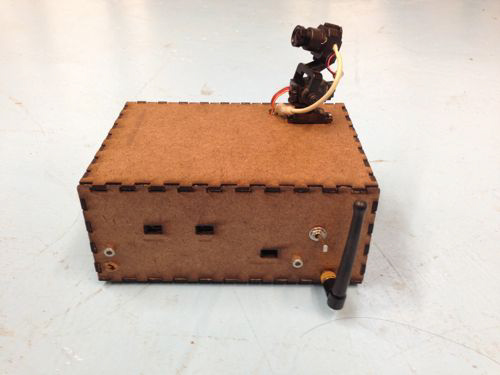

The original setup built for showcasing the project uses a box-shaped cardboard structure to hold a camera in each of its corners, with the cameras pointing at the center of the box (see below).

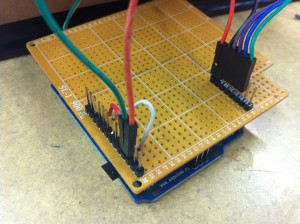

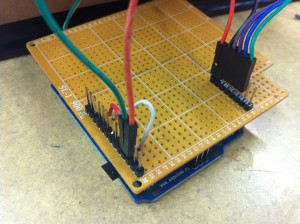

A simple “shield” board was designed to facilitate the interface between the Arduino, the distance sensor and the video mux.

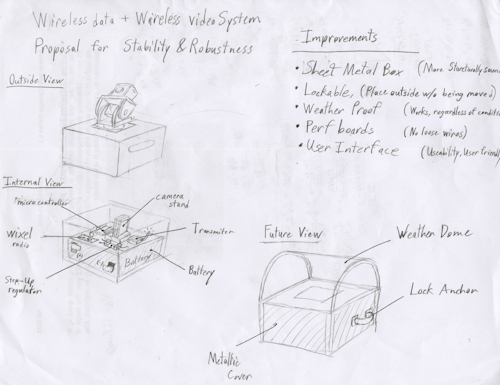

Improvements

The current camera frame is made of cardboard, which is not the most robust of materials. A new frame will be constructed of aluminum bars assembled into a strong cube.

This is the cube being assembled:

Wire attachments for the cameras will be routed away from the box to a board for further processing.

Project Ideas

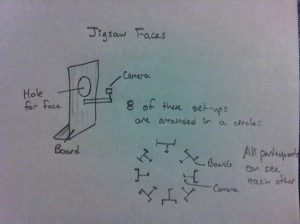

1) Jigsaw faces

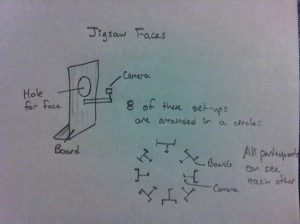

Our faces hold a universal language. We propose combining the faces of eight people to create a universal face. Eight cameras are set up, one per person. Each participant places their face through a hole in a board so that the camera sees only the face. These setups are arranged in a circle so that all the participants can see each other. The eight faces are recorded, and a section of face is selected from each person to combine into one jigsaw face. This jigsaw face is projected so that the participants can see it, which completes the feedback loop. The jigsaw face updates in real time, so as the participants share an experience, their individual expressions combine in the jigsaw face.

The Jigsaw Face will consist of a few wooden boards for people to put their faces through. Each board will have a camera attachment, and all the cameras will be attached to a central processor. The boards will be arranged so that people face each other across a circle. This will enable feedback among the participants.
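One way the face sections might be combined is sketched below. It assumes all eight frames are same-sized grayscale images stored as lists of pixel rows, and that the jigsaw is a simple 2-by-4 grid in which each tile is copied from a different person's frame. The function name `jigsaw_face` and the grid layout are illustrative assumptions; the proposal does not specify how the sections are chosen.

```python
def jigsaw_face(frames, grid_rows=2, grid_cols=4):
    """Build one composite image whose tiles come from different frames.

    `frames` is a list of same-sized grayscale images, each a list of rows
    of pixel values. Tile k of the grid is copied from frames[k].
    The 2x4 grid is an assumed layout for eight cameras.
    """
    if len(frames) != grid_rows * grid_cols:
        raise ValueError("need exactly one frame per tile")
    height = len(frames[0])
    width = len(frames[0][0])
    composite = []
    for y in range(height):
        # Which horizontal band of tiles this pixel row falls into.
        tile_row = y * grid_rows // height
        row = []
        for x in range(width):
            # Which vertical band of tiles this pixel column falls into.
            tile_col = x * grid_cols // width
            source = frames[tile_row * grid_cols + tile_col]
            row.append(source[y][x])
        composite.append(row)
    return composite


if __name__ == "__main__":
    # Eight dummy 4x8 frames, each filled with its own index.
    frames = [[[k] * 8 for _ in range(4)] for k in range(8)]
    for line in jigsaw_face(frames):
        print(line)
```

Running this per video frame would keep the composite updating in real time, as the proposal describes. A more face-aware version would cut along facial features rather than a fixed grid.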

2) The well of time: Time-traveling instrument

I’m still thinking about what eight cameras mean, why we need them, and what original thing we can do with them. I assume that eight cameras give eight different views, and that they could also represent eight distinct points in time. From there, I realized we can present a moment that mixes time periods: the user’s present image from each camera is combined with an old photo from the past, triggered by a motion or distance sensor attached to each camera.

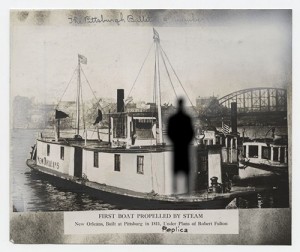

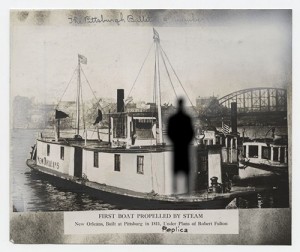

Here is a sample image of my idea. It combines the present moment with the past, when the computer science building was constructed.

And if a user stands at another camera, we could show an image like the one below: the moment the propeller steamboat was first introduced. Basically, this is an artistic way of time traveling. So the limitation of our black-and-white cameras would not be a problem; in this case, it could even be a benefit.

For this idea, of course, we have to build an installation that looks like this.

More precisely, it has this kind of structure.

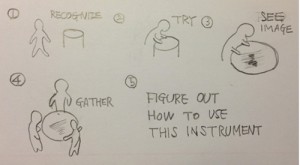

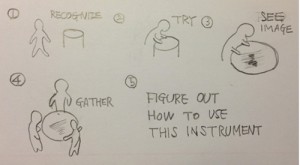

Finally, here is an example interaction scenario with a user, along with a diagram.
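The mixing of present and past in this idea could be sketched as a simple blend between the live camera frame and the old photo, applied only while that camera's sensor is triggered. The function name `blend_with_past`, the `past_weight` parameter, and the choice of a plain weighted average are all assumptions made for illustration. Images are taken to be same-sized grayscale grids with values from 0 to 255, which matches the black-and-white cameras.

```python
def blend_with_past(live_frame, old_photo, triggered, past_weight=0.5):
    """Mix a live grayscale frame with an archival photo of the same size.

    When `triggered` is False the live frame is returned unchanged (as a copy).
    `past_weight` (0.0 to 1.0) is a hypothetical knob for how strongly the
    old photo shows through.
    """
    if not triggered:
        return [row[:] for row in live_frame]
    blended = []
    for live_row, old_row in zip(live_frame, old_photo):
        blended.append([
            # Weighted average of the present and past pixel values.
            round((1 - past_weight) * live + past_weight * old)
            for live, old in zip(live_row, old_row)
        ])
    return blended


if __name__ == "__main__":
    live = [[0, 100], [200, 255]]
    old = [[100, 100], [0, 255]]
    print(blend_with_past(live, old, triggered=True))
```

Tying `past_weight` to the sensor distance instead of a fixed value would let a user fade into the past gradually as they approach a camera.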

3) Object interventions

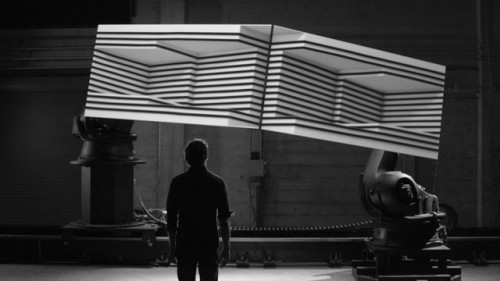

Face and body expressions can tell a lot about people and their feelings towards their surroundings or the objects they interact with. Imagine attaching eight cameras to a handheld object or a large sculpture. With eight cameras as the inputs, the one output will be the video from the camera that is activated by a person interacting with the object in that specific area. The object can range from a small handheld object like a Rubik’s Cube to a large sculpture at a playground. We think it will be very interesting to see the changes in face and body expressions as a person gets more (or less) comfortable with the object. It might be more interesting to hide these cameras so that users are less conscious of their expressions because they do not realize they are being filmed. That will be more difficult to do with a large public sculpture, but we can prototype a handheld object with specific places designed for the cameras so that they cannot be seen.

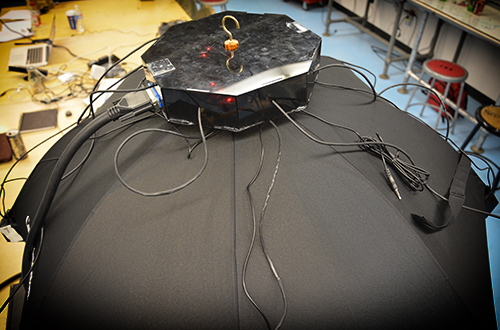

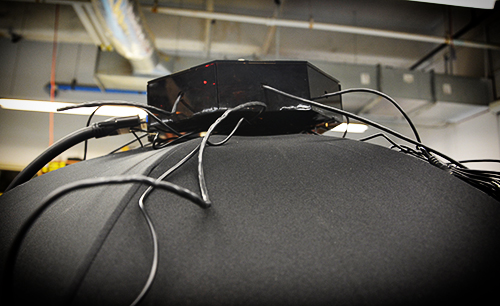

4) Body attachment

When we see the world, we see it from our eyes. Why not view the world from our feet? Perceptions can change with a simple change in vantage point. We propose placing cameras on key body locations (feet, elbows, knees and hands) in order to view the world from a new vantage point as we interact with our surroundings. Cameras would be attached with elastic, and wires would be routed to a backpack for processing.

Group members:

- Mauricio Contreras

- Spencer Barton

- Patra Virasathienpornkul

- Sean Lee

- JaeWook Lee

- David Lu