Final Project: Jake Marsico

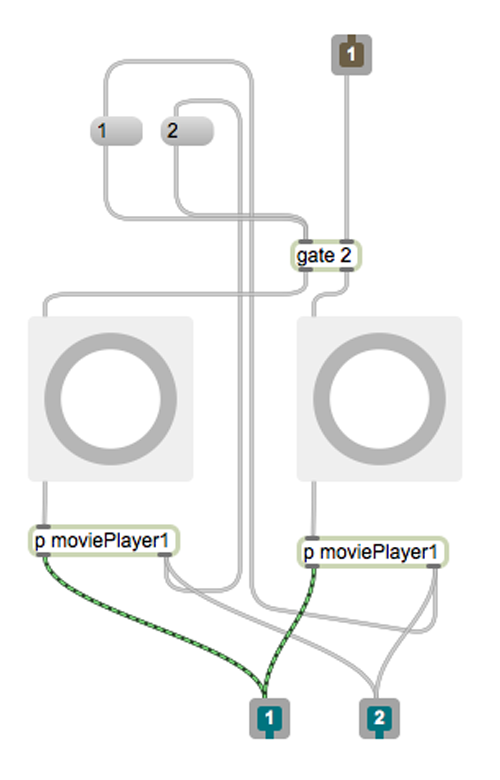

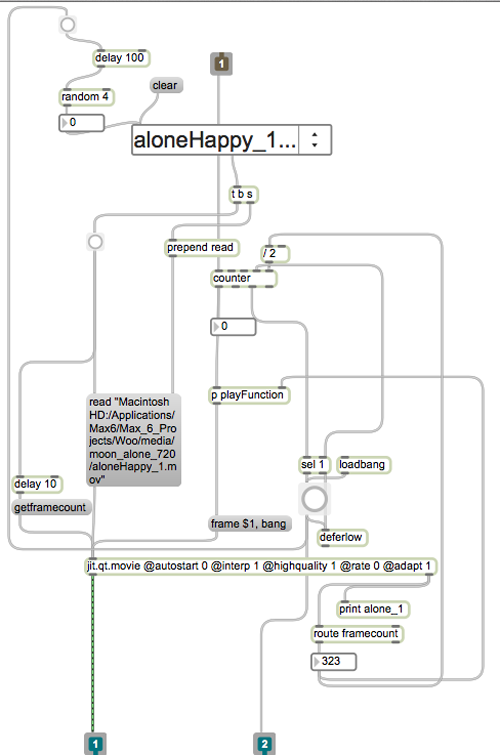

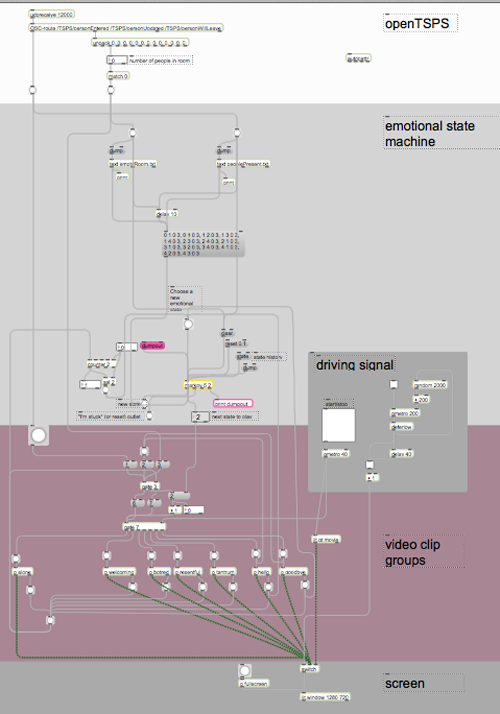

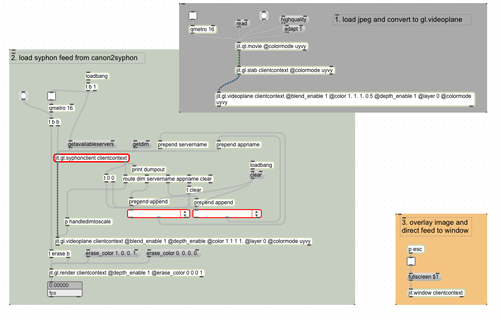

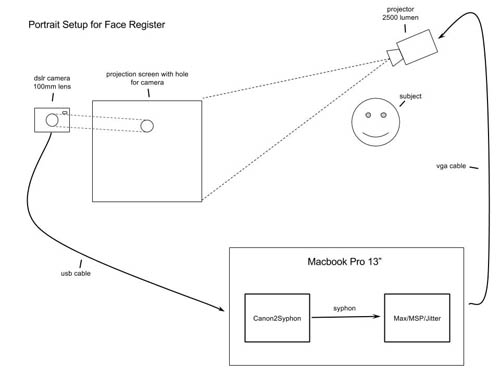

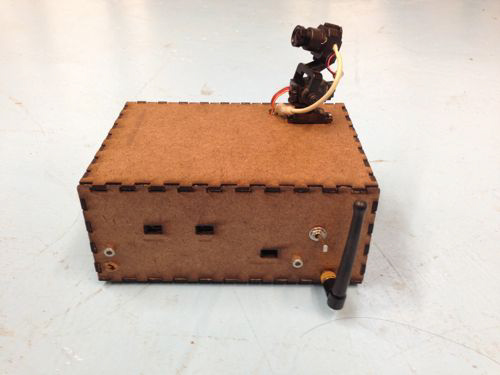

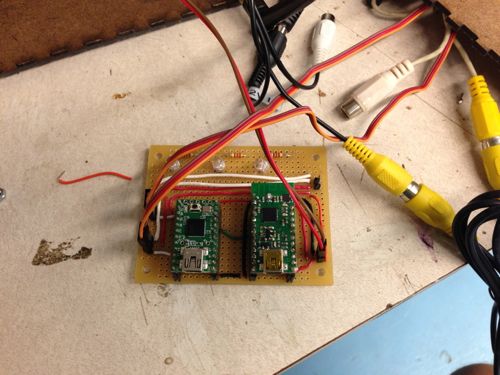

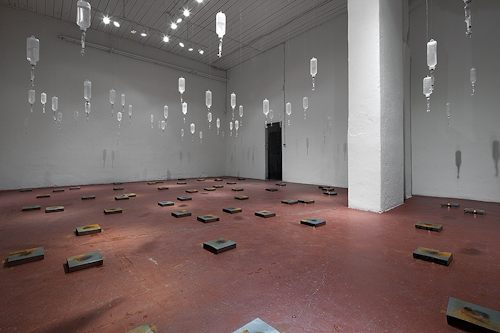

The final deliverable of these two instruments (video portrait register and reactive video sequencer) was a series of two installations on the CMU campus.

Learnings:

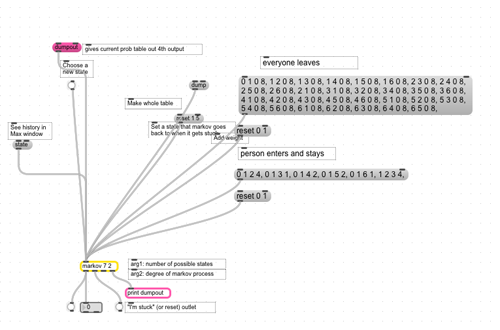

The version shown in both installations had major flaws. The installation was meant to show a range of clips that varied in emotion and flowed seamlessly together. Because I shot the footage before completing the software, it wasn’t clear exactly what I needed from the actor (exact length of each clip, precision of face registration, number of clips for each emotion). After finishing the playback software, it became clear that the footage on hand didn’t work as well as it could have. Most importantly, the majority of the clips lasted for more than 9 seconds. To really nail the fluid transitions, I had to play each clip forward and then in reverse, so that each clip finished in the same position it started. Doing that with a 9-second clip would have meant a total playing time of 18 seconds (9 forward, 9 backward). Clips that long would eliminate any responsiveness to viewers’ movements.

As a result, I chose to only use the first quarter of each clip and play that forward and back. Although this made the program more responsive to viewers, it cut off the majority of the subject’s motions and emotions, rendering the entire piece almost emotionless.
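The forward-then-reverse playback and the timing trade-off described above can be sketched in a few lines. This is a minimal illustration in Python, not the code used in the installation; the function names `ping_pong_frames` and `ping_pong_duration` and the `fraction` parameter are hypothetical and introduced here only for the example.

```python
def ping_pong_frames(n_frames, fraction=1.0):
    """Return frame indices for playing the first `fraction` of a clip
    forward and then in reverse, so playback ends on the frame it began on.
    (Hypothetical helper, for illustration only.)"""
    if n_frames <= 0 or not 0 < fraction <= 1:
        raise ValueError("need n_frames > 0 and 0 < fraction <= 1")
    used = max(1, int(n_frames * fraction))  # how many frames are kept
    forward = list(range(used))
    return forward + forward[::-1]           # forward pass, then reversed


def ping_pong_duration(clip_seconds, fraction=1.0):
    """Total playing time when the kept portion is played forward and back."""
    return 2 * clip_seconds * fraction


frames = ping_pong_frames(8, fraction=0.25)   # keep the first quarter of 8 frames
full = ping_pong_duration(9)                  # whole 9-second clip, both directions
quarter = ping_pong_duration(9, fraction=0.25)
```

Because the sequence always begins and ends on frame 0, any clip can follow any other without a jump in pose, provided the first frames are registered to each other. The cost is that the viewer can only trigger a new clip once the full forward-and-back pass has finished, which is why shortening the kept portion improves responsiveness.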

Another major flaw is that the transitions between clips are very noticeable as a result of imperfect face registration. In hindsight, it would take an actor or actress with extreme dedication and patience to perfectly register their face at the beginning of each clip. It might also require some sort of physical body-registration hardware. A guest critic suggested that a better solution might be to pair the current face-registration tool with a face-tracking and frame re-alignment application in post-production.

If this piece were to be shown outside the classroom, I would want to re-shoot the video with a more explicit “script” and look into building a software face-aligning tool using existing face-tracking tools such as ofxFaceTracker for openFrameworks.
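One way such a software re-alignment step could work is sketched below. It assumes a face tracker has already supplied the pixel positions of the two eyes in a clip's first frame and in a reference frame; how those landmarks are obtained is outside this sketch, and nothing here uses the ofxFaceTracker API. The function names are hypothetical. From the two eye positions it computes the scale, rotation, and translation that map the clip's face onto the reference face, using complex numbers to represent 2D points.

```python
import cmath
import math


def similarity_from_eyes(src_left, src_right, ref_left, ref_right):
    """Find (a, b) such that z -> a*z + b maps the source eye positions
    onto the reference eye positions. Points are (x, y) tuples.
    (Hypothetical helper; landmark positions are assumed to be given.)"""
    p1, p2 = complex(*src_left), complex(*src_right)
    q1, q2 = complex(*ref_left), complex(*ref_right)
    if p1 == p2:
        raise ValueError("source eye points must be distinct")
    a = (q2 - q1) / (p2 - p1)  # encodes scale and rotation
    b = q1 - a * p1            # translation
    return a, b


def apply_transform(a, b, point):
    """Map a single (x, y) point through z -> a*z + b."""
    z = a * complex(*point) + b
    return (z.real, z.imag)


def scale_and_angle(a):
    """Scale factor and rotation in degrees encoded by `a`."""
    return abs(a), math.degrees(cmath.phase(a))


# Example: eyes at (0, 0) and (1, 0) in the clip, (1, 1) and (1, 3) in the reference.
a, b = similarity_from_eyes((0, 0), (1, 0), (1, 1), (1, 3))
scale, angle = scale_and_angle(a)
```

Applying the resulting transform to every frame of a clip would bring its starting pose in line with the reference, at the cost of some cropping at the frame edges.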

Code:

github.com/jmarsico/Woo/tree/master