ideasthesia from JaeWook Lee on Vimeo.

Project Milestone 1 – Jake Marsico

Portrait Jig Prototype

One of the primary challenges of delivering fluid non-linear video is to make each clip transition as seamless as possible. To do this, I’m working on a jig that will allow the actor to ‘register’ the position of his face at the end of each short clip. As you can see in the image below, the jig revolves around a two-way mirror that sits between the camera and the actor. This will allow the actor to mark off eye/nose/mouth registers on the mirror and adjust his face into those registers at the end of every movement.

The camera will be located directly against the mirror on the other side to minimize any glare. As you can see below, the actor will not see the camera. Likewise, video shot from the camera will look as though the mirror is invisible. This can be seen in the test video used in the max/openTSPS demo below.

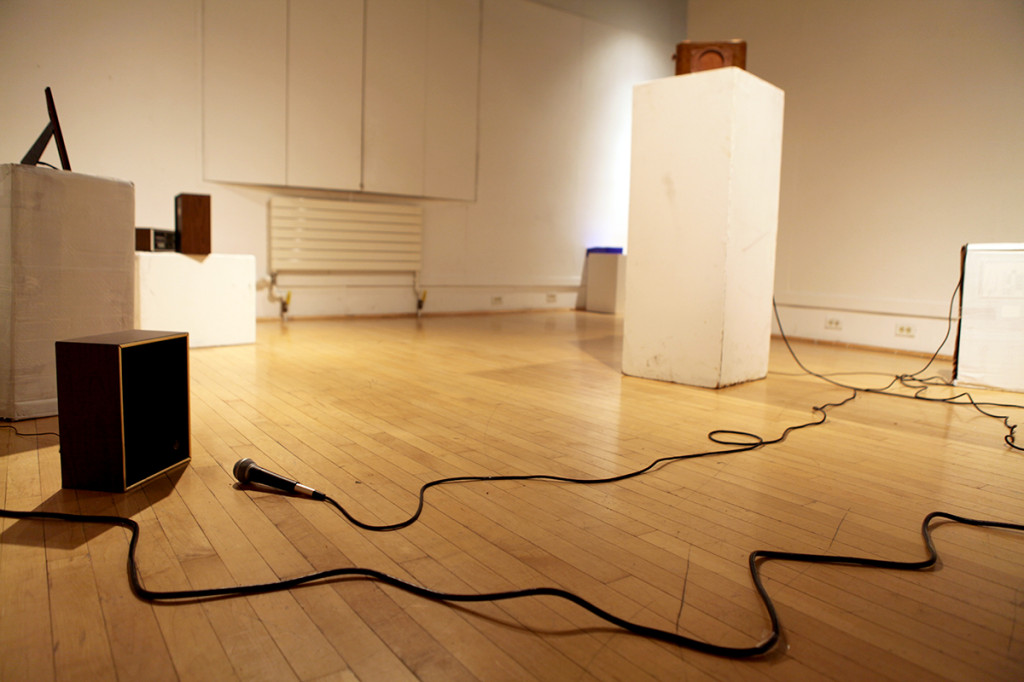

OpenTSPS-controlled Video Sequencer

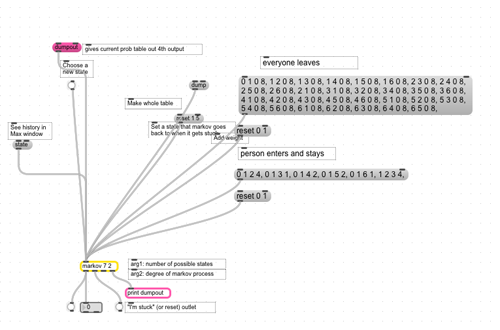

The software for this project is broken up into two sections: a Markov chain state machine and a video queueing system. The video above demonstrates the first iteration of the video queueing system, which is controlled by OSC messages coming from openTSPS, an open-source people-tracking application built on OpenCV and openFrameworks. In short, the Max/MSP application queues up a video based on how many objects the openTSPS application is tracking.
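The count-to-clip rule can be sketched in a few lines. This is only an illustration in Python, not the actual Max patch: the clip file names and the `clip_for_count` helper are hypothetical, and the number of tracked people is assumed to have already been read from the incoming OSC data.

```python
# Hypothetical clip names, ordered by how many people are being tracked.
CLIPS = ["alone.mov", "one_visitor.mov", "small_group.mov", "crowd.mov"]


def clip_for_count(count, clips=CLIPS):
    """Pick the clip to queue for a given number of tracked people."""
    if count < 0:
        raise ValueError("count cannot be negative")
    # Any count beyond the last entry reuses the final clip.
    return clips[min(count, len(clips) - 1)]


if __name__ == "__main__":
    for n in (0, 1, 2, 7):
        print(n, "->", clip_for_count(n))
```

Clamping to the last clip keeps the sequencer from failing when more people walk in than there are clips.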

The second part of the application is a probability-based state transition machine that will be responsible for selecting which emotional state will be presented to the audience. At the core of the state transition machine is an external object called ‘markov’, written by Nao Tokui. Mapping out the probability of each possible state transition based on a history of previous state transitions will require much thought and time.
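As a rough illustration of the idea, here is a minimal first-order Markov chain in Python. It is a sketch under assumptions, not the ‘markov’ external itself: the state names and probabilities are invented, and it only looks at the current state rather than a longer history of transitions.

```python
import random

# Hypothetical emotional states and transition probabilities.
# Each row lists the chance of moving from one state to the next and sums to 1.
TRANSITIONS = {
    "calm":    {"calm": 0.5, "anxious": 0.3, "elated": 0.2},
    "anxious": {"calm": 0.4, "anxious": 0.4, "elated": 0.2},
    "elated":  {"calm": 0.3, "anxious": 0.2, "elated": 0.5},
}


def next_state(current, rng):
    """Draw the next state using the weights in the current state's row."""
    row = TRANSITIONS[current]
    states = list(row)
    weights = [row[s] for s in states]
    return rng.choices(states, weights=weights, k=1)[0]


def walk(start, steps, seed=None):
    """Return the sequence of states visited, starting from `start`."""
    rng = random.Random(seed)
    path = [start]
    for _ in range(steps):
        path.append(next_state(path[-1], rng))
    return path


if __name__ == "__main__":
    print(walk("calm", 10, seed=1))
```

Taking history into account would mean keying the table on the last two or more states instead of one, which is where most of the mapping effort is likely to go.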

Sound Design

Along with queueing up different video clips, the max patch will be responsible for controlling the different filters and effects that the actor’s voice will be passed through. For the most part, this section of the patch will rely on different groups of signal degradation objects and resonators~.

Actor Confirmed

Sam Perry has confirmed that he’d like to collaborate on this project. His character The Moon Baby is fragile, grotesque, self-obsessed and vain. These characteristics mesh well with the emotional state changes shown in the project.

Final Project Milestone One: Jake Berntsen

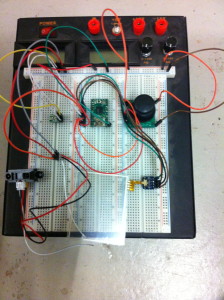

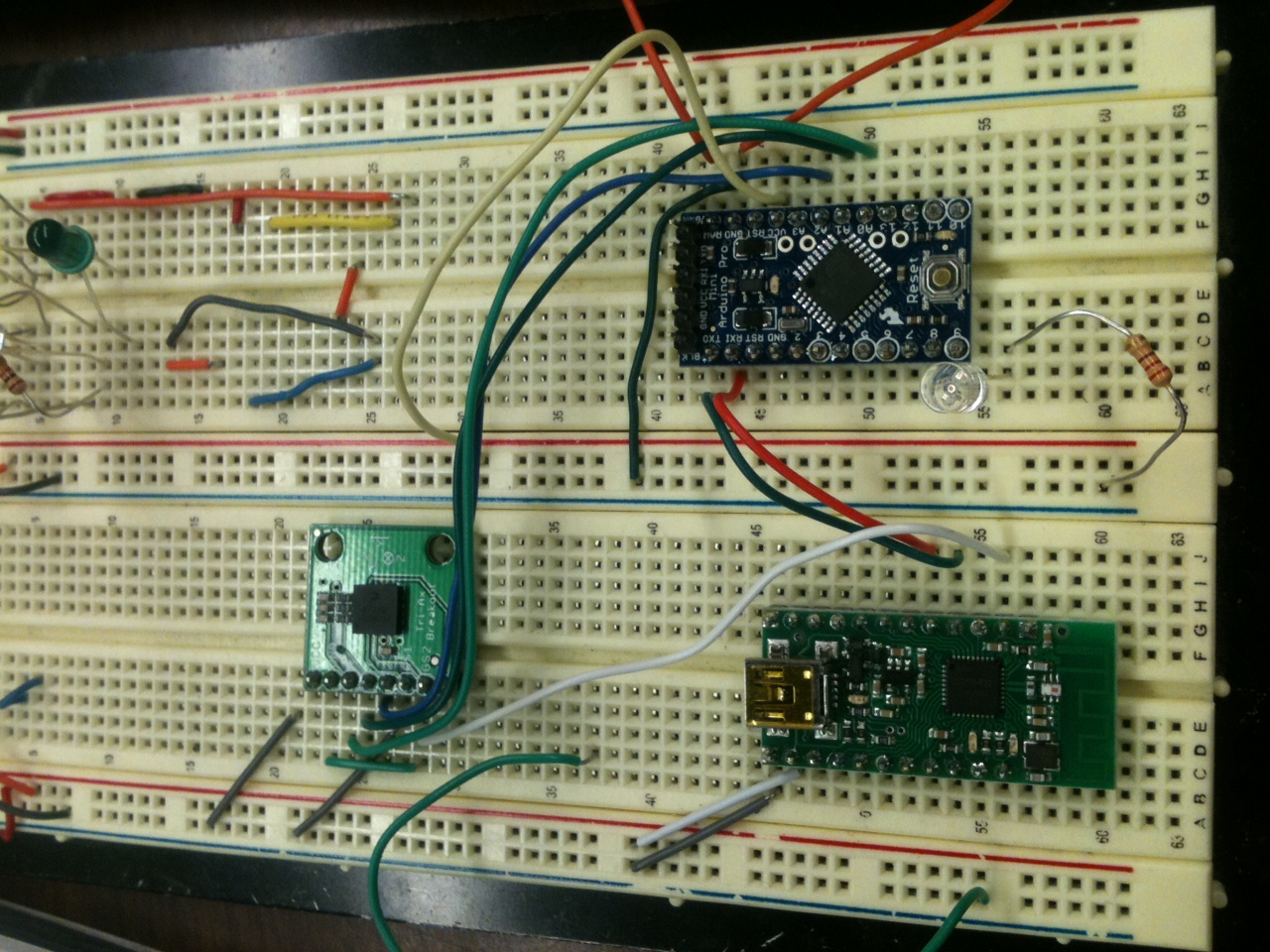

My struggles thus far in this class have rested almost entirely on the physical side of things; I’m relatively comfortable with the relevant software, but my ability to actually build devices is much less developed, to say the least. With this in mind, I decided to focus the early milestones of my final project entirely on the most technically challenging aspects in terms of electrical engineering. For my project, this meant getting all of the analog sensors running into my Teensy to obtain data I could manipulate in Max, which required a lot of prototyping on a breadboard.

After exploring a wide variety of sensors, I found a few that seemed to give me a sharp control of the data received by Max. One of my goals for this project is to create a controller that offers a more nuanced musical command than the current status quo, and I believe that the secret to this lies within more sensitive sensors.

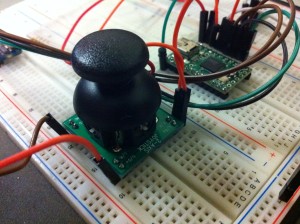

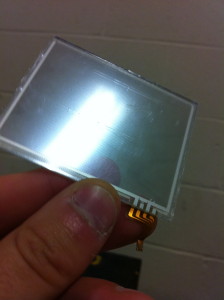

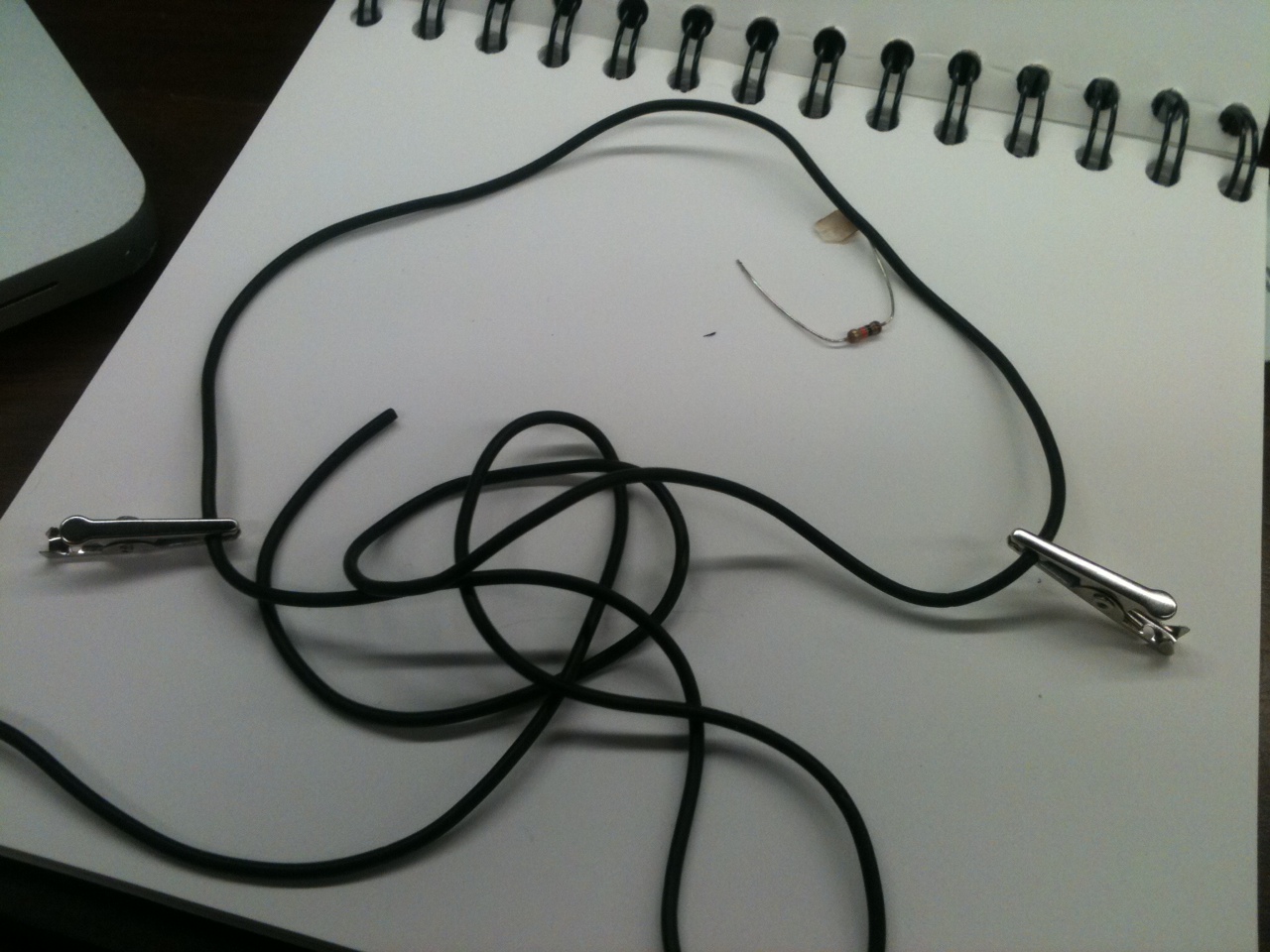

The sensors I chose are pictured below: Joysticks, Distance Sensors, Light Sensors, and a Trackpad.

All of the shown sensors have proven to work dependably, with the exception of some confusion regarding the input sensors of the trackpad. The trackpad I am using is from the Nintendo DS gaming device, and while it’s relatively simple to get data into Arduino, I’m having trouble getting data all the way into Max.

The other hardware challenge that I was facing this week was fixing the MPK Mini device that I planned to incorporate into my controller. The problem with it was a completely detached mini USB port that is essential to the usage of the controller. Connecting a new port to the board is a relatively simple soldering job, and I successfully completed this task despite my lack of experience with solder. However, connecting the five pins that are essential for getting data from the USB is a much more complicated task, and after failing multiple times, I decided to train myself a bit in soldering before continuing. I’ve not yet seen success, but I’ve improved greatly in the past few days alone and feel confident that I will have the device working within the week.

If I continue working at this rate, I do believe that I will finish my project as scheduled. While I was anticipating only being able to use the sensors that I could figure out how to use, I instead was able to make most of the ones I tried work with ease and truly choose the best one.

Final Project Milestone 1 – Job Bedford

Project: SoundWaves – Wearable wireless instrument enabling the user to synthesize rhythmic sounds through dance.

Milestone 1 Goal:

Decide on Sensors and music playing software.

Sensors:

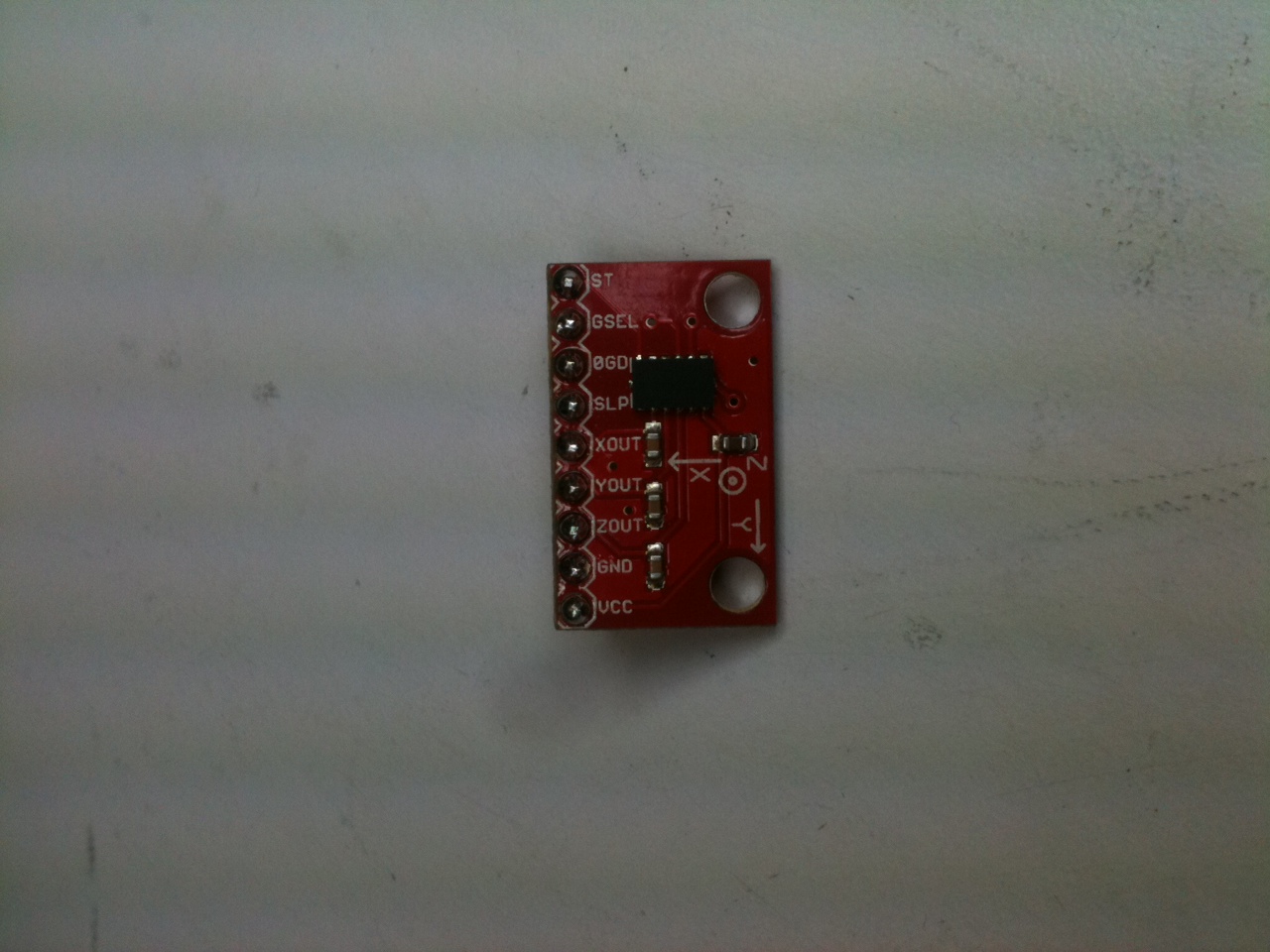

Decided to use: Conductive Rubber, Accelerometers, and homemade force-sensitive resistors.

Video:

Prototyping:

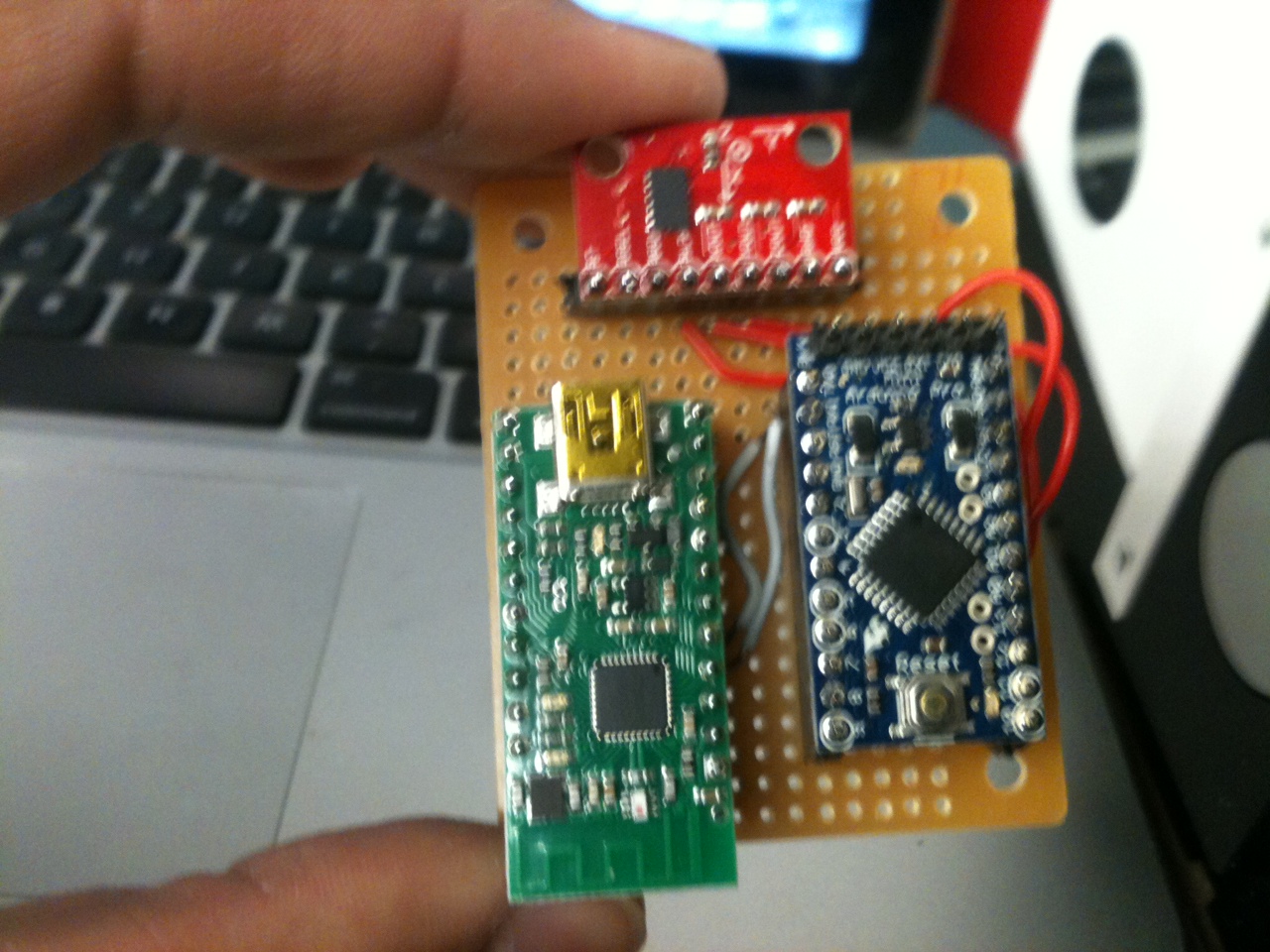

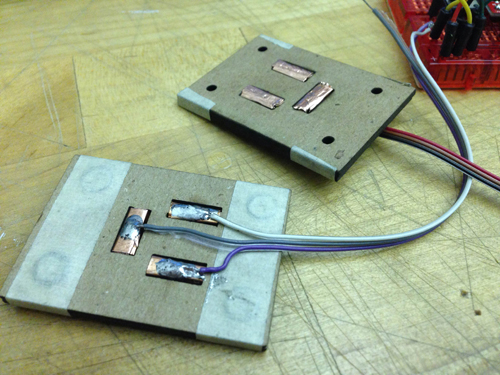

Perf Board

Prototype 2:

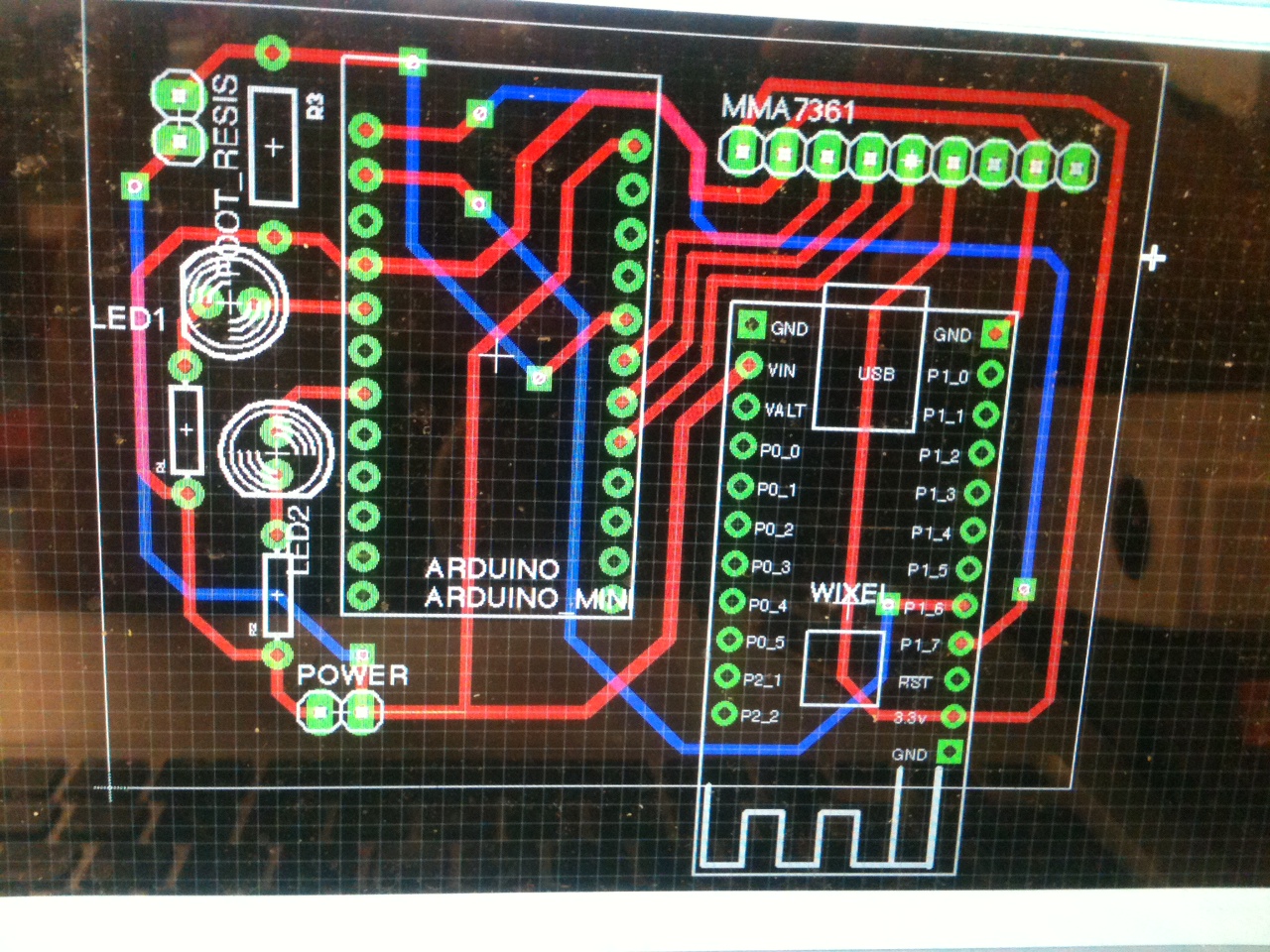

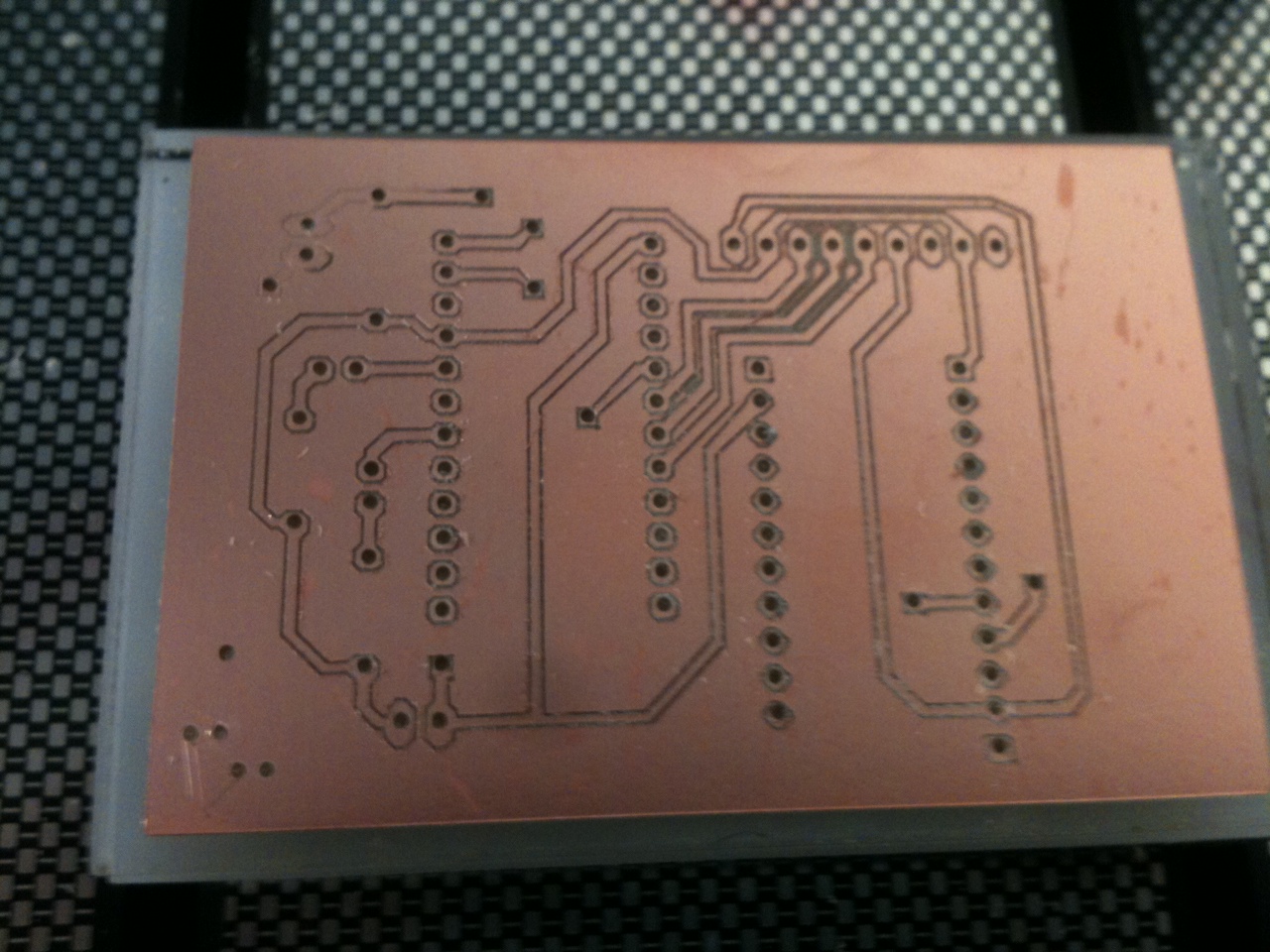

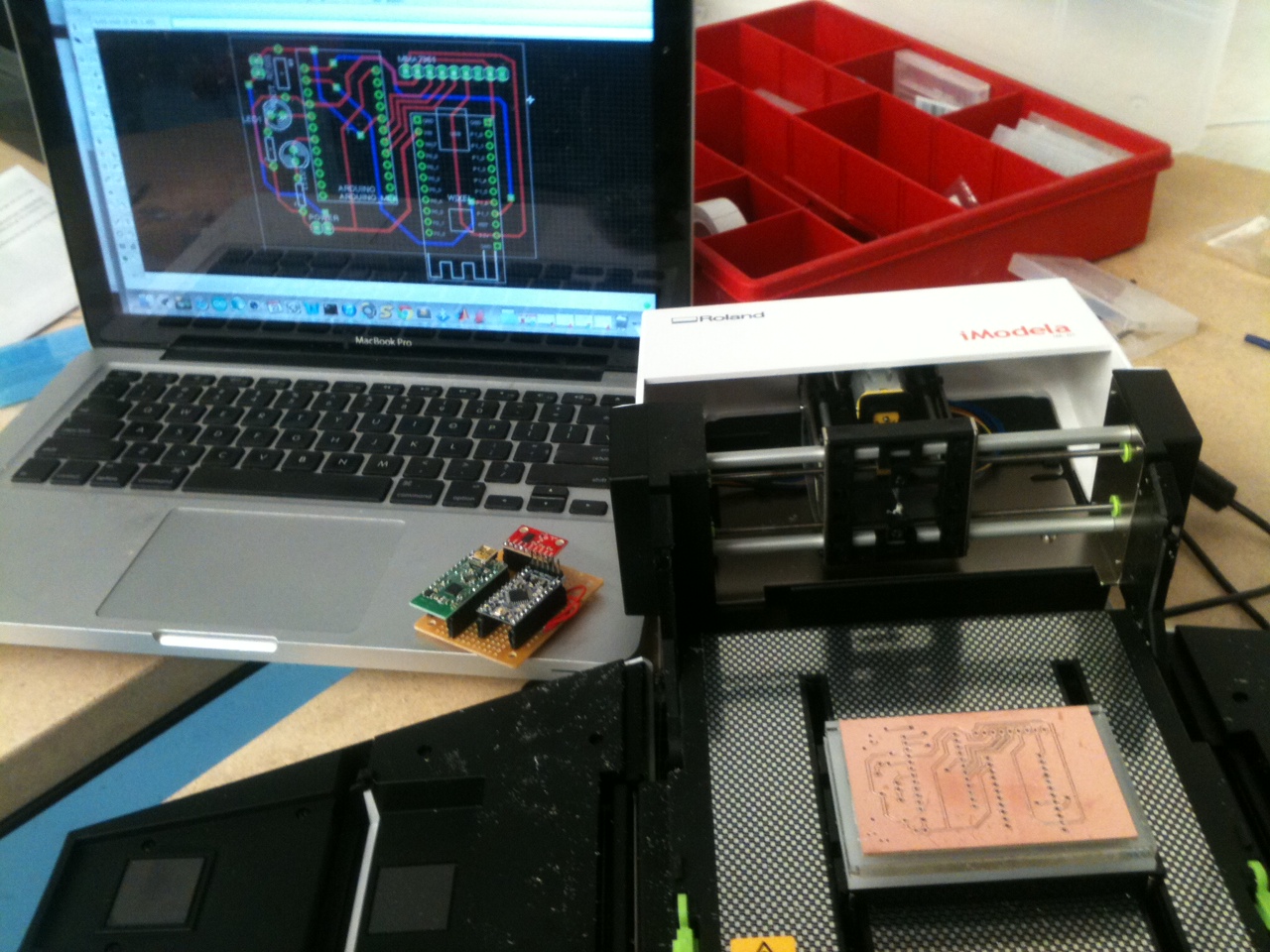

Used CNC router to cut boards

Fail. The CNC router is not precise enough to cut 24 mil traces with clearance between them. It is easier to make perf boards (I am considering ordering printed PCBs from a commercial board maker).

Hardware: Mounted on shin guards, with force-sensitive resistors in the shoes. Dance is more about lower-body movement than upper-body movement.

Music Playing Software:

Choices

Synthesised Audio: Max_instruments_PeRcolate_06 – traditional instruments artificially reproduced in Max, with a level of sensitivity to adjust elements such as pitch, hardness, reed stiffness, etc. Denied due to lack of quick adjustability to changes in values; still under consideration.

Samplers: Investigated the use of free samplers such as Independence and SampleTank, but the samplers became too convoluted to set up, and the free trial expires after 10 days. Denied.

Insystem Samplers: Audio DSL synth using the midi-out function in Max. Overcomplicated. Denied.

Resonator/Wikonator: Still under consideration.

Simple Hardcoded Max mapping of gestures to sounds and sound files of 808 drum machine: Accepted.
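A hardcoded gesture-to-sound mapping of this kind might look like the following Python sketch. It is not the Max patch: the sensor names, thresholds (a 0 to 1023 reading range is assumed) and sample file names are all hypothetical.

```python
# Hypothetical sensor names, thresholds and 808 sample files.
MAPPING = {
    "left_heel": (600, "808_kick.wav"),
    "right_heel": (600, "808_snare.wav"),
    "left_shin": (700, "808_hihat.wav"),
}


class GestureTrigger:
    """Fire a sample once each time a sensor reading rises past its threshold."""

    def __init__(self, mapping=MAPPING):
        self.mapping = mapping
        self.above = {name: False for name in mapping}

    def update(self, name, value):
        """Return the sample to play for this reading, or None."""
        threshold, sample = self.mapping[name]
        was_above = self.above[name]
        self.above[name] = value >= threshold
        # Trigger only on the rising edge so a held press does not retrigger.
        if self.above[name] and not was_above:
            return sample
        return None


if __name__ == "__main__":
    trigger = GestureTrigger()
    for reading in (100, 800, 820, 90, 900):
        print(reading, "->", trigger.update("left_heel", reading))
```

Triggering on the rising edge rather than on every reading above the threshold means one stomp plays one drum hit.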

Wireless:

Wixels are useful, but only one channel can be hooked up to the system; reading multiple channels on the same line produces confusing serial readings.

Bluetooth: personalized channels, but expensive.

Bluetooth and Wixel combination: under consideration.

Final Project Milestone 1 – Mauricio Contreras

My first milestone was to procure all the software tools necessary for at least simulating the motion of a robotic arm within a framework that has previously been used by Ali Momeni. Namely, this means interacting with Rhinoceros 3D, the Grasshopper and HAL plugins, and ABB RobotStudio. I’ve now got all these pieces of software up and running in a virtual image of Windows (of the above, only Rhino exists for OS X, as a beta) and have a basic understanding of all of them. I had a basic command of Rhino through previous coursework, and have now done tutorials for Grasshopper by digitaltoolbox.info and the Future CNC website for HAL and RobotStudio. I’ve got CAD files that represent the geometry of the robot, can move it freehand with RobotStudio, and am learning to rotate the different joints in Rhino from Grasshopper.

Update (12/11/2013): added presentation used the day of the milestone critique.

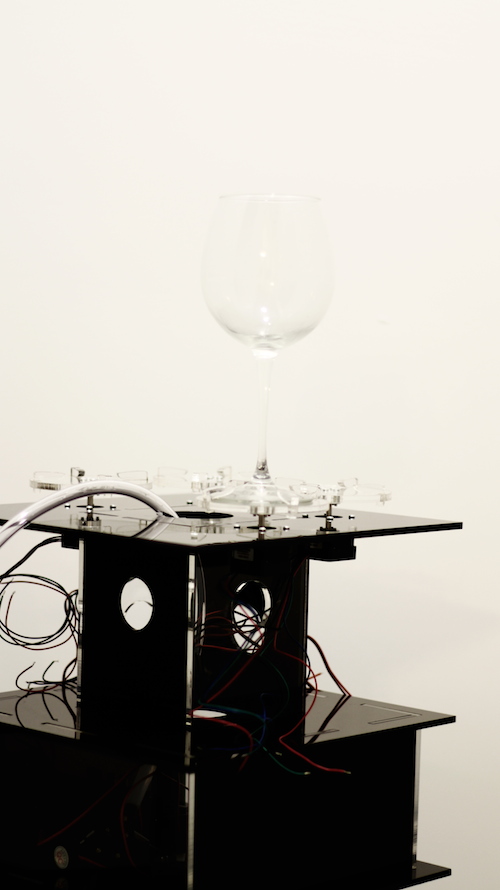

Final Project Milestone 1 – Ding Xu

We live in a world surrounded by different environments. Just as music and human emotions influence each other, environments also carry parameters that can take part in that process. In certain contexts, the environment may be a good indicator for generating a “mood” that is transferred into music and affects people’s emotions. Basically, the purpose of this project is to build a portable music player box that can sense the surrounding environment and generate specific songs for its users.

This device has two modes: search mode and generate mode. In the first mode, input data will be transformed into specific tags according to its type and value; these tags are then used to match a series of existing songs for the user. In the second mode, two cameras are used to capture ambient images to create a piece of generative music. I will finish the second mode in this class.

In mode 2, two types of images will be used for each song. The first is a music image captured by an adjustable camera; the content of this image will be divided into several blocks according to their edge pixels, and several notes will be generated according to the blocks’ colors. The second is a texture image captured by a camera with a pocket microscope. When the device is placed on different materials, the corresponding texture will be recognized and a different instrument filter will be attached to the music.
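The color-to-note step could work along these lines. This Python sketch is only one possible mapping and makes several assumptions: each block is given as a list of RGB pixels, the hue of the block’s average color picks a degree of a C major scale, and the edge-based block segmentation is not shown. The function names are hypothetical.

```python
import colorsys

# Assumed scale: one octave of C major as MIDI note numbers.
C_MAJOR = [60, 62, 64, 65, 67, 69, 71]


def average_color(pixels):
    """Mean (r, g, b) of a block given as a list of 0-255 RGB tuples."""
    n = len(pixels)
    r = sum(p[0] for p in pixels) / n
    g = sum(p[1] for p in pixels) / n
    b = sum(p[2] for p in pixels) / n
    return r, g, b


def color_to_note(rgb, scale=C_MAJOR):
    """Map a color's hue (0.0 to just under 1.0) onto a scale degree."""
    r, g, b = (c / 255.0 for c in rgb)
    hue, _saturation, _value = colorsys.rgb_to_hsv(r, g, b)
    index = min(int(hue * len(scale)), len(scale) - 1)
    return scale[index]


def block_to_note(pixels, scale=C_MAJOR):
    """Note for one image block, based on its average color."""
    return color_to_note(average_color(pixels), scale)


if __name__ == "__main__":
    red_block = [(255, 0, 0)] * 4
    blue_block = [(0, 0, 255)] * 4
    print(block_to_note(red_block), block_to_note(blue_block))
```

Restricting the output to a fixed scale is a design choice that keeps arbitrary images sounding consonant; saturation and brightness are left unused here and could drive velocity or duration.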

Below is a list of things I finished in the first week:

- System design

- Platform test and selection of specific hardware and software for the project

- Get familiar with the Raspberry Pi OS and openFrameworks

- Experiment with data transmission among gadgets

- Serial communication between Arduino/Teensy and openFrameworks

- Get video from a camera and play sound in openFrameworks

Platform test:

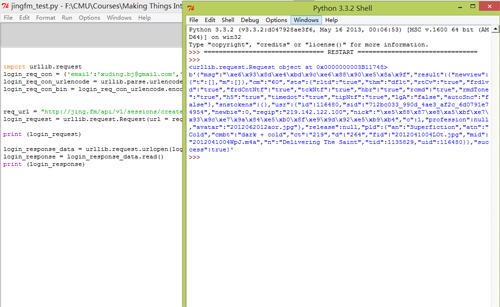

Since this device is a portable box that needs to play without the support of a PC, I want to use a Raspberry Pi, an Arduino and sensors (including a camera and data sensors) for this project. As for the software platform, I chose openFrameworks as the main development environment and plan to use PD to generate sound notes, connecting it with openFrameworks for control. Moreover, I am more comfortable with C++ than Python, since it took me much longer to implement an HTTP request to log into Jing.FM in Python.

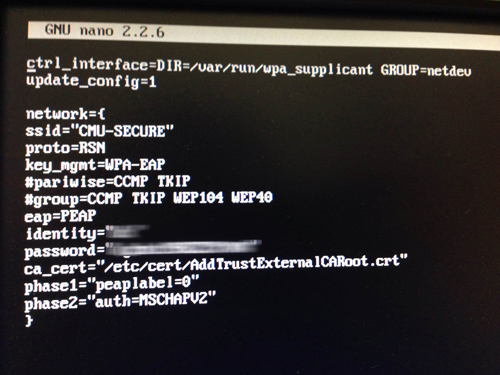

Raspberry Pi Network setting

As a newcomer to the Raspberry Pi with no knowledge of Linux, I ran into problems connecting the Pi to the CMU-secure wifi, since the majority of tutorials cover how to connect the Pi to a home router, and CMU-secure is not that case. I finally figured it out with the help of a website explaining the specific parameters in wpa_supplicant.conf, the information provided on the CMU Computing Services website, and by copying the certificate file onto the Pi. I also want to mention that every piece of hardware that uses CMU wifi needs to be registered at netreg.net.cmu.edu/, and the registration takes effect after 30 minutes.

Data Transmission between gadget and arduino

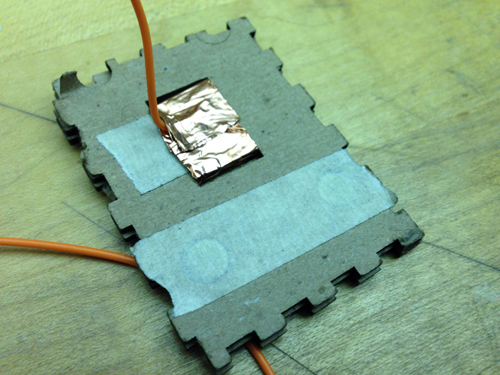

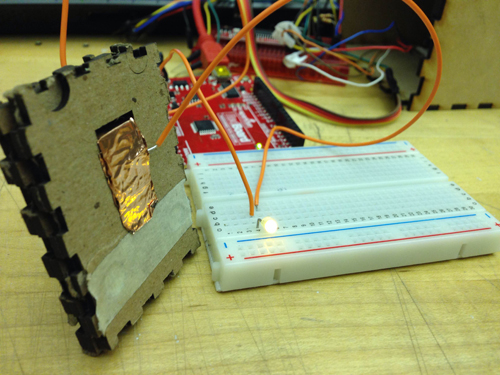

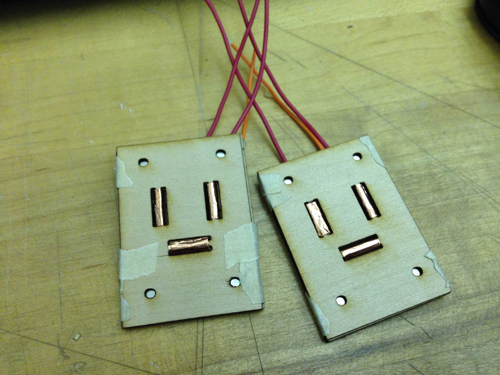

Experiment 1: Two small magnets attached to the gadget, with a height of 4 mm

Experiment 2: Four magnets attached to the gadget, with heights of 2.9 mm and 3.7 mm

With prototype 2 it is easier to attach the gadgets together, but all of these gadgets fail to transmit accurate data unless their two sides are pressed together. A new structure needs to be built to solve this problem.

Connecting Arduino with openFrameworks via serial communication

Mapping two pieces of music to different sensor input values

Final Project Milestone 1 – David Lu

Milestone 1: make sensors

The left circle has a force/position sensitive resistor, the black thing along the perimeter. The right circle has 3 concentric metal meshes with a contact mic in the middle. The metal meshes sense change in capacitance (i.e., the proximity of my finger) when connected to the Teensy microcontroller.

Final Project Milestone 1 – Ziyun Peng

My first milestone is to test out the sensors I’m interested in using.

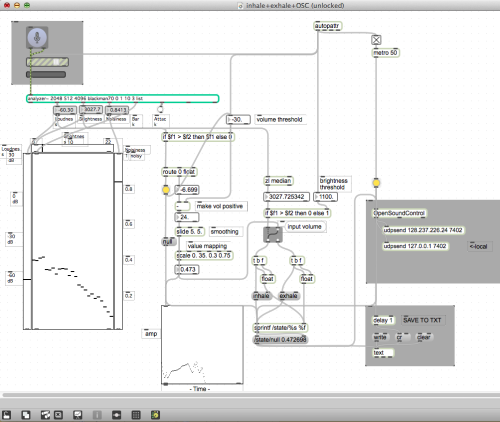

Breath Sensor: For now I’m still unable to find any off-the-shelf sensor that can differentiate between inhale and exhale. Hence I took the approach of using a homemade microphone to capture audio and Tristan Jehan’s analyzer object, with which I found that the main difference between exhale and inhale is the brightness. The nice thing about using a microphone is that I can also easily get the amplitude of the breath, which indicates the velocity of the breath. The problem with this method is that it needs threshold calibration each time you change the environment, and the homemade mic seems to be moody: its performance is unstable on battery power.
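The two features mentioned here, brightness and amplitude, can be approximated as follows. This Python sketch is not a reimplementation of the analyzer object: brightness is assumed to be the spectral centroid (a common definition), amplitude is taken as RMS, and the function names and thresholds are hypothetical. Which of inhale or exhale lands on the “bright” side is deliberately left to calibration.

```python
import math


def rms(frame):
    """Root-mean-square amplitude of one audio frame."""
    return math.sqrt(sum(x * x for x in frame) / len(frame))


def spectral_centroid(frame, sample_rate):
    """Magnitude-weighted mean frequency (Hz), using a naive DFT of the frame."""
    n = len(frame)
    weighted = 0.0
    total = 0.0
    for k in range(n // 2 + 1):
        re = sum(x * math.cos(2 * math.pi * k * i / n) for i, x in enumerate(frame))
        im = -sum(x * math.sin(2 * math.pi * k * i / n) for i, x in enumerate(frame))
        mag = math.hypot(re, im)
        weighted += mag * (k * sample_rate / n)
        total += mag
    return weighted / total if total > 0 else 0.0


def describe_breath(frame, sample_rate, centroid_threshold_hz, silence_rms=0.01):
    """Return ("bright" or "dark", amplitude), or None if the frame is too quiet.

    Both thresholds are placeholders that would need recalibrating whenever
    the environment changes.
    """
    level = rms(frame)
    if level < silence_rms:
        return None
    centroid = spectral_centroid(frame, sample_rate)
    side = "bright" if centroid >= centroid_threshold_hz else "dark"
    return side, level
```

The naive DFT is slow but dependency-free; a real-time version would use an FFT on short windowed frames.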

Breath Sensor from kaikai on Vimeo.

–

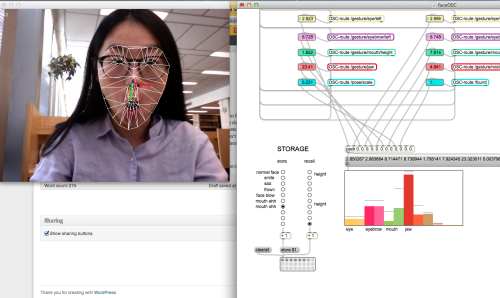

Muscle Sensor: It works great on the bicep (although I don’t have big ones), but the readings on the face are subtle. It’s only effective for big facial expressions; face yoga worked. It also works for smiling, opening the mouth, and frowning (if you place the electrodes just right). During the experiments, I found that having sticky electrodes on your face is not a comfortable experience, but alternatives like stretch fabric combined with conductive fabric could possibly solve the problem, which is my next step. Also, the readings don’t really differ from one expression to another, meaning you can’t tell whether it’s an open mouth or a smile just by looking at a single reading. Either more collecting points need to be added, or the sensor could be coupled with faceOSC, which I think is the more likely approach.

FaceOSC: I recorded several facial expressions and compared the value arrays. The results show that mouth width, jaw and nostrils are the most reactive variables. It doesn’t perform very well with the face yoga faces, but it does a better job of differentiating expressions since it offers more parameters to refer to.

The next step for me is to keep playing around with the sensors, try to figure out a more stable sensor solution (an organic combination of them) and put them together into one compact system.

Final Project Milestone 1 – Haochuan Liu

Plan for milestone 1

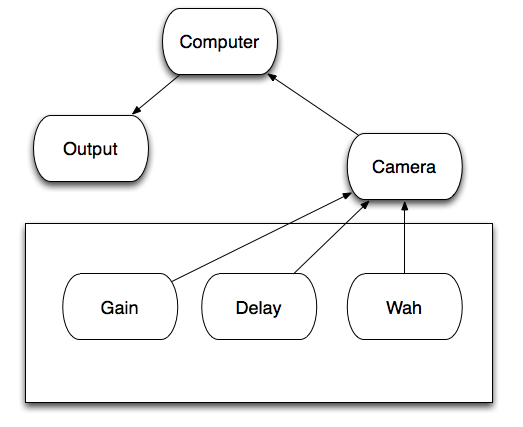

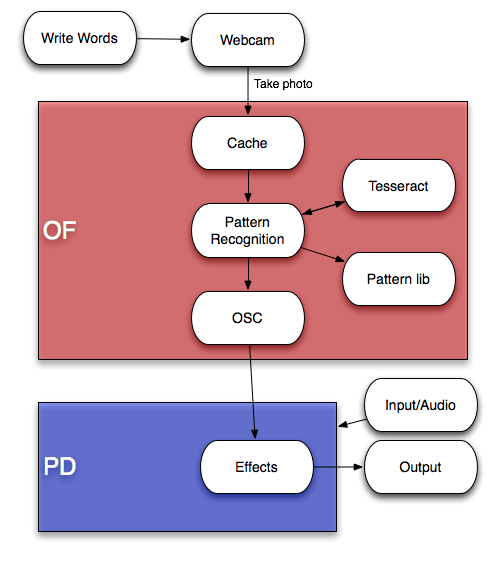

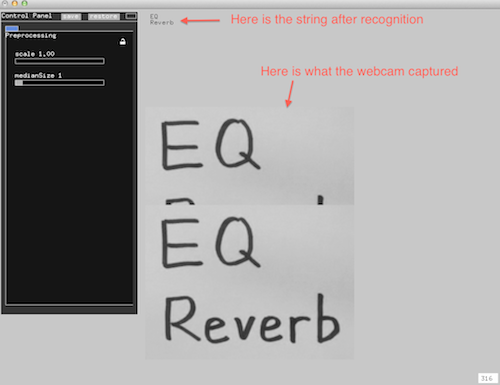

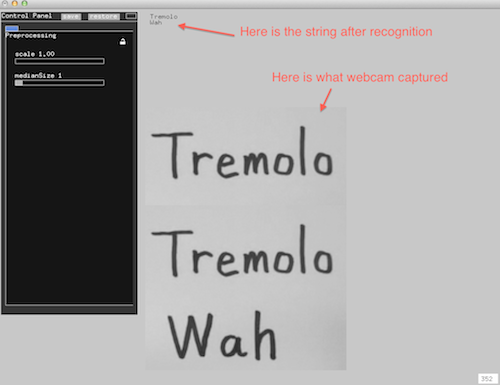

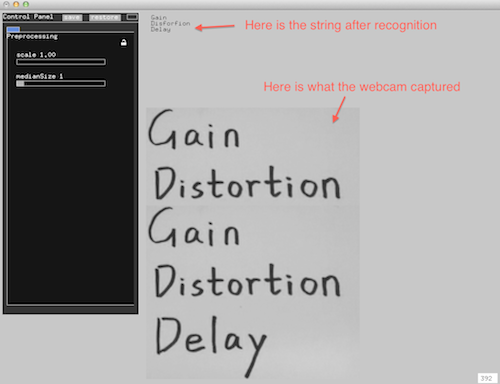

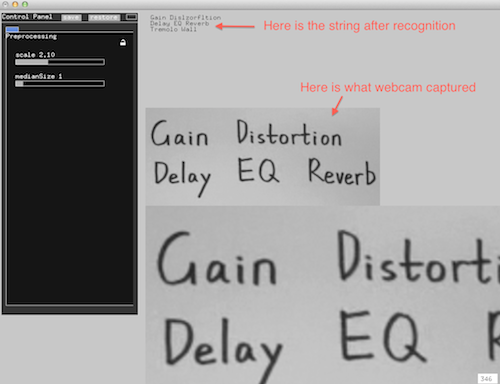

The plan for milestone 1 is to build the basic system of the drawable stompbox. First, the webcam captures an image of what you have drawn on paper, then the computer recognizes the words in the image. After a pattern comparison, openFrameworks sends a message to PureData via OSC. PureData then finds the matching pre-written effect and adds it to your audio/live input.

Here are the technical details of this system:

After you write words on white paper, the webcam takes a picture of it and stores the photo in a cache. The program in openFrameworks loads the photo, converts the word in the photo to a string using optical character recognition via Tesseract, and stores it in the program. The string is compared against the pattern library to see whether the stompbox you drew is in the library. If it is, the program sends a message via the OSC addon to let PureData enable this effect. You can use either audio files or live input in PureData, and PureData will add the effect you have drawn to your sound.

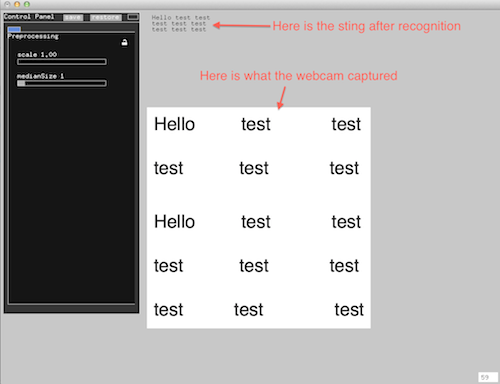

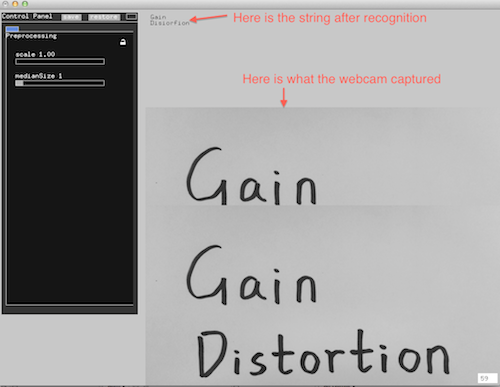

Here are some OCR tests in OF:

About the pattern library on OF:

After a number of OCR tests, the accuracy is pretty high but not perfect, so a pattern library is needed for better recognition. For example, Tesseract often cannot distinguish “i” from “l”, “t” from “f”, or “t” from “l”. So the library will decide that what you’ve drawn is “Distortion” when the recognition result is “Dlstrotlon”, “Disforfion” or “Dislorlion”.
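One way to get this kind of tolerance is fuzzy string matching. The Python sketch below uses the standard library’s `difflib` as a stand-in for the pattern library, which may well work differently (for example, as an explicit list of known misreadings). The `match_effect` helper and the 0.6 cutoff are assumptions; the effect names are the five effects built for this milestone.

```python
import difflib

# The five effects currently available in the PureData patch.
EFFECTS = ["Boost", "Tremolo", "Delay", "Wah", "Distortion"]


def match_effect(ocr_text, effects=EFFECTS, cutoff=0.6):
    """Return the effect name closest to the OCR result, or None if nothing is close."""
    lookup = {name.lower(): name for name in effects}
    hits = difflib.get_close_matches(
        ocr_text.strip().lower(), list(lookup), n=1, cutoff=cutoff
    )
    return lookup[hits[0]] if hits else None


if __name__ == "__main__":
    for text in ("Dlstrotlon", "Disforfion", "Dislorlion", "zzzz"):
        print(text, "->", match_effect(text))
```

Raising the cutoff makes the matcher stricter, which matters once the library contains effect names that resemble each other.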

Effects in PureData:

So far, I’ve made five simple effects in PureData: Boost, Tremolo, Delay, Wah, and Distortion.

Here is a video demo of what I’ve done for this project:

Hybrid Instrument Final Project Milestone 1 from Haochuan Liu on Vimeo.