Group Project: “Introducing OSC, with CollabJazz” by Robert Kotcher & Can Ozbay (part 1)

Project Description

Our assignment was to showcase how OSC can be used to remotely control or connect media objects or computers. After an hour of brainstorming, we decided to build a collaborative jazz drum kit in Pure Data, and we implemented the following control parameters:

- HH, Snare, Kick Volume & Texture controls,

- Cutoff Frequency & Q controls,

- Busyness & Swing intensity controls.

In total we added 13 parameters, producing a jazz drum machine that every connected user can control.

Later, we wrote a client app, also in Pure Data, which can be installed on the computers of all 13 users to control the parameters we created. There is more in the video.
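To make the control path concrete, here is a minimal Python sketch of what one parameter update could look like on the wire. It is not our Pure Data patch: it hand-encodes an OSC 1.0 message with a single float argument and sends it over UDP. The address `/drums/swing`, the host, and port 9000 are hypothetical placeholders.

```python
import socket
import struct


def osc_pad(data: bytes) -> bytes:
    """Null-terminate and pad to a multiple of 4 bytes, as OSC 1.0 requires."""
    data += b"\x00"
    return data + b"\x00" * (-len(data) % 4)


def osc_message(address: str, value: float) -> bytes:
    """Encode an OSC message carrying one big-endian float32 argument."""
    return (
        osc_pad(address.encode("ascii"))  # address pattern, e.g. "/drums/swing"
        + osc_pad(b",f")                  # type tag string: one float
        + struct.pack(">f", value)        # the argument itself
    )


def send_parameter(host: str, port: int, address: str, value: float) -> None:
    """Send one parameter update to the drum machine over UDP."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(osc_message(address, value), (host, port))


# Hypothetical usage: send_parameter("192.168.1.10", 9000, "/drums/swing", 0.5)
```

Each of the 13 parameters would then get its own address, so a client only needs to know the server's host, port, and the address of the parameter it owns.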

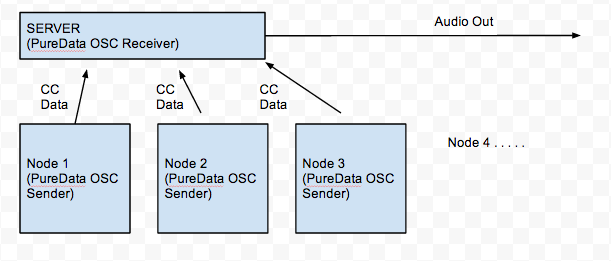

Connectivity Diagram

Potential Ideas

This system could be used by a jazz orchestra to create a cumulative rhythm, or by an electronic music ensemble to control the overall tempo of the current track.

Another great application would be online collaborative rhythm exercises.

The system could also be integrated into DAW software, which would let an entire band work on a single project.

Problems to Be Addressed

Currently, the nodes can only talk to the server, and the server is the sound output. However, the system could be improved to provide a two-way connection, which would dramatically expand its capabilities. For example, the server could send the current volume data to everyone.
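One way the two-way connection could work is for the server to remember every client that has contacted it and fan state updates back out. The sketch below is only an illustration of that idea, not something our prototype does; the class name `VolumeBroadcaster` and the injected `send` callable are hypothetical.

```python
class VolumeBroadcaster:
    """Remembers which clients have connected and fans updates out to them."""

    def __init__(self, send):
        # send(payload: bytes, address: tuple) delivers one datagram.
        # With a real UDP socket this could simply be sock.sendto.
        self.send = send
        self.clients = set()

    def register(self, address):
        """Record a client (host, port) the first time it talks to the server."""
        self.clients.add(address)

    def broadcast(self, payload):
        """Send the same payload to every known client; return how many."""
        for address in sorted(self.clients):
            self.send(payload, address)
        return len(self.clients)
```

Passing the send function in keeps the fan-out logic independent of the transport, so it can be tried without any network at all.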

Also, if the system were implemented for a jazz improv orchestra, the entire band would need control over the tempo, not just one node. This would be easy to implement, but with our prototype all we wanted to do was create an experimental drum machine.

Group Project: Wireless Data + Wireless Video System (part 1)

Idea Proposals:

1. Interactive Ceiling Robot

Wireless => Portability. To showcase the substantial reach of wireless control, a robot with a camera on a high ceiling interacts with the people beneath it. The robot moves around in the high shadows, feeding video of the ground below in various directions. When no one is underneath, the robot lowers a small ball of yarn or a candy bar on a string to just above ground level, enticing people toward it. As soon as movement is detected or a person goes for the bait, the robot reels the bait back up out of reach. The video feed captures the person’s dismay and distress. The process then repeats.

The ceiling is a new frontier, often unexpected and unnoticed. A robot, supposedly a machine subservient to humans, now turns the tables and mocks them from its noble high perch. From above it claims a bird’s-eye view, monitoring like Big Brother or looking down upon those beneath: a reversal of the power structure.

One possible robustness feature is a versatile clamping mechanism that easily hooks onto various pipes or structural supports along the ceiling. Others are internal cushioning in case of falls and a wirelessly controlled camera with an easy-to-use user interface.

2. “Re-Enter”

We will place the wireless video system near an entrance and record people walking into a building. A delayed playback of that video will be projected inside the building, near the entrance. Some visitors who happen to pass the playback location may see a video of themselves entering the building in the past. The project is a mobile version of Dan Graham’s “Time Delay Room”. Users will be able to affect the playback time and the angle of the camera.
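The delayed playback can be thought of as a first-in, first-out buffer of frames. The following Python sketch shows that idea only; the class name `DelayBuffer` is hypothetical, frames are stand-in values, and the delay is counted in frames (for example, delay in seconds times the frame rate).

```python
from collections import deque


class DelayBuffer:
    """Holds incoming frames and releases each one `delay_frames` pushes later."""

    def __init__(self, delay_frames):
        self.delay_frames = delay_frames
        self.frames = deque()

    def push(self, frame):
        """Store the newest frame; return the delayed frame, or None while filling."""
        self.frames.append(frame)
        if len(self.frames) > self.delay_frames:
            return self.frames.popleft()
        return None


# With a delay of 3 frames, frame 0 comes back out on the fourth push.
buffer = DelayBuffer(3)
outputs = [buffer.push(i) for i in range(6)]
```

Letting users change the playback time would then amount to changing how many frames the buffer holds before it starts releasing them.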

The project aims to confuse visitors just enough to stop their quick routine. By introducing the possibility of seeing a moving image of themselves, visitors are forced to contemplate their current and near-past action. This team, built of mechanical and electrical engineers, artists and A/V experts, is well equipped to take on the challenges presented by this project, including confronting their fast-paced schedules.

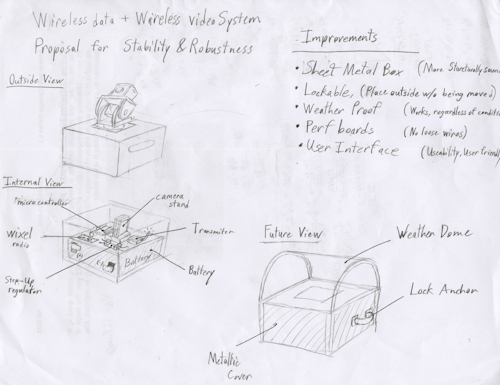

A main challenge of this proposal will be to build a wireless video transmitter that can handle outdoor weather and be secured against theft. See drawings below for proposed changes. To deal with weather, the team will build a temporary vestibule to shield the transmitter from rain and wind. To prevent theft, the team will include anchor points on the wireless box that can be used to lock it to a permanent structure nearby.

3. Yelling Robot

We will mount the portable camera on a four-wheeled vehicle and place two sensors on it to capture applause coming from opposite directions. The people will be divided into two groups, with the vehicle initially placed in the middle. The vehicle will then move toward the group with the louder applause while capturing the faces of the winning group.
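The steering rule described above could be as simple as comparing the two sound levels. Here is a rough Python sketch under our own assumptions: levels are normalized to the range 0 to 1, and a small dead band (a hypothetical `dead_band` parameter) keeps the vehicle still when the two groups are about equally loud.

```python
def steer(level_a, level_b, dead_band=0.05):
    """Return -1 to drive toward group A, +1 toward group B, 0 to stay put.

    level_a and level_b are assumed to be applause levels normalized to 0..1.
    """
    difference = level_b - level_a
    if abs(difference) <= dead_band:
        return 0  # too close to call, so do not move
    return 1 if difference > 0 else -1
```

Without the dead band the vehicle would twitch back and forth on every small fluctuation in the sensor readings.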

This project aims to simulate competition between two groups, like a wireless edition of a push-and-pull game. Since the projector will display the winning group, the other group may try their best to win the focus of the projected image, interacting more to make a louder sound.

The main challenge may be capturing the winning group’s faces and adjusting the camera angle as the vehicle moves back and forth toward whichever group is winning.

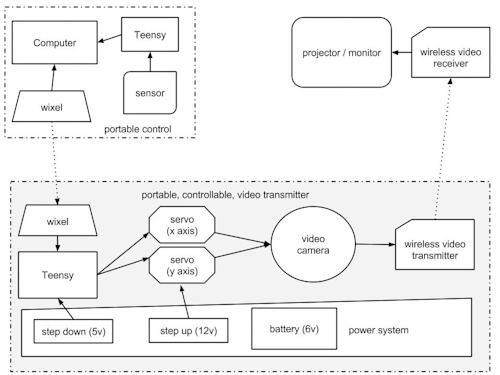

Diagram of improved, more robust, weatherproof box:

Participants: Job Bedford, Chris Williams, Ziyun Peng, Ding Xu, Jake Marsico

Group Project: Multi-Channel analog video recording system (part 1)

The project is centered around an 8-to-1 analog video multiplexer board. This board is a collaboration between Ray Kampmeier and Ali Momeni, and more information can be found here.

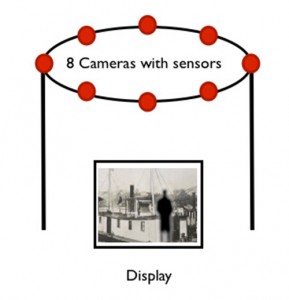

In the present setup, eight small analog video cameras (“surveillance” type) are connected as inputs to the board, and the output is connected to a monitor. An Arduino selects which of the eight inputs is routed to the output by mapping the reading of a distance sensor to a value between 1 and 8. Thus, one can cycle through the cameras simply by placing an object at a certain distance from the sensor. The connection diagram can be seen below:

A picture of the board with 8 inputs is displayed below (note the RCA connectors):

The original setup built for showcasing the project uses a box-shaped cardboard structure to hold a camera in each of its corners, with the cameras pointing at the center of the box (see below).

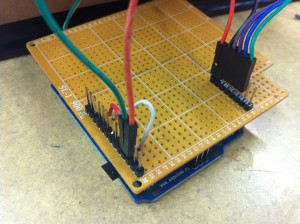

A simple “shield” board was designed to facilitate the interface between the Arduino, the distance sensor and the video mux.
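The mapping from distance to camera channel can be illustrated with a few lines of Python. This is a sketch of the idea rather than the Arduino firmware itself, and the 10 cm to 80 cm working range is an assumed placeholder, not a measured property of the sensor.

```python
def distance_to_channel(distance_cm, min_cm=10.0, max_cm=80.0, channels=8):
    """Map a distance reading to a camera channel numbered 1..channels.

    Readings outside the assumed working range are clamped to its ends.
    """
    clamped = min(max(distance_cm, min_cm), max_cm)
    fraction = (clamped - min_cm) / (max_cm - min_cm)
    # Split the range into equal bands; the far end belongs to the last band.
    return min(int(fraction * channels), channels - 1) + 1
```

With these assumed limits, an object at 45 cm sits halfway through the range and selects camera 5.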

Improvements

The current camera frame is made of cardboard, which is not the most robust of materials. A new frame will be constructed of aluminum bars assembled into a strong cube:

This is the cube being assembled:

Wire attachments for the cameras will be routed away from the box to a board for further processing.

Project Ideas

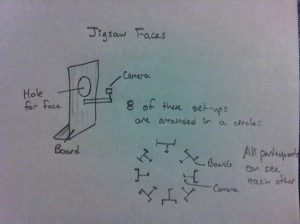

1) Jigsaw faces

Our faces hold a universal language. We propose combining the faces of eight people to create a universal face. Eight cameras are set up, one per person. The participants place their faces through a hole in a board so that the camera sees only the face. These setups are arranged in a circle so that all the participants can see each other. The eight faces are filmed, and a section of the face is selected from each person to combine into one jigsaw face. This jigsaw face is projected so that the participants can see it, which completes the feedback loop. The jigsaw face updates in real time, so as the participants share an experience, their individual expressions combine in the jigsaw face.

The Jigsaw Face will consist of a few boards (of wood) for people to put their face through. Each board will have a camera attachment and all the cameras will be attached to a central processor. The boards will be arranged so that people face each other across a circle. This will enable feedback among the participants.
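As a rough illustration of how the composite could be assembled, the Python sketch below takes one horizontal band from each camera frame. It assumes each frame is a list of pixel rows of equal height; the choice of horizontal bands is just one possible way to cut the jigsaw pieces.

```python
def jigsaw(frames):
    """Build a composite image by taking one horizontal band from each frame.

    frames is a list of images, each a list of rows, all with the same height.
    Band i of the composite (counted from the top) comes from frames[i].
    """
    count = len(frames)
    height = len(frames[0])
    composite = []
    for row in range(height):
        source = row * count // height  # which participant owns this row
        composite.append(frames[source][row])
    return composite
```

Running this on every new set of frames would give the real-time update described above, with each participant contributing a fixed slice of the shared face.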

2) The well of time: Time traveling instrument

I’m still thinking about what eight cameras mean, why we need them, and what we can do with them that is original. I assume that eight cameras can mean eight different views, and also eight distinct points in time. From there, I realized we can create a moment that mixes time periods: the user’s present image from each camera is combined with an old photo from the past, triggered by a motion or distance sensor attached to each camera.

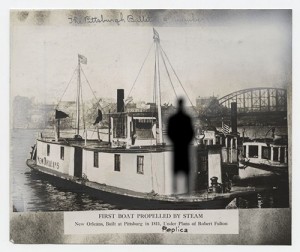

Here is a sample image of my idea. This image combines the present moment with the time when the computer science building was under construction.

And if a user stands at another camera, we could show an image like the one below: the moment the propeller steamboat was first introduced. Basically, this is an artistic way of time traveling. So the limitation of black-and-white cameras would not be a problem; in this case, it could even be a benefit.

For this idea, of course, we have to build a setting and an installation that look like this.

More precisely, it has this kind of structure.

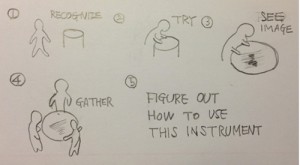

As a result, here is an example interaction scenario with a user, along with a diagram.

3) Object interventions

Face and body expressions can tell a lot about people and their feelings towards their surroundings or the objects they interact with. Imagine attaching eight cameras to a handheld object or a large sculpture. With eight cameras as the inputs, the one output will be the video from the camera that is activated by a person interacting with the object in that specific area. The object can range from a small handheld object like a Rubik’s Cube to a large sculpture at a playground. We think it will be very interesting to see the changes in face and body expressions as a person gets more (or less) comfortable with the object. It might be more interesting to hide these cameras so that users will be less conscious of their expressions because they do not realize they are being filmed. That will be more difficult to do with a large public sculpture, but we can prototype a handheld object in which we design specific places for the cameras so that they cannot be seen.

4) Body attachment

When we see the world, we see it from our eyes. Why not view the world from our feet? Perceptions can change with a simple change in vantage point. We propose to place cameras on key body locations (feet, elbows, knees and hands) in order to view the world from a new vantage point as we interact with our surroundings. The cameras would be attached with elastic, and the wires routed to a backpack for processing.

Group members:

- Mauricio Contreras

- Spencer Barton

- Patra Virasathienpornkul

- Sean Lee

- JaeWook Lee

- David Lu

Group Project: Multi-Channel Sound System (part 1)

- A mobile disk that can be worn (as a hat, etc) – “Ambiance Capture Headset/Scenes from a Memory”

Every day, we move from place to place and spend our time as our motivations drive us. Home, Road/Car, Office/School, Library, Park, Cafeteria, Bar, Nightclub, Friend’s place, Quiet Night: we all experience a different ambiance around us, and a change of environment is usually a good thing. It may soothe us, or trigger a certain personal mode (like a work mode, a social mode or a party mode). What if we could capture this ambiance in a ‘personalized’ way and recreate it around us whenever we want? Introducing the Ambiance Capture Headset. This headset has a microphone array around it and records all the audio around you; it may catalog this audio using GPS data. You come home, connect the headset to your laptop and an 8-speaker circle, and after the audio is processed (extracting ambiance only, using differences in amplitude, correlation in time, etc.), the system lets you choose the ambiance you want. You can quickly recall your day by sweeping through and re-experiencing where you’ve been.

- Head-sized disk with speakers positioned evenly around the disk. Facing inward – “Circle of Confusion”

In this section, our ideas tend to fall in two categories, either using the setup to confuse a listener’s perception of the world around them, or to enhance it in some way. In the first scenario, one idea is to amplify sounds that are occurring at 180 degrees from the speaker, in other words, experiencing a sonic environment that is essentially reversed from reality.
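The reversed sonic environment comes down to a simple routing rule: each sound is played from the speaker directly opposite the direction it came from. The Python sketch below assumes one microphone per speaker position, evenly spaced around the disk, which is our own simplification.

```python
def opposite_speaker(index, count=8):
    """Return the speaker 180 degrees across the circle from `index`."""
    return (index + count // 2) % count


def reverse_field(levels):
    """Route each input level to the opposite position in the circle.

    levels[i] is the level picked up at position i; the result is the level
    each speaker should play. Assumes an even number of positions.
    """
    count = len(levels)
    output = [0.0] * count
    for index, level in enumerate(levels):
        output[opposite_speaker(index, count)] = level
    return output
```

For eight positions, a sound arriving at position 0 (in front) would be played from position 4 (behind).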

- Speakers hanging from the ceiling in arbitrary shapes – “EARS”

Sometimes you just need someone to listen to you, like a few ears to hear you out. A secret, a desire, an idea, a confession. This is a setup that connects with people and lets them express what they want. It’s a room you walk into that has speakers suspended from the ceiling. You raise your hand towards one, and when that speaker senses you coming near, it descends to your mouth level so you can talk or whisper into it (speakers can act as microphones as well, or we may attach a mic to each). You may tell different things to the different speakers, and once you’ve said all you want, you hear what the speakers have heard before. What you hear is chosen by the current position of the speakers, as all speakers start to descend if you try to touch them. All voices are coded, for example through a vocoder, to protect people’s identities. After hearing some more wishes, problems, inspirations and hopes, you probably feel lighter than you did before.

- A 3D setup (perhaps a globe-shaped setup) – “World Cut”

You enter an 8-speaker circle with a globe in front of you. You spin the globe and input a particular planar intersection of the world; this plane ‘cuts’ through a number of countries and locations. Each intersected location maps to a corresponding direction in our circle, so you hear music, voices and languages from the whole ‘cut’ at once in our 8-speaker circle. You can spin the globe and explore the world in the most peculiar of ways.
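One possible way to map an intersected location to a direction in the circle is to take its compass bearing around the cut and pick the nearest speaker. The Python sketch below assumes speaker 0 sits at bearing 0 degrees and the rest are evenly spaced clockwise; how the bearing is derived from the globe is left open.

```python
def bearing_to_speaker(bearing_degrees, count=8):
    """Return the index of the speaker nearest to a bearing in degrees.

    Speaker 0 is assumed to sit at 0 degrees, with the others evenly spaced.
    """
    spacing = 360.0 / count
    # Shift by half a sector so each speaker owns the arc centered on it.
    return int((bearing_degrees % 360.0 + spacing / 2) // spacing) % count
```

With eight speakers each one covers a 45-degree arc, so a location at a bearing of 350 degrees wraps around to speaker 0.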

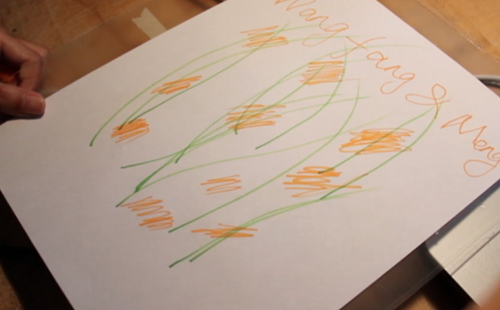

Assignment 2: “Musical Painting” by Wanfang & Meng (2013)

What:

This idea came from the translation between music and painting. When somebody draws a picture, the music changes at the same time. So it looks like you are drawing music :)

How:

We use a sensor to measure the light, read it as an analog input, and then transform it into sound. I think the musical painting is a translation from the visible to the invisible, from seeing to listening.
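To give a sense of the light-to-sound step, here is a small Python sketch that maps an analog reading to a pitch. It is an assumed mapping, not necessarily the one in our patch: the 0 to 1023 range matches a 10-bit analog input, and the note range (MIDI 48 to 84) is an arbitrary choice.

```python
def light_to_midi(reading, low_note=48, high_note=84, max_reading=1023):
    """Map an analog light reading (0..max_reading) to a MIDI note number."""
    clamped = min(max(reading, 0), max_reading)
    return low_note + round(clamped / max_reading * (high_note - low_note))


def midi_to_hz(note):
    """Convert a MIDI note number to frequency, with A4 (note 69) at 440 Hz."""
    return 440.0 * 2 ** ((note - 69) / 12)
```

A brighter part of the drawing surface would then produce a higher note, and a darker part a lower one.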

Why:

It is fun to break the rules between different senses. On the question of the differences between noise, sound and music, I personally think that sound is what people normally hear, noise is what may disturb people, and music is sound beyond people’s expectations. So people’s ideas will change with the environment, and we will create more suitable music.

Assignment 2: “Comfort Noise” by Haochuan Liu & Ziyun Peng (2013)

Idea

People who don’t usually pay attention to noise often dismiss it as disturbing sound, overlooking the musical part of it – the rhythm, the melody and the harmonics. We hear it, and we want to translate and amplify the beauty of noise for people who haven’t noticed it.

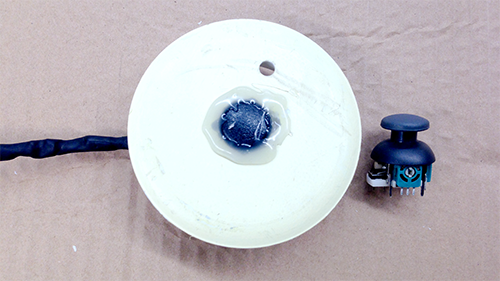

Why pillow?

The pillow is a metaphor for comfort – this is what we want people to perceive when hearing noise through our instrument, contrary to the impression noise has usually left on people.

When you place your head on a pillow, it’s almost like you’re in a semi-isolated space – your head is surrounded by the cotton, the visual signals are largely reduced since you’re now looking upwards and there’s not that much happening in the air. We believe by minimizing the visual content, one’s hearing would become more sensitive.

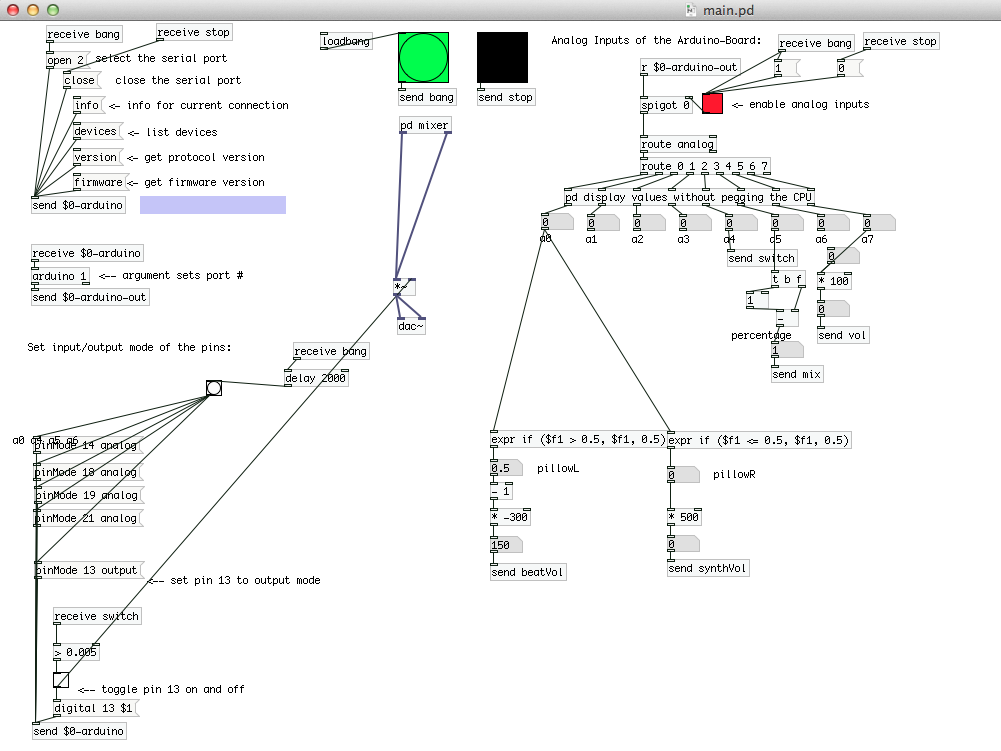

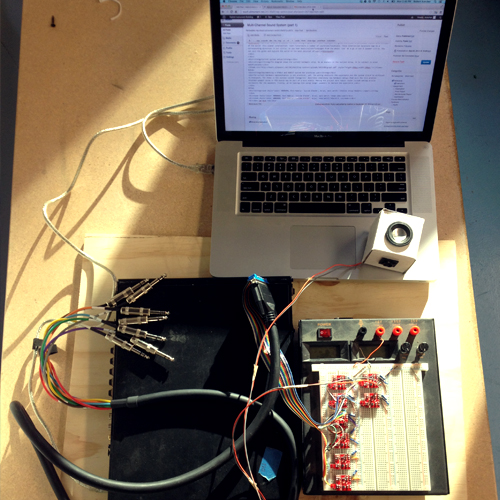

Make

We use computational tools (Pure Data & SPEAR) and our musical ears to extract the musical information in the noise, then map it to musical sounds (drum and synth) that people are familiar with.

Pduino (for reading the Arduino in Pd) and PonyoMixer (a multi-channel mixer) helped us a lot.

Inside the pillow, there’s a PS2 joystick used to track the user’s head motions. It’s a five-direction joystick, but in this project we’re only using left and right. We had a lot of fun making this.

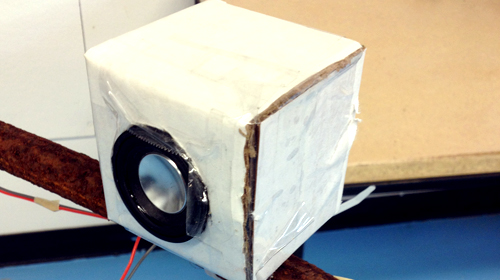

Here’s the mix box we made for users to adjust the volume and the balance of the pure noise and the musical noise extraction sounds.
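For illustration, the two controls on the mix box can be modeled as a crossfade followed by a gain. The Python sketch below uses an equal-power crossfade, which is a common choice but an assumption on our part here; the actual mixer may blend the two signals differently. Both pot values are assumed to be normalized to the range 0 to 1.

```python
import math


def mix(noise, musical, balance, volume):
    """Blend one sample of pure noise with one sample of the musical extraction.

    balance: 0.0 is all noise, 1.0 is all musical sound (equal-power curve).
    volume: overall gain applied after the blend.
    """
    angle = balance * math.pi / 2
    blended = math.cos(angle) * noise + math.sin(angle) * musical
    return volume * blended
```

The equal-power curve keeps the perceived loudness roughly steady as the balance pot is turned, which a straight linear blend does not.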

The detailed technical setup is listed below:

Raspberry Pi – running Pure Data

Pure Data – reading sensor values from Arduino and sending controls to sounds

Arduino Nano – connected to sensors and Raspberry Pi

Joystick – track head motion

Pots – Mix and Volume control

Switch – ON/OFF

LED – ON/OFF indicator

Assignment 2: “Drip Hack” by David Lu (2013)

Drip Hack is a hack that drips. Inspired by my past experiences with cobbling together random pieces of cardboard, this piece aims to explore our childlike curiosity about water dripping in the kitchen sink. The rate of dripping can be controlled by the pair of valves on top, and the water drops hit the metal bowl and plastic container below. The resulting vibrations are picked up by piezo-electric mics, which are amplified by ArtFab’s pre-amp. Since there are two drippers, interesting cross rhythms can be generated.
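To see how two drippers produce a cross rhythm, here is a small Python sketch that merges two steady drip streams into one timeline. Real drips are not this regular, and the periods of 300 ms and 200 ms are made-up values chosen to give a two-against-three pattern.

```python
def drip_schedule(period_a_ms, period_b_ms, duration_ms):
    """Merge two steady drip streams into one time-ordered list of hits.

    Each entry is (time_ms, label), where label "A" or "B" names the dripper.
    """
    hits = [(t, "A") for t in range(0, duration_ms, period_a_ms)]
    hits += [(t, "B") for t in range(0, duration_ms, period_b_ms)]
    return sorted(hits)


# Dripper A every 300 ms against dripper B every 200 ms: 2 against 3.
pattern = drip_schedule(300, 200, 600)
```

The two streams line up only every 600 ms, so opening or closing either valve slightly shifts where the hits fall against each other and changes the rhythm.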