Overview

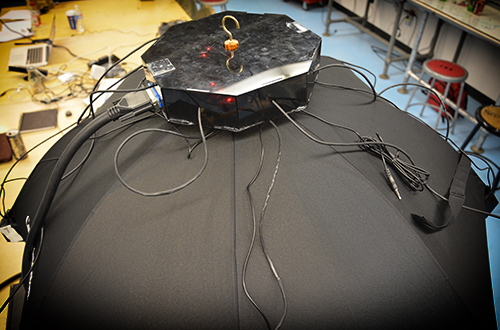

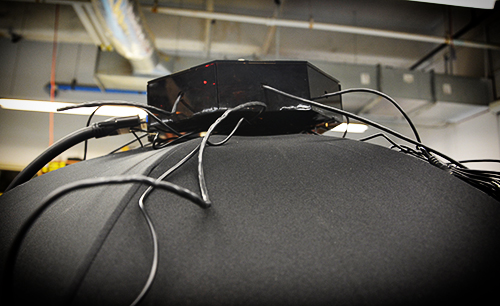

Eight video cameras present eight different views into a dynamic world. They can be oriented in a number of ways, for example facing inward toward a subject or outward from it.

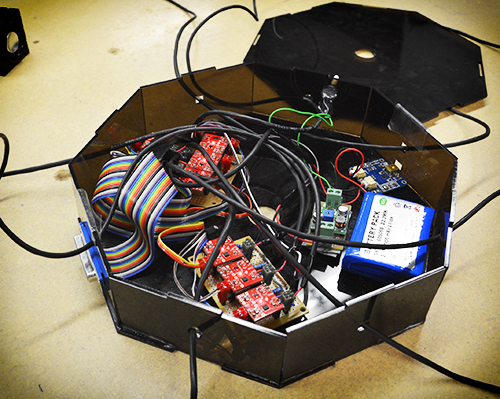

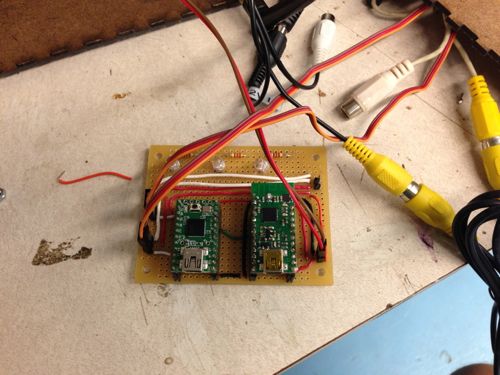

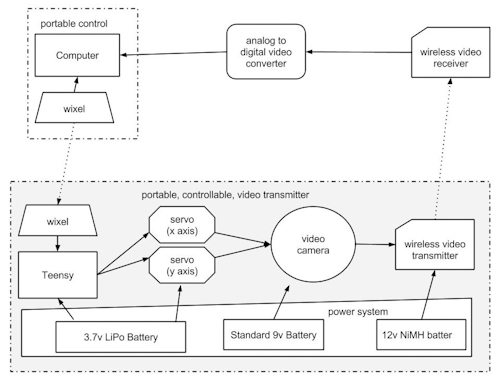

Our basic setup is a box with the eight cameras arranged along the top. Cables run from the cameras to the base of the box, where they connect to the power sources and a video multiplexer (mux). The base also contains an Arduino, which controls the mux and also supplies its power. A basic diagram of this setup can be seen below (it matches our “Fat Shark” application, described in this post, but can also be generalized).

Hardware Details

Cameras

These are generic analog mini cameras that you can buy on the internet or steal from the artfab lab. They run on 9-12 V and come with a three-conductor cable: V+, GND and video.

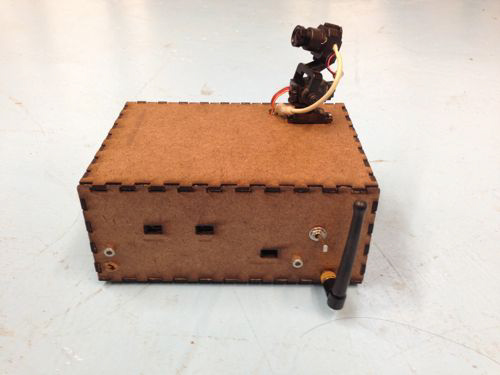

Camera boxes

The camera boxes were constructed out of MDF. There is nothing special about the design, except that each box has a hole for the camera to poke out of and another for the cord to come in. A good place to create a box design is boxmaker.rahulbotics.com/.

Our camera cords are secured inside by foam padding and a zip-tie.

Open-beam structure

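The cameras are mounted on a frame built from OpenBeam, an aluminum extrusion construction system that is rigid enough for the whole rig to be moved around: openbeamusa.com/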

Cords

Each camera has a power input and a video output. We used three 1-to-4 power splitters to distribute power from a single 9 V source to the eight cameras and other components. Each video output terminates in an RCA connector that plugs into the video mux.
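As a quick check on the splitter count: any splitter that is plugged into another splitter uses up one of that splitter's outputs, so three 1-to-4 splitters fed from one source leave 3 × 4 − 2 = 10 free outputs however they are chained. The sketch below only does that arithmetic. The function name is made up for illustration, and the exact wiring of the splitters is not described in this post.

```python
def free_outputs(num_splitters: int, ways: int = 4) -> int:
    """Free outputs left when identical 1-to-N splitters hang off one source.

    One splitter plugs into the source; every other splitter occupies one
    output of a splitter upstream of it.
    """
    if num_splitters < 1:
        return 1  # no splitter: only the source's own single output
    total_outputs = num_splitters * ways
    used_for_chaining = num_splitters - 1
    return total_outputs - used_for_chaining


cameras = 8
spare = free_outputs(3) - cameras
print(f"free outputs: {free_outputs(3)}, left after the cameras: {spare}")
```

With eight cameras connected, two outputs remain for the other components.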

Mux

The mux has eight analog video inputs, a selector input, an enable input, a power input, and one analog video output. This board is a collaboration between Ray Kampmeier and Ali Momeni; more information can be found at www.raykampmeier.net
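To give a feel for what driving the selector input involves, here is a minimal sketch. It assumes the selector is three binary lines (called S0 to S2 here), which is the usual arrangement for choosing one of eight inputs. The actual pinout of this board is not documented in this post, so treat the names and the bit order as assumptions.

```python
def select_levels(channel: int, num_bits: int = 3) -> tuple:
    """Return the logic levels for the select lines, least significant bit first.

    Assumption: the mux picks its input from a plain binary number on
    num_bits select lines (S0, S1, S2 for eight channels).
    """
    if not 0 <= channel < 2 ** num_bits:
        raise ValueError(f"channel must be in 0..{2 ** num_bits - 1}")
    return tuple((channel >> bit) & 1 for bit in range(num_bits))


# Print the full truth table for the eight camera channels.
for channel in range(8):
    print(channel, select_levels(channel))
```

On the Arduino, each of these levels would be written to one digital output pin wired to the matching select line.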

Arduino

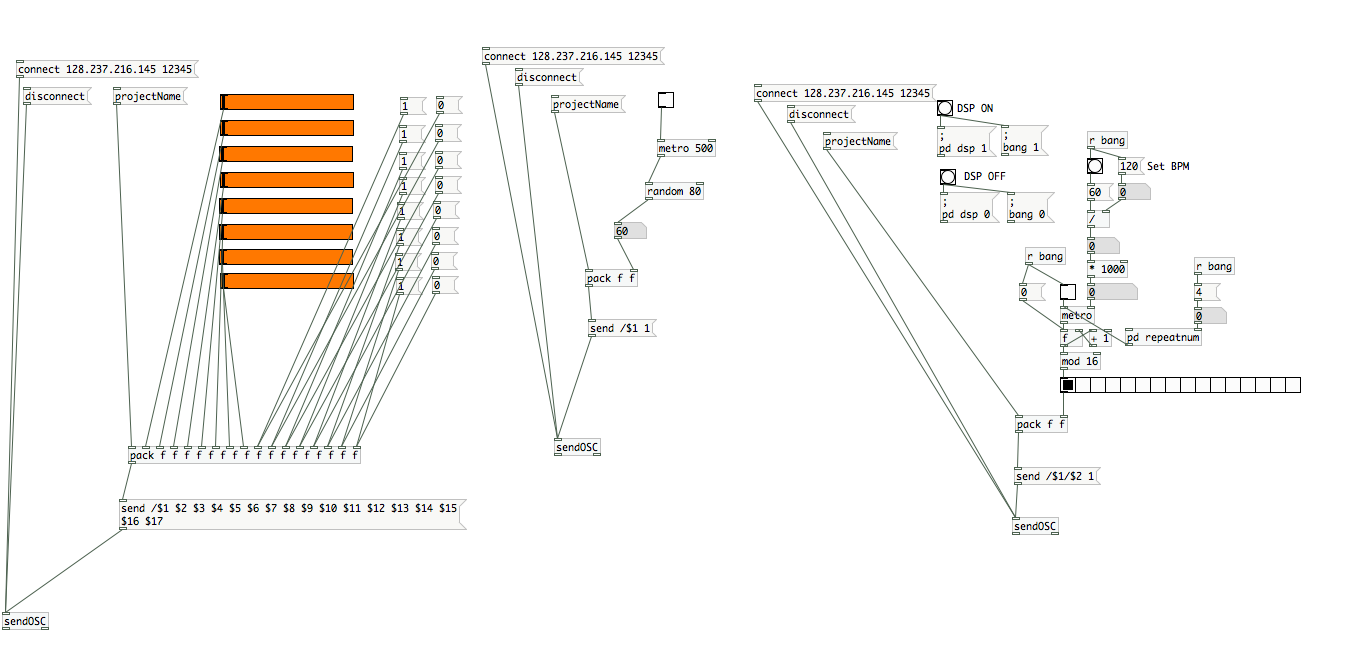

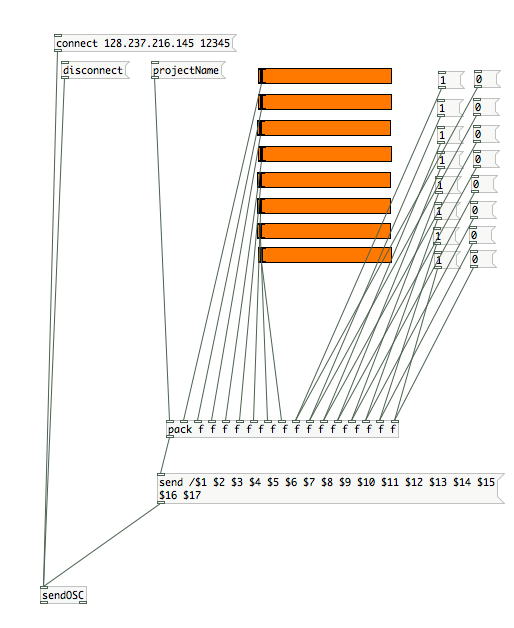

The Arduino drives the mux selector, either by running a program that switches channels on its own or by following an external controller, e.g. a computer or a phone sending OSC. Any Arduino will do.
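For the external-controller case, the sketch below shows what a channel-select OSC message looks like on the wire, using only the Python standard library. The address `/mux/channel` and the single integer argument are hypothetical choices for illustration. A real controller may use a different address or send a float, and how the packet reaches the Arduino is not covered here.

```python
import struct


def osc_pad(data: bytes) -> bytes:
    """Null-terminate and pad to a multiple of 4 bytes, as OSC strings require."""
    data += b"\x00"
    return data + b"\x00" * (-len(data) % 4)


def osc_int_message(address: str, value: int) -> bytes:
    """Encode an OSC message carrying one 32-bit big-endian integer."""
    return osc_pad(address.encode("ascii")) + osc_pad(b",i") + struct.pack(">i", value)


def read_osc_string(packet: bytes, offset: int):
    """Read one padded OSC string; return it and the offset of the next field."""
    end = packet.index(b"\x00", offset)
    text = packet[offset:end].decode("ascii")
    return text, (end + 4) & ~3  # skip the terminator and any padding


def parse_osc_int_message(packet: bytes):
    """Decode a message produced by osc_int_message."""
    address, offset = read_osc_string(packet, 0)
    type_tags, offset = read_osc_string(packet, offset)
    if type_tags != ",i":
        raise ValueError(f"unsupported type tags: {type_tags!r}")
    (value,) = struct.unpack_from(">i", packet, offset)
    return address, value


# Hypothetical address: ask the mux controller to show camera 3.
packet = osc_int_message("/mux/channel", 3)
print(packet)
print(parse_osc_int_message(packet))
```

The receiving side only needs the decoded integer, which it can then turn into selector levels for the mux.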

Immersion RC

The mux's video output can be routed to an ImmersionRC wireless video transmitter, which takes a video input and power. Their website is: www.immersionrc.com

Fat Shark

Fat Shark goggles have screens inside that display the received video: www.fatshark.com

Code

All our code and documentation is on GitHub: github.com/sbarton272/VideoMux.

Looking Outwards: Fat Shark

As shown in the diagram, the video multiplexer is connected to the ImmersionRC transmitter, which sends a radio signal to the Fat Shark goggles, where the single output video appears. Fat Shark is a very interesting device because it lets you see video and images from viewpoints you could not normally look from. As of now, the cameras are fixed in place on the OpenBeam structure. The structure is robust enough to be moved around, so the system can be set up in a wide range of locations.

Looking Inwards

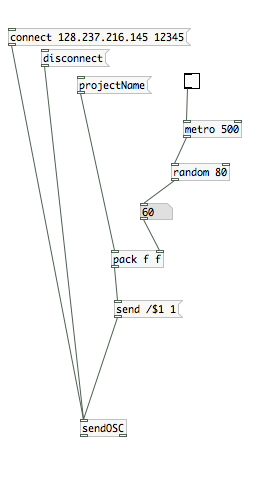

The camera setup also allows us to rotate the cameras inwards. For this application, we place an object in the middle and use the eight cameras to view it from different angles. To hold the object in place, we cut out a square piece of masonite that rests on four screws and can be moved up and down depending on the size of the object. As in the Looking Outwards application, the active camera is selected from a phone through TouchOSC.

Third Application

We took the outward-facing camera setup and did a few shoots using our new compass controller. Here are the results:

Experiment 1:

Experiment 2:
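One way a compass controller could pick a camera is to split the full circle into eight 45° sectors, one per camera. The sketch below assumes the controller reports a heading in degrees, that camera 0 points at heading 0°, and that the cameras are numbered clockwise. None of these details are given in this post, and the function name is made up for illustration.

```python
def heading_to_channel(heading_deg: float, num_cameras: int = 8) -> int:
    """Map a compass heading to the camera whose direction is closest.

    Assumptions: cameras are evenly spaced, camera 0 faces 0 degrees,
    and channel numbers increase clockwise.
    """
    sector = 360.0 / num_cameras
    # Shift by half a sector so each camera owns the headings centred on it.
    shifted = (heading_deg % 360.0) + sector / 2
    return int(shifted // sector) % num_cameras


for heading in (0, 44, 90, 180, 350):
    print(heading, "->", heading_to_channel(heading))
```

Headings just below 360° wrap back to camera 0, so turning through north does not cause a jump to a far-away camera.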

Fourth Application: An Image Capture System for 8 Cameras with Different Angles

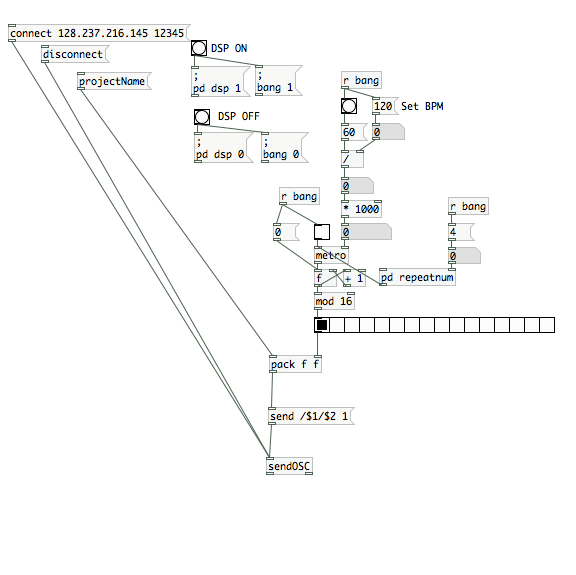

The aim of this project is to build an image capture system for the eight cameras in our camera box. The capture system combines real-time processing software (Pure Data) with an open-source hardware platform (Arduino). The function is simple: first, we connect the video multiplexer to the Arduino so that we can choose which camera, and therefore which angle, to use. A Pure Data patch for this combination (Arduino and video multiplexer) then provides a GUI for choosing the camera, capturing an image, and saving it as a file on the PC. These images can then be used to make a 3D scan or a multi-view photo such as a panorama.
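The capture sequence itself is short enough to sketch. The version below is written in Python rather than Pure Data, so it is not our patch. `select_camera` and `grab_frame` are hypothetical hooks standing in for "switch the mux" and "grab one encoded image from the video input", and the file naming is an assumption.

```python
import tempfile
from pathlib import Path
from typing import Callable, List


def capture_all(select_camera: Callable[[int], None],
                grab_frame: Callable[[], bytes],
                out_dir: Path,
                num_cameras: int = 8) -> List[Path]:
    """Switch to each camera in turn, grab one image, and save it to out_dir."""
    out_dir.mkdir(parents=True, exist_ok=True)
    saved = []
    for channel in range(num_cameras):
        select_camera(channel)   # hypothetical hook: tell the Arduino to switch the mux
        frame = grab_frame()     # hypothetical hook: one already-encoded image
        path = out_dir / f"camera_{channel}.jpg"
        path.write_bytes(frame)
        saved.append(path)
    return saved


if __name__ == "__main__":
    # Stand-ins so the sketch runs without any hardware attached.
    with tempfile.TemporaryDirectory() as tmp:
        files = capture_all(lambda ch: None, lambda: b"not a real image", Path(tmp))
        print([f.name for f in files])
```

A real rig would probably need a short pause after each switch so the video signal can settle before the frame is grabbed.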

Group members

- Mauricio Contreras

- Spencer Barton

- Patra Virasathienpornkul

- Sean Lee

- JaeWook Lee

- David Lu