Final Project Milestone 3: Jake Marsico

Sequencing Software

The past two weeks were dedicated to building out the dynamic video sequencing software. As a primary goal of this project was to create seamless playback across many unique video clips, building a system with low-latency switching was key. Another goal of the project was to build reactive logic into the video sequencing.

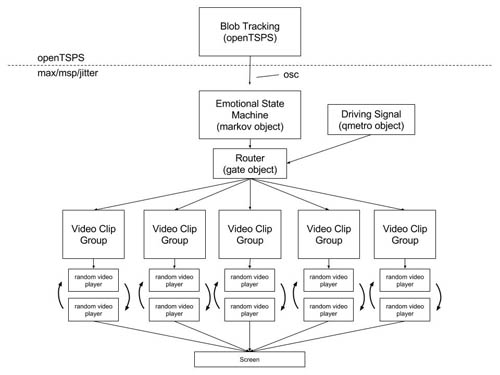

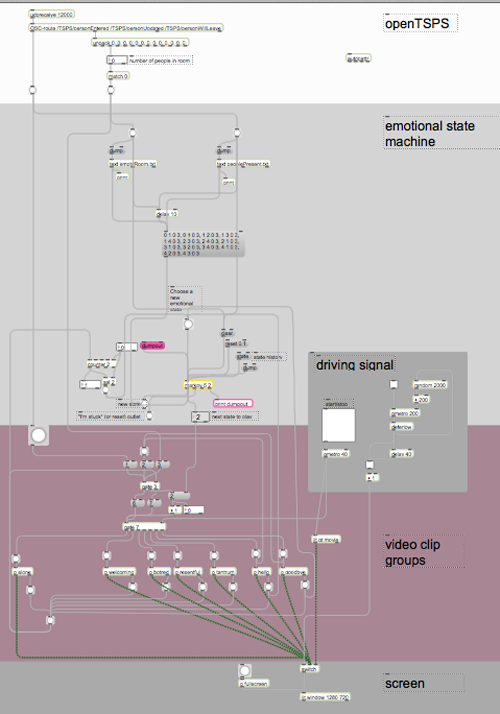

I will explain how I addressed both of these challenges later when I explain the individual components. Below is a high-level diagram of the software suite.

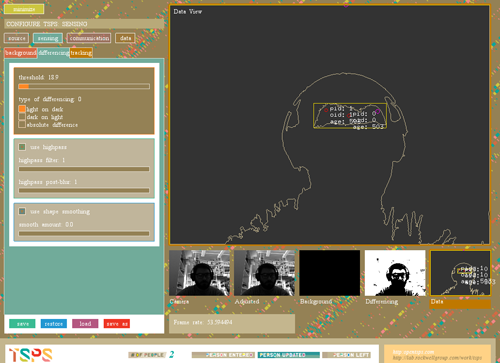

At the top of the diagram is openTSPS, an open source blob tracking tool built with openFrameworks by Rockwell Labs. openTSPS picks up webcam or kinect video, analyzes it with openCV and sends OSC packets that contain blob coordinates and other relevant events. For this project, we are only concerned with ‘personEntered’ events and knowing when the room is empty.

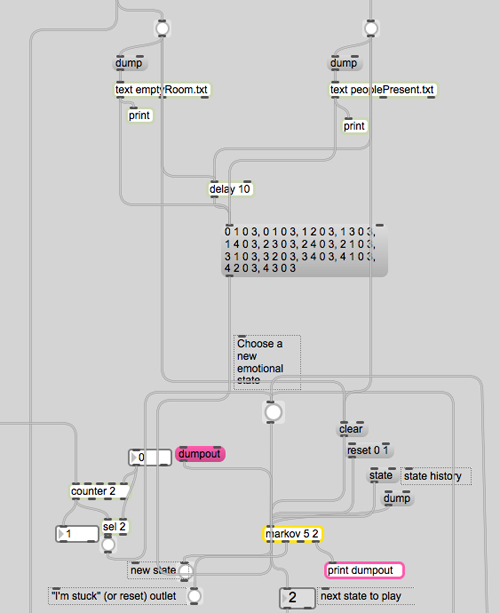

Blow openTSPS in the above diagram is the max/msp/jitter program, which starts with a probabilistic state machine that is used to choose which group of videos will play next. The state machine relies on Nao Tokui’s markov object, which uses a markov chain to add probability weights to each possible state change. This patch loads two different state change tables: one for when people are present and one for when the room is empty. This relates to a key idea of the project: that people act very differently when they are alone or around others.

state transition with markov object

state transition with markov object

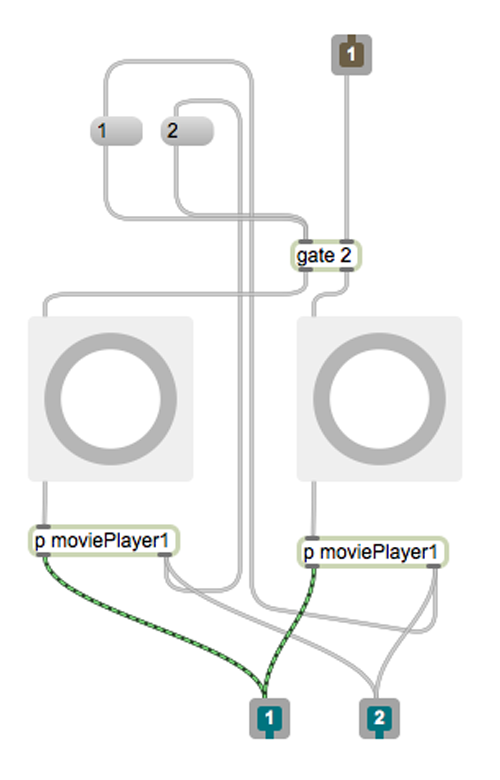

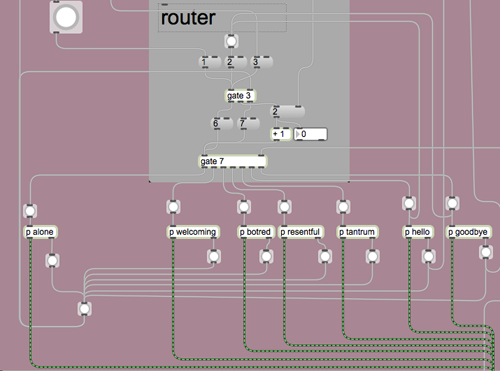

The markov state transition machine is connected to a router that passes a metro signal to the different video groups. The metro is called the ‘Driving Signal’ in the top diagram. The video playback section of the patch relies on a constantly running metro that is routed to one of seven double-buffered playback modules, which are labeled as emotional states according to the groups of video clips they contain.

router with video group modules

router with video group modules

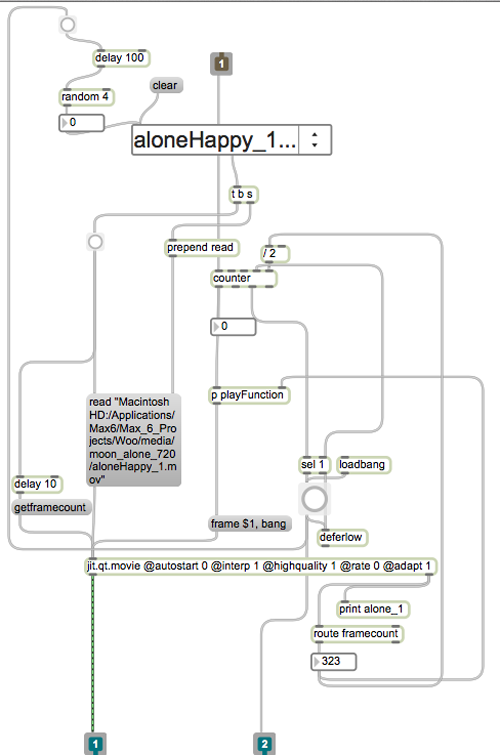

Each video group module contains two playback modules. The video group module controls which of the two playback modules is running.

While one playback module is playing, the other one loads a new, random video from a list of videos.

Here is a shot of the entire patch, from top to bottom:

Challenges

The software works well on its own. Once connected to openTSPS’s OSC stream, it starts to act up. The patch is set up to bypass the state transition machine on two occasions: when a new person enters the room and when the last person leaves the room. To do this properly, the patch needs correct data from openTSPS. In its current location, openTSPS (paired with a PS3eye) is difficult to calibrate, resulting in false events being sent the application. One option is to build a filter at the top of the patch that only allows a certain number of entrances or ’empties’ within a given time period. Another option is to find a location which has more controllable and consistent light.

Another challenge is that the footage was shot before the completion of the software. As a result, much of the footage seems incomplete and without emotion. To make the program more responsive to visitors, the clips need to be shorter (more like ~2 seconds each instead of ~9, which is the average for this batch).