Final Project Milestone One: Jake Berntsen

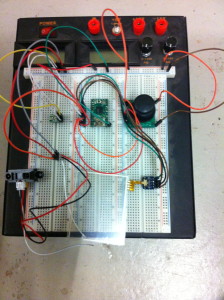

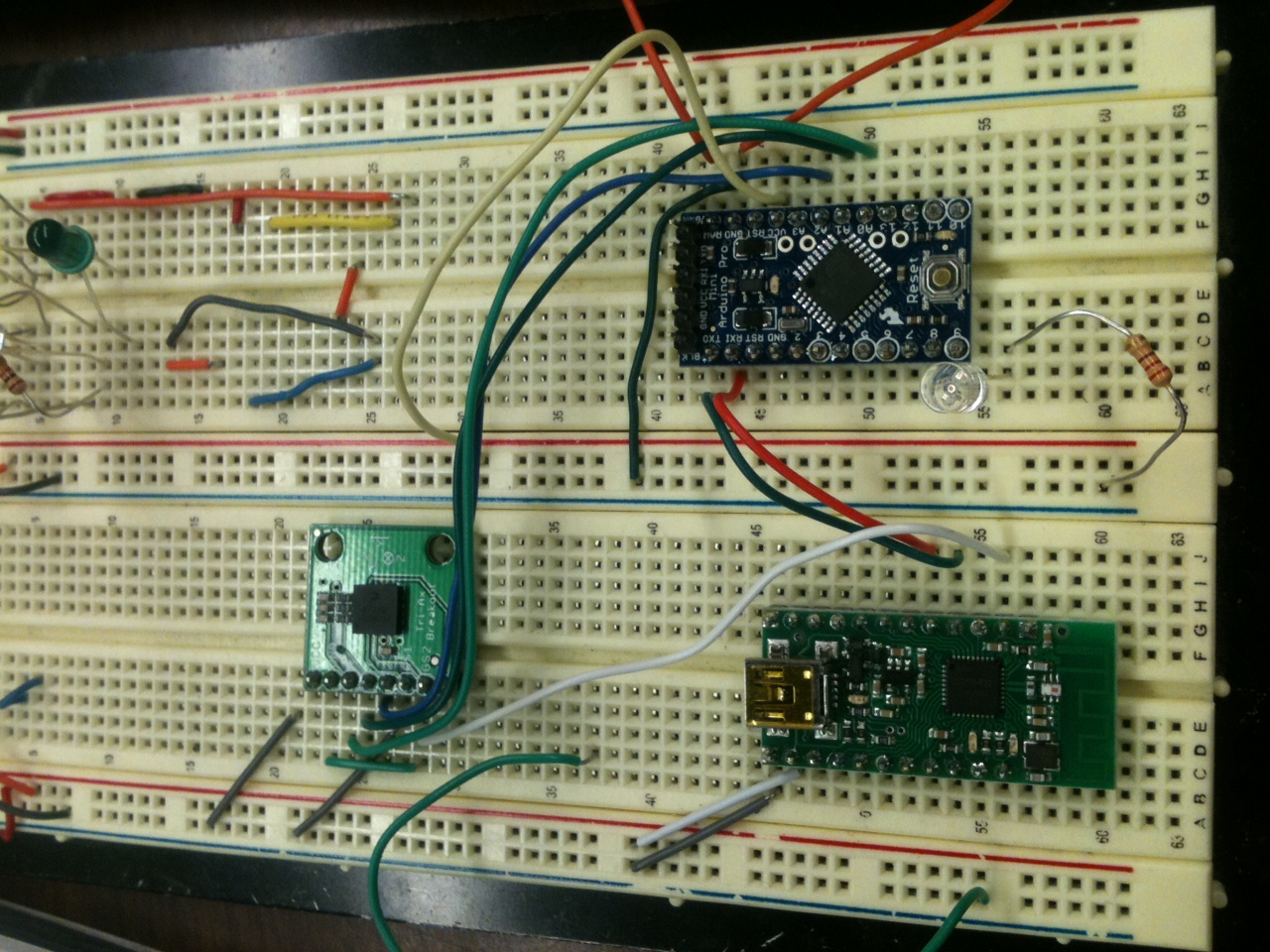

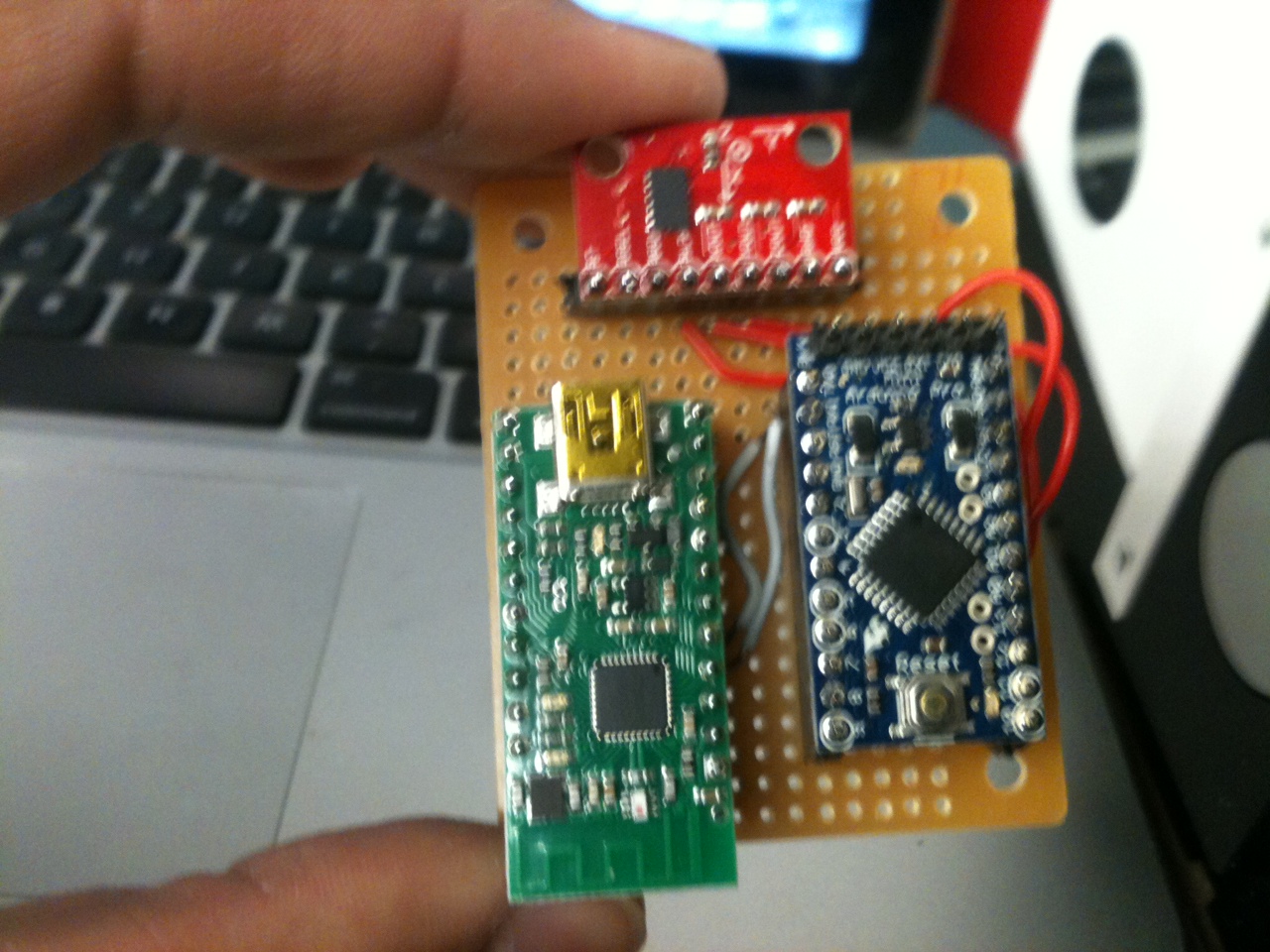

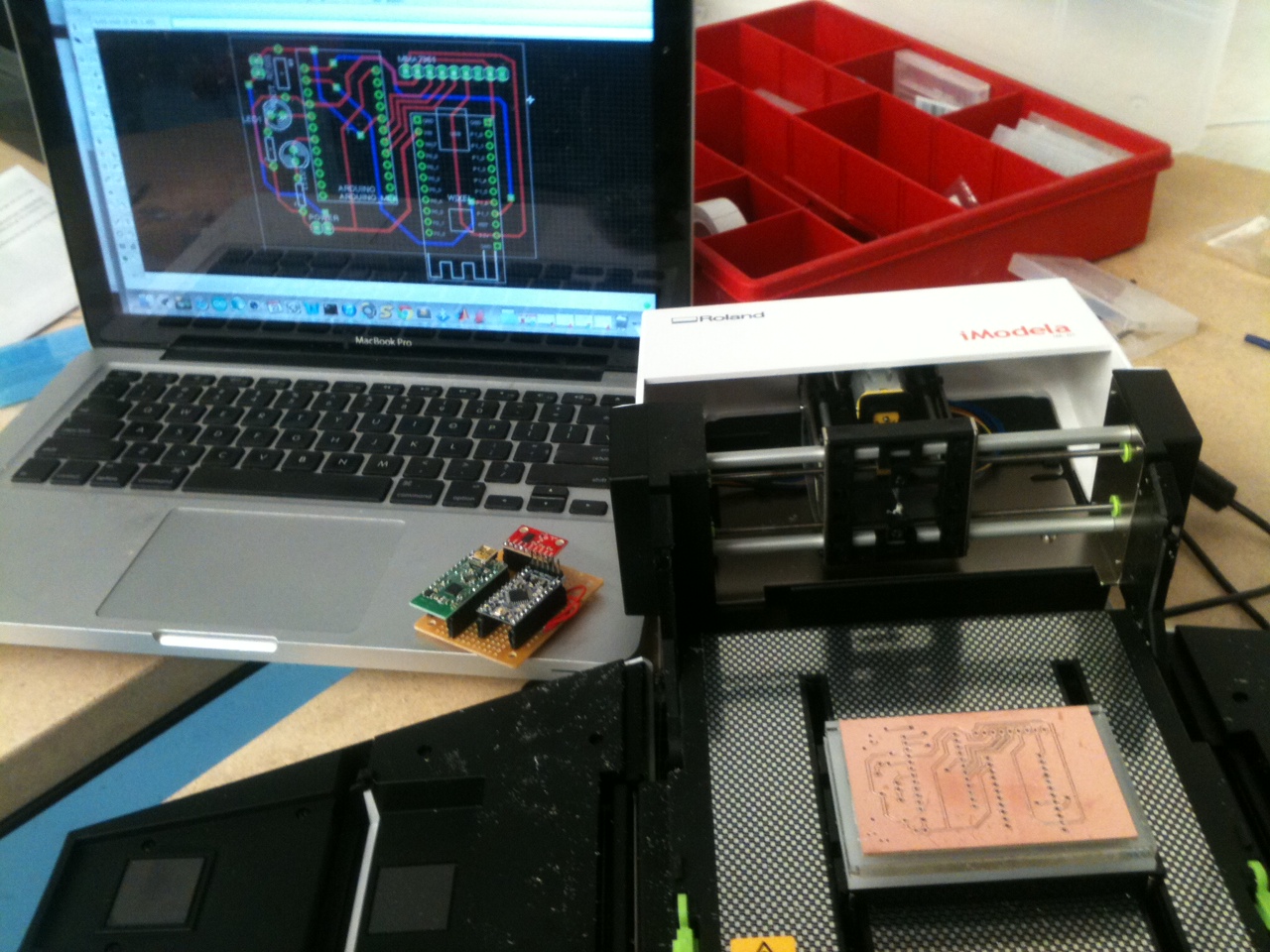

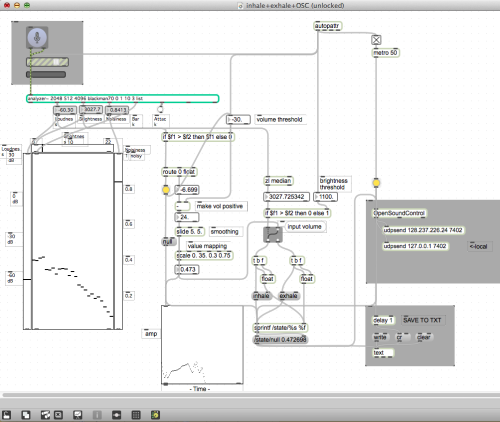

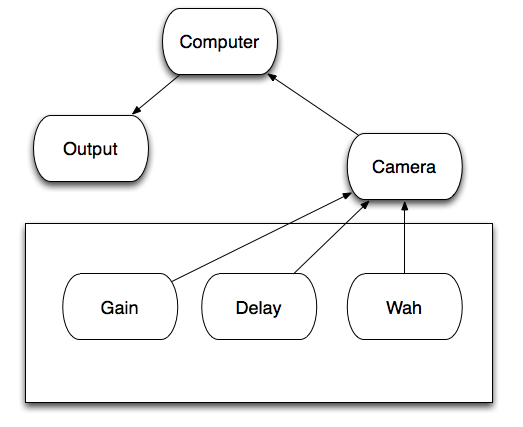

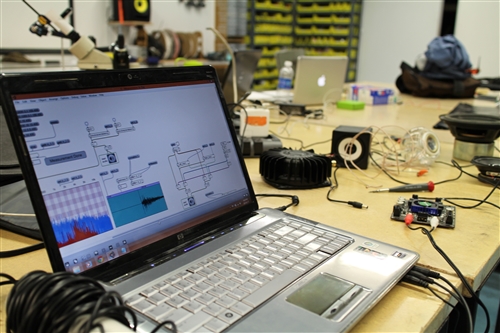

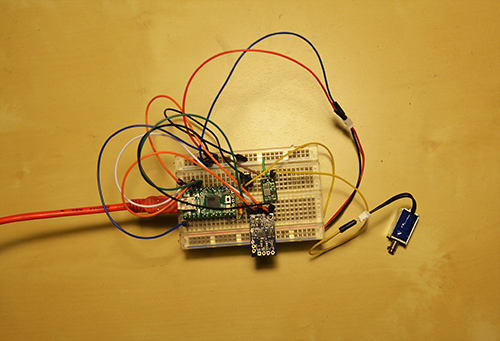

My struggles so far in this class have been almost entirely on the physical side. I'm fairly comfortable with the relevant software, but my ability to actually build devices is much less developed, to say the least. With this in mind, I decided to focus my early final-project milestones entirely on the most technically challenging electrical engineering tasks. For my project, this meant getting all of the analog sensors feeding into my Teensy so I could obtain data to manipulate in Max. Doing so took a lot of prototyping on a breadboard.
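As an illustration of the sensor-to-Max step, here is a minimal sketch (not the code used in this project) of one common approach: rescale each raw analog reading to a 0-127 range and send all readings as one space-separated line ending in a newline. It assumes the Teensy's Arduino-compatible 10-bit `analogRead` resolution and a Max patch that splits each incoming line into numbers (for example with `[fromsymbol]`). The helper names `scaleToMidi` and `formatFrame` are hypothetical, and the logic is written as plain C++ so it can be checked off the board.

```cpp
#include <cassert>
#include <cstddef>
#include <string>
#include <vector>

// Hypothetical helper: rescale a raw ADC reading to 0..127.
// Assumes a 10-bit ADC by default (raw values 0..1023); out-of-range input is clamped.
int scaleToMidi(int raw, int adcBits = 10) {
    assert(adcBits > 0 && adcBits < 16);
    const int maxRaw = (1 << adcBits) - 1;
    if (raw < 0) raw = 0;
    if (raw > maxRaw) raw = maxRaw;
    return raw * 127 / maxRaw;  // integer division rounds down
}

// Hypothetical helper: build one frame of sensor data as
// "v1 v2 v3...\n", which a Max patch can split on spaces.
std::string formatFrame(const std::vector<int>& rawReadings) {
    std::string line;
    for (std::size_t i = 0; i < rawReadings.size(); ++i) {
        if (i > 0) line += ' ';
        line += std::to_string(scaleToMidi(rawReadings[i]));
    }
    line += '\n';
    return line;
}
```

On the Teensy itself, the loop would read each sensor pin with `analogRead`, pass the values to `formatFrame`, and write the result with `Serial.print`. Sending one complete line per frame keeps every sensor's value in a fixed position, so the Max patch always knows which number belongs to which sensor.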

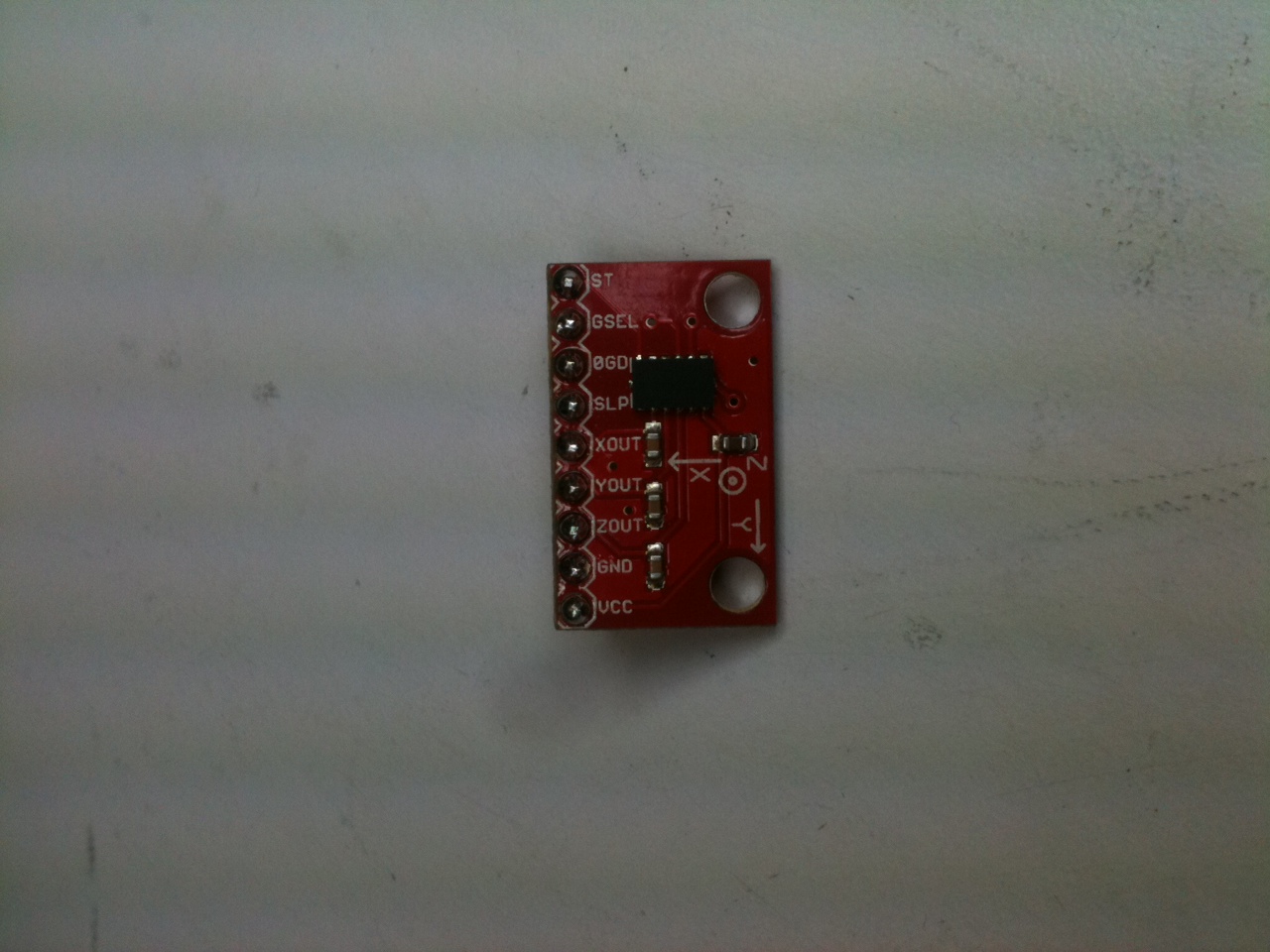

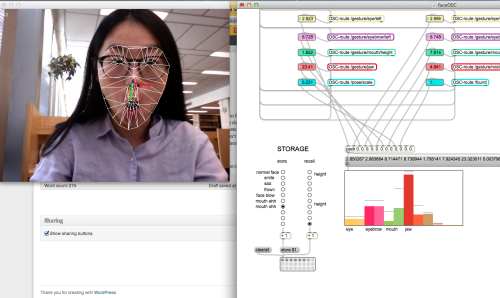

After exploring a wide variety of sensors, I found a few that seemed to give me precise control over the data Max receives. One of my goals for this project is to create a controller that offers more nuanced musical control than the current status quo, and I believe the key to this lies in more sensitive sensors.

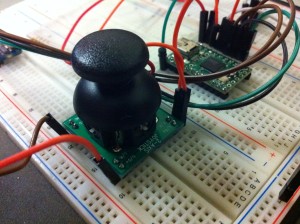

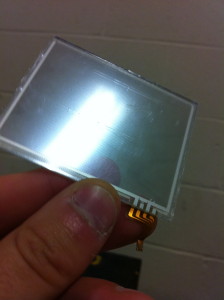

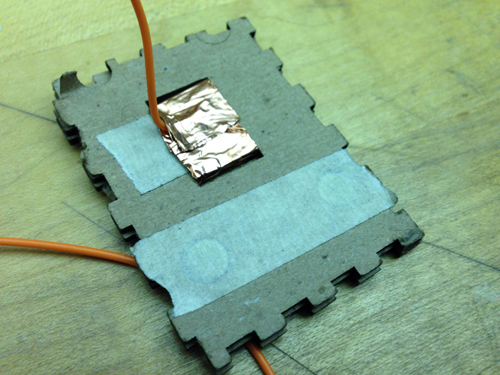

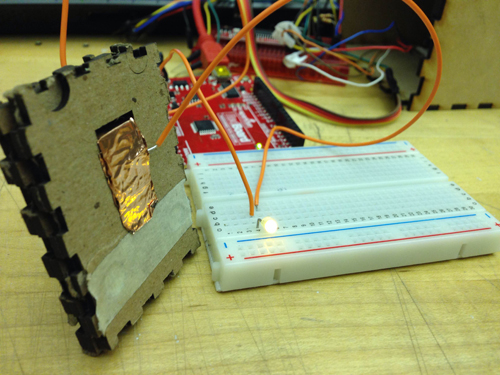

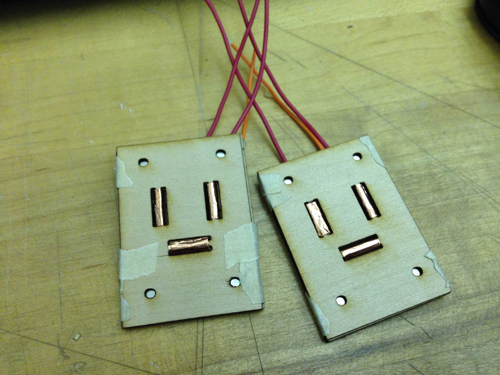

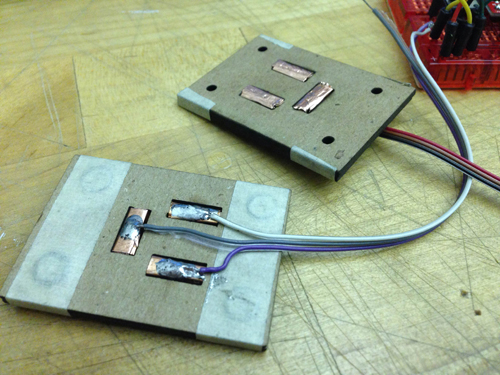

The sensors I chose are pictured: joysticks, distance sensors, light sensors, and a trackpad.

All of these sensors have proven dependable, with the exception of the trackpad, whose inputs I am still sorting out. The trackpad comes from a Nintendo DS, and while getting its data into the Arduino environment is relatively simple, I'm having trouble getting that data all the way into Max.
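One possible way to keep trackpad data separate from the other sensors on the way into Max is to prefix each trackpad message with a tag, so a `[route pad]` object can pick it out. The sketch below is hypothetical and assumes the raw X and Y readings have already been taken; it normalizes them with per-panel calibration bounds (the `Calibration` values are placeholder assumptions that would need to be measured on the actual DS panel) and treats readings outside those bounds as "no touch".

```cpp
#include <optional>
#include <string>

// Hypothetical per-panel calibration: raw readings seen at the panel's edges.
struct Calibration {
    int minX, maxX, minY, maxY;
};

// Map a raw value inside [lo, hi] onto 0..127 (integer division rounds down).
int normalize(int raw, int lo, int hi) {
    return (raw - lo) * 127 / (hi - lo);
}

// Returns "pad x y\n" for a valid touch, or std::nullopt when the readings
// fall outside the calibrated area (assumed here to mean "not touched").
std::optional<std::string> formatTouch(int rawX, int rawY, const Calibration& cal) {
    if (rawX < cal.minX || rawX > cal.maxX || rawY < cal.minY || rawY > cal.maxY) {
        return std::nullopt;
    }
    const int x = normalize(rawX, cal.minX, cal.maxX);
    const int y = normalize(rawY, cal.minY, cal.maxY);
    return "pad " + std::to_string(x) + " " + std::to_string(y) + "\n";
}
```

Skipping "no touch" frames entirely, rather than sending zeros, prevents the Max side from interpreting a lifted finger as a jump to the panel's corner.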

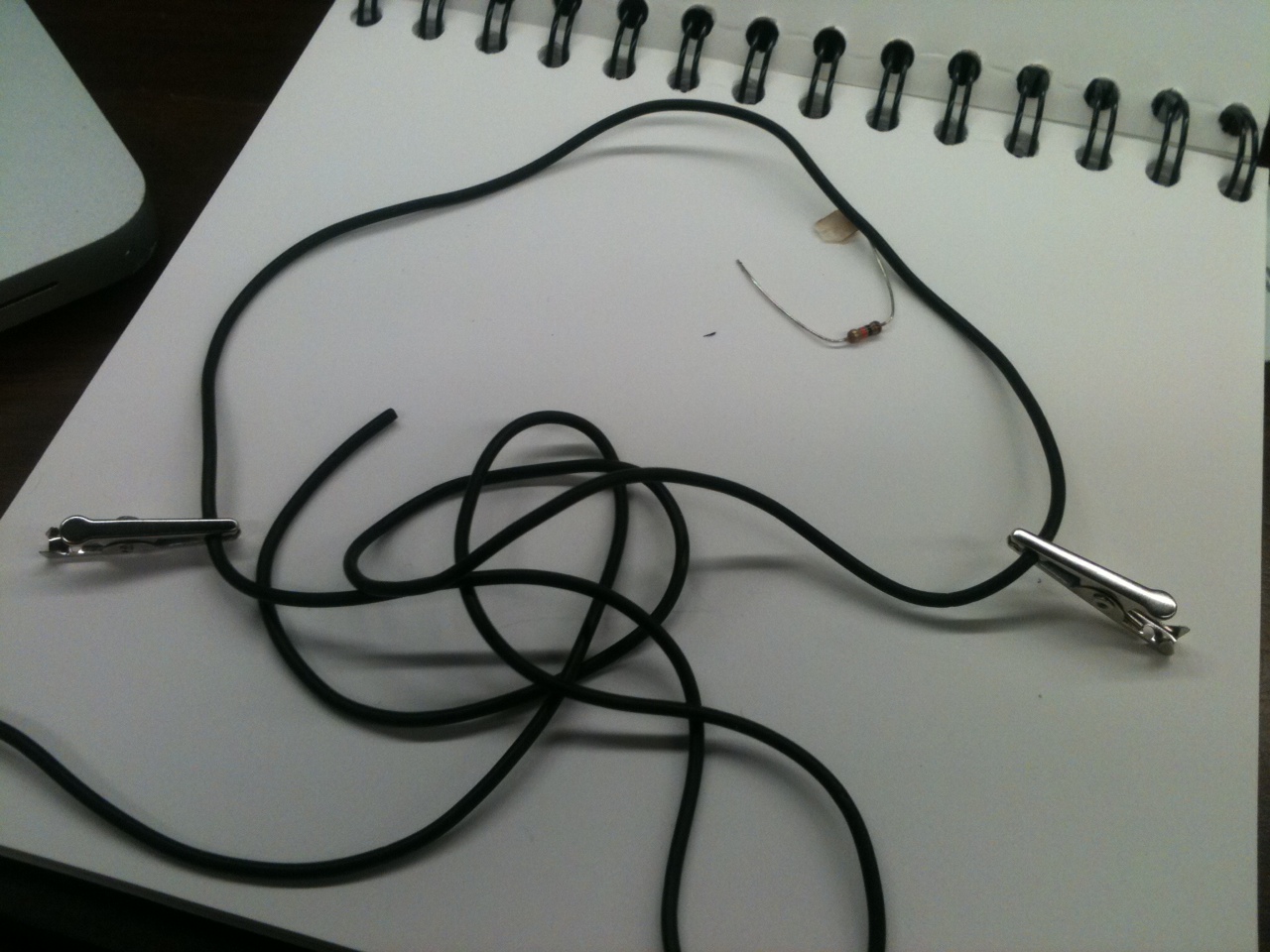

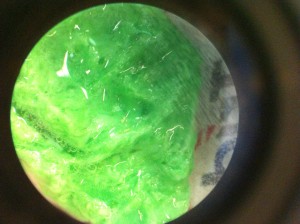

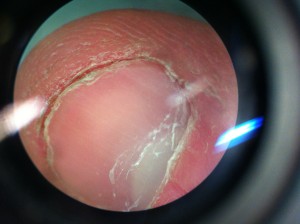

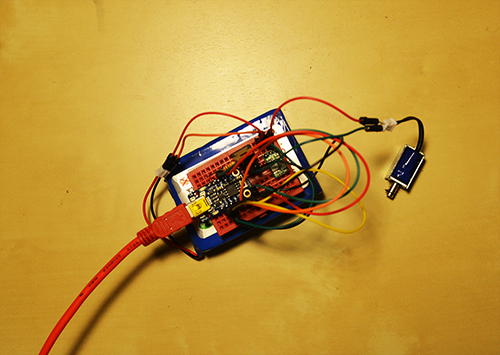

The other hardware challenge I faced this week was repairing the MPK Mini that I plan to incorporate into my controller. Its mini USB port, which the device needs in order to work, had come completely detached. Attaching a new port to the board is a relatively simple soldering job, and I completed it successfully despite my lack of soldering experience. However, connecting the five pins that carry the USB data is much more complicated, and after failing multiple times, I decided to practice soldering before continuing. I haven't succeeded yet, but I've improved greatly in just the past few days and feel confident that I will have the device working within the week.

If I continue working at this rate, I believe I will finish my project on schedule. I had expected to be limited to whichever sensors I could figure out how to use, but most of the ones I tried worked with ease, so I was able to truly choose the best ones.