Group Project: Wireless Data + Wireless Video System (part 2)

Overview

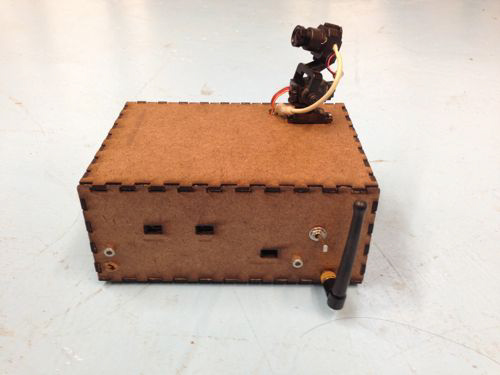

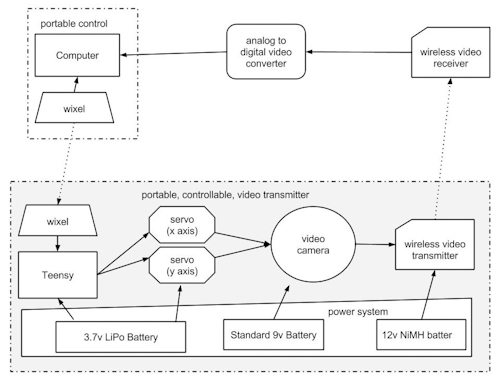

This project combines a Wixel wireless data system, servos, microcontrollers and wireless analog video in a small, custom-built box to provide wireless video with remote viewfinding control.

Hardware

Camera-Box:

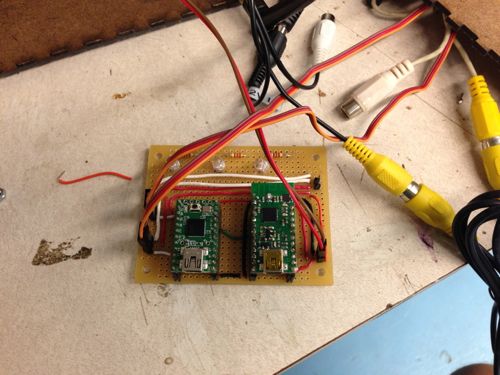

- Wixel wireless module

- Teensy 2.0 (code found HERE)

- Wireless video transmitter

- 3.3v servo (2x)

- FatShark analog video camera

- 12v NiMH battery

- 9v battery

- 3.7v LiPo battery

- Adafruit LiPo USB charger

Control Side:

- Alpha wireless video receiver

- Analog to Digital video converter (ImagingSource DFG firewire module)

- Wixel Wireless unit

- Max/MSP (patch found HERE)

System Diagram:

Tips and Gotchas:

1. Max/MSP Patch Setup:

- Connect your preferred video ADC to your computer

- Open the patch

- hit the “getvdevlist” message box, then select your ADC in the drop-down menu

- hit the “getinputlist” message box, select the correct input option (if there are multiple on your unit)

- if you see “NO SIGNALS” in the Max patch:

- double-check the cables… this is a common problem with older analog video

- verify that the camera and wireless transmitter are powered at the correct voltage

2. Power Choices:

- We ended up using three power sources within the box. This isn’t ideal, but we found that the power requirements of the major components (Teensy, Wixel, transmitter, camera) are somewhat particular. Also keep in mind that the video transmitter is the largest power consumer, at around 300mA.

Applications:

1. Face Detection and 2. Blob Tracking

Using the cv.jit suite of objects, we built a patch that pulls in the wireless video feed from the box and uses OpenCV’s face detection capabilities to identify people’s faces. The same patch also uses OpenCV’s background removal and blob tracking functions to follow blob movement in the video feed.

Future projects can use this capability to send movement data to the camera servos once a face is detected, either to center the person’s face in the frame, or to look away as if it were shy.
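As a rough sketch of how a detected face could drive the servos (this is not part of our patch; the function name, the 5-degree step limit, the deadband and the sign convention are all assumptions for illustration), the offset of the face from the frame center can be turned into small pan and tilt corrections, and negated for the “shy” behavior:

```python
def servo_step(face_box, frame_size, max_step_deg=5.0, deadband=0.05, shy=False):
    """Return (pan_delta, tilt_delta) in degrees for one control update.

    face_box is (x, y, width, height) in pixels, as a face detector might
    report it; frame_size is (width, height) of the video frame.
    Hypothetical helper: whether a positive delta turns the camera toward
    the face depends on how the servos are mounted.
    """
    x, y, w, h = face_box
    frame_w, frame_h = frame_size

    # Offset of the face center from the frame center, normalized to [-1, 1].
    off_x = (x + w / 2 - frame_w / 2) / (frame_w / 2)
    off_y = (y + h / 2 - frame_h / 2) / (frame_h / 2)

    # Ignore tiny offsets so the servos do not jitter around the center.
    if abs(off_x) < deadband:
        off_x = 0.0
    if abs(off_y) < deadband:
        off_y = 0.0

    # "Shy" mode moves away from the face instead of toward it.
    sign = -1.0 if shy else 1.0
    return (sign * off_x * max_step_deg, sign * off_y * max_step_deg)
```

Calling this once per frame and adding the result to the current servo angles gives a simple proportional follow; a face already in the middle of the frame produces no movement.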

We can also use the blob tracking data to adjust playback speed or signal processing parameters for the delayed video installation mentioned in the first part of this project.

3. Handheld Control

In an effort to increase the mobility and potential covertness of the project, we also developed a handheld control device that could fit in a user’s pocket. The device uses the same Wixel technology as the computer-based controls, but is battery operated and contains its own microcontroller.

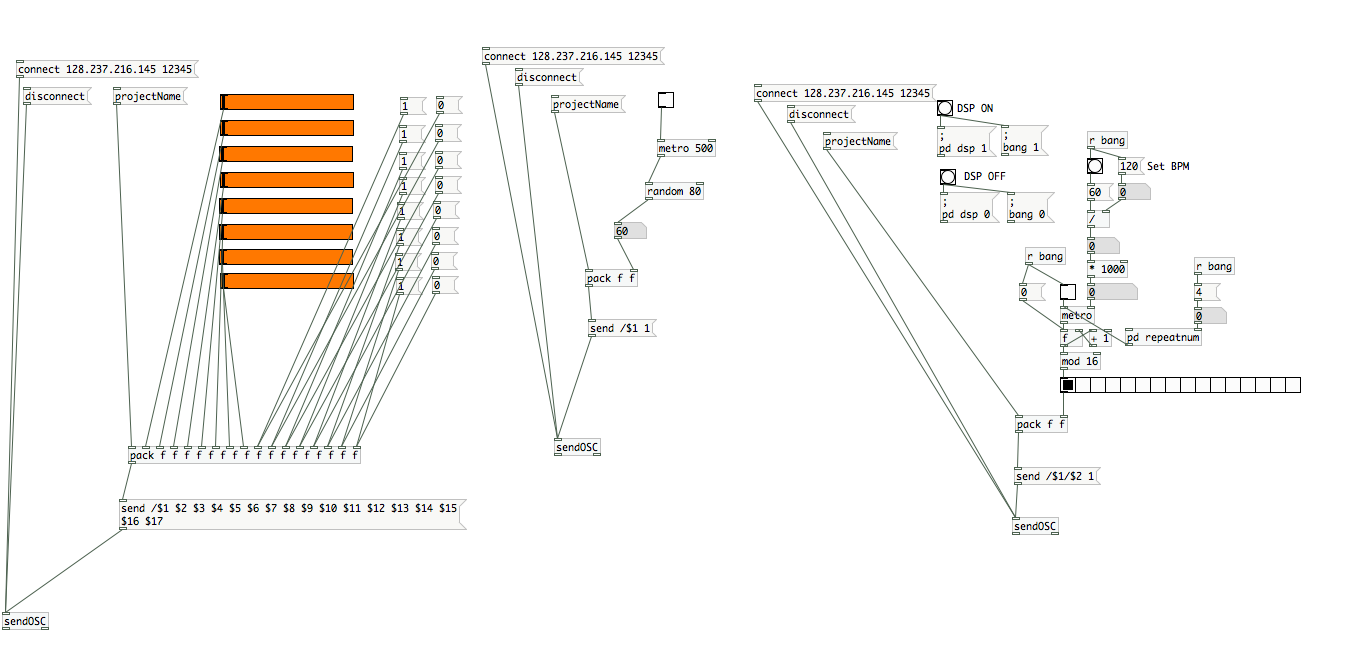

Group Project: OSC “Ground Control” (part 2)

Ground Control?

I’ve been tasked with designing a central control panel for all the instruments that are being built during the course. Naturally, I wanted it to be independent of platform and software license, so I picked PureData as the base platform so that everyone can install and use it on their own computers. It can be compiled into an app and deployed on any computer in minutes, and it is as reliable as the computer’s Wi-Fi connection.

Every project uses OSC in some way. However, even with a standardized protocol like OSC, all projects need a common ground in order to be controlled from one central control panel. This is where Ground Control comes in.

Essentially, it is a collection of PD patches I’ve created that can work harmoniously together.

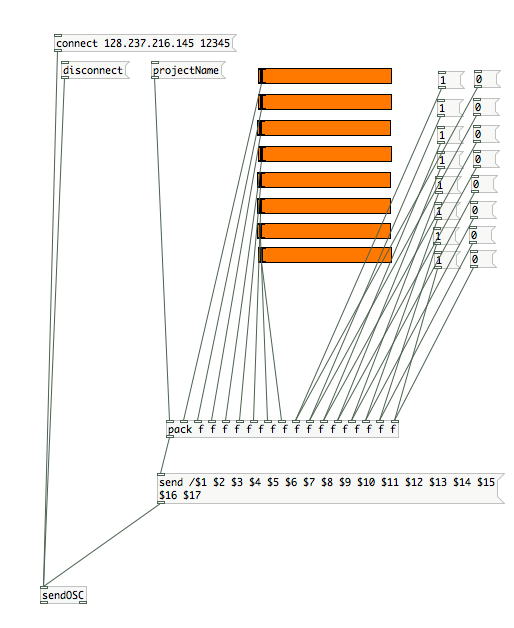

8 Channel Faders / Switches

Assuming some projects would have both variable inputs and on/off switches, I made this patch to control 8 faders and 8 switches. Although it is expandable, the current photo shows only 8 objects.
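For anyone talking to these faders and switches from outside PD, here is a minimal sketch of what an OSC message for one control looks like on the wire, following the OSC 1.0 encoding. The addresses `/fader/1` and `/switch/3`, and the host and port in `send_udp`, are made-up placeholders, not the names Ground Control actually uses.

```python
import socket
import struct


def osc_string(text):
    """Encode an OSC string: ASCII, null-terminated, padded to 4 bytes."""
    data = text.encode("ascii") + b"\x00"
    return data + b"\x00" * (-len(data) % 4)


def osc_message(address, value):
    """Build a one-argument OSC message (float for faders, int for switches)."""
    if isinstance(value, float):
        tag, payload = ",f", struct.pack(">f", value)       # big-endian float32
    else:
        tag, payload = ",i", struct.pack(">i", int(value))  # big-endian int32
    return osc_string(address) + osc_string(tag) + payload


def send_udp(message, host="127.0.0.1", port=9000):
    """Send the message over UDP; host and port here are placeholders."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(message, (host, port))
```

A fader at half position would then be `send_udp(osc_message("/fader/1", 0.5))`, and a switch turned on would be `send_udp(osc_message("/switch/3", 1))`.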

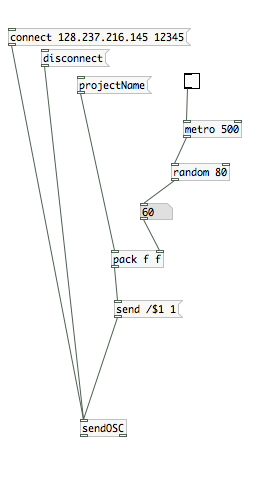

Randomness

Considering many people are working on an instrument, and these instruments are extraordinary hybrid instruments, I thought the control mechanism could benefit from having some level of randomness in it. This patch can generate random numbers every X seconds, in a selected number range, and send this data to the instrument. An example usage would be to control odd electrical devices in a random order.
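The behavior of the patch can be sketched outside PD in a few lines. This is only an illustration: `run_random`, its parameters and the `send` callback are hypothetical names, and the real patch sends its values over OSC rather than to a Python function.

```python
import random
import time


def run_random(low, high, interval_s, send, count, sleep=time.sleep):
    """Send `count` random integers in [low, high], one every `interval_s` seconds.

    `send` is any callable that delivers a value to the instrument.
    `sleep` can be swapped out so the loop can be tried without waiting.
    """
    rng = random.Random()
    for _ in range(count):
        send(rng.randint(low, high))  # randint includes both end points
        sleep(interval_s)


if __name__ == "__main__":
    # Example: pick one of 8 devices at random, twice a second, five times.
    run_random(1, 8, 0.5, print, count=5)
```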

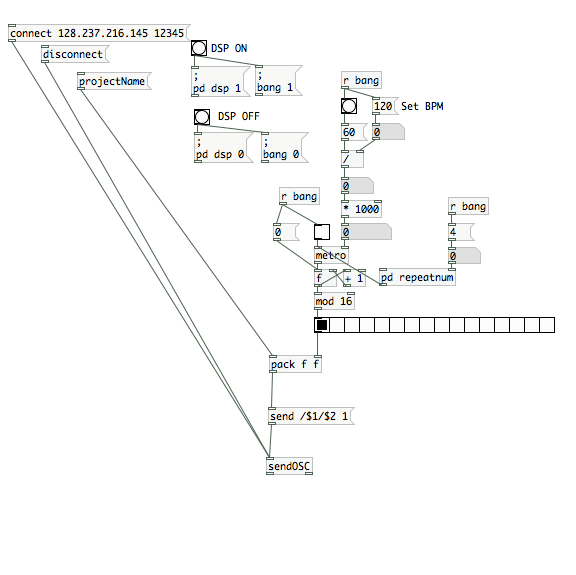

Sequencer

Making hybrid instruments is no fun if computers are not being extra helpful. I thought a step sequencer could dramatically improve some hybrid instruments by adding a time management mechanism. Using this, cameras can be turned on and off for selected periods, specific speakers can be notated/coordinated, or devices can be turned on/off in an orchestrated fashion.
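To make the idea concrete, here is a small sketch of the step sequencer logic, assuming each device has a looping row of on/off steps. The device names, the function names and the four-steps-per-beat default are assumptions for illustration, not taken from the patch.

```python
def step_seconds(bpm, steps_per_beat=4):
    """Duration of one sequencer step in seconds."""
    return 60.0 / bpm / steps_per_beat


def sequence_events(pattern, steps):
    """List (step, device, state) each time a device has to change state.

    `pattern` maps a device name to a row of 0/1 values; rows loop if they
    are shorter than `steps`.
    """
    events = []
    last_state = {}
    for step in range(steps):
        for device, row in pattern.items():
            state = row[step % len(row)]
            # Only report changes, so a device is not re-triggered every step.
            if last_state.get(device) != state:
                events.append((step, device, state))
                last_state[device] = state
    return events


if __name__ == "__main__":
    pattern = {"camera": [1, 0, 0, 1], "speaker": [0, 1]}
    for event in sequence_events(pattern, steps=8):
        print(event)
```

Reporting only state changes keeps the message traffic low, which matters when every event ends up as an OSC message over Wi-Fi.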

Group Project: Multi-Channel analog video recording system (part 2)

Overview

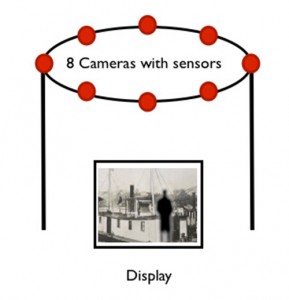

Eight video cameras present eight different views into a dynamic world. They can be oriented a number of ways including inward or outward on a subject.

Our basic setup is a box with the eight cameras arranged along the top. Cables run from the cameras to the base of the box where they are connected to power sources and a video multiplexer. The base also contains an Arduino which is used to control the mux. Power for the mux is supplied via the Arduino. A basic diagram of this setup can be seen below (this setup matches our “Fat Shark” application, as described in this post, but also can be generalized).

Hardware Details

Cameras

These cameras are generic analog mini cameras you can buy on the internet or steal from the ArtFab lab. They can be fed with 9-12V, and come with a 3-conductor cable: V+, GND and video.

Camera boxes

The camera boxes were constructed out of MDF. There is nothing special about the design except that there are holes to allow the camera to poke out as well as for the cord to come in. A good place to create a box is here: boxmaker.rahulbotics.com/.

Our camera cords are secured inside by foam padding and a zip-tie.

Open-beam structure

The frame is built from OpenBeam aluminum extrusion, which is rigid enough to carry the cameras: openbeamusa.com/

Cords

Each camera has power input and video output. We used three 1-to-4 power splitters to distribute power from a single 9V source to the 8 cameras and other components. The video output eventually terminates as RCA to connect to the video MUX.

Mux

The mux has 8 analog video inputs, a selector input, an enable input, a power input, and 1 analog video output. This board is a collaboration between Ray Kampmeier and Ali Momeni, and more information can be found here: www.raykampmeier.net

Arduino

The Arduino controls the mux selector, either by running a program that switches channels or by following an external controller, e.g. a computer or a phone sending OSC. Any Arduino will do.
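The channel selection can be sketched as follows, under the assumption that the selector is a 3-bit binary address, which is common for 8-to-1 multiplexers. We have not checked this against the board documentation, so treat the bit order and width as placeholders. On the Arduino, each bit would be written to one digital pin.

```python
def select_bits(channel, width=3):
    """Return the selector bits for a mux channel, least significant bit first.

    Assumes a plain binary address (channel 0-7 for width=3); the real
    board may number or order its select lines differently.
    """
    if not 0 <= channel < 2 ** width:
        raise ValueError("channel out of range for this selector width")
    return tuple((channel >> bit) & 1 for bit in range(width))


if __name__ == "__main__":
    for channel in range(8):
        print(channel, select_bits(channel))
```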

Immersion RC

The video output can be routed to a wireless video transmitter, which takes a video input and power. Their website is: www.immersionrc.com

Fat Shark

The goggles with screens inside: www.fatshark.com

Code

All our code and documentation is located on GitHub: github.com/sbarton272/VideoMux.

Looking Outwards: Fat Shark

As shown in the diagram, the video multiplexer is connected to the ImmersionRC transmitter, which sends a radio signal to the Fat Shark goggles, where the single output video appears. Fat Shark is a very interesting device because it lets you see video from vantage points you could not normally view from. As of now, the cameras are fixed in place on the Open-beam structure. The structure is robust enough to be moved around, which opens up a wide range of possible locations for the system.

Looking Inwards

The setup of the cameras allows us to rotate the cameras inwards. For this application, we place an object in the middle and use the eight cameras to view it from different angles. To hold the object in place, we cut out a square piece of Masonite that rests on four screws and can be moved up and down depending on the size of the object. As in the Looking Outwards application, the video is controlled from a phone through TouchOSC.

Third Application

We took the outward facing camera set-up and did a few shoots using our new compass controller. Here are the results:

Experiment 1:

Experiment 2:

Fourth Application: An Image Capture System for 8 Cameras with Different Angles.

The aim of this project is to build an image capture system for the 8 cameras in our camera box. The capture system combines real-time processing software (Pure Data) with an open-source hardware platform (Arduino). It works as follows. First, we connect the video multiplexer to the Arduino so that we can choose which camera, and therefore which angle, to use. Then, in a Pure Data patch written for this Arduino and multiplexer combination, a GUI lets the user choose a camera, capture images, and save them as files on the PC. These images can then be used to make 3D scans or multi-view photos such as panoramas.

Group members

- Mauricio Contreras

- Spencer Barton

- Patra Virasathienpornkul

- Sean Lee

- JaeWook Lee

- David Lu

Group Project: Multi-Channel analog video recording system (part 1)

The project is centered around an 8-to-1 analog video multiplexer board. This board is a collaboration between Ray Kampmeier and Ali Momeni, and more information can be found here.

In the present setup, 8 small analog video cameras (“surveillance” type) are connected as inputs to the board, and the output is connected to a monitor. The selection of which of the 8 inputs gets routed to the output is done by an Arduino, which in turn maps the input of a distance sensor to a value between 1 and 8. Thus, one can cycle through the cameras simply by placing an object at a certain distance from the sensor. The connection diagram can be seen below:

A picture of the board with 8 inputs is displayed below (note the RCA connectors):

The original setup built for showcasing the project uses a box shaped cardboard structure to hold a camera in each of its corners, with the cameras pointing at the center of the box (see below).

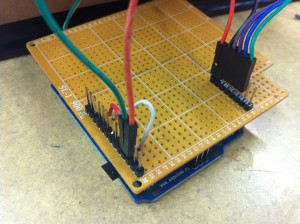

A simple “shield” board was designed to facilitate the interface between the Arduino, the distance sensor and the video mux.
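The distance-to-camera mapping described above can be sketched like this. The 0-1023 reading range assumes a 10-bit analog input, as on most Arduino boards; the real sensor will have its own usable range, so `min_reading` and `max_reading` are placeholders to calibrate.

```python
def distance_to_channel(reading, min_reading=0, max_reading=1023, channels=8):
    """Map a raw distance-sensor reading to a camera number from 1 to `channels`.

    The reading range is split into equal bands, one per camera; readings
    outside the range are clamped to the first or last camera.
    """
    clamped = max(min_reading, min(max_reading, reading))
    span = max_reading - min_reading + 1
    return (clamped - min_reading) * channels // span + 1


if __name__ == "__main__":
    for reading in (0, 127, 128, 512, 1023):
        print(reading, "->", distance_to_channel(reading))
```

With the default range each camera gets a band of 128 readings, so a hand moving steadily toward or away from the sensor steps through the cameras in order.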

Improvements

The current camera frame is made of cardboard, which is not the most robust of materials. A new frame will be constructed of aluminum bars assembled into a strong cube:

This is the cube being assembled:

Wire attachments for the cameras will be routed away from the box to a board for further processing.

Project Ideas

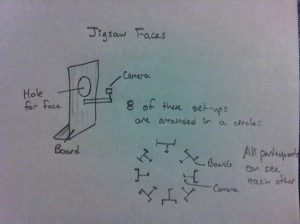

1) Jigsaw faces

Our faces hold a universal language. We propose combining the faces of eight people to create a universal face. Eight cameras are set up, one per person. The participants place their faces through a hole in a board so that the camera only sees the face. These set-ups are arranged in a circle so that all the participants can see each other. The eight faces are taped, and a section of face is selected from each person to combine into one jigsaw face. This jigsaw face is projected so that the participants can see it. This completes the feedback loop. The jigsaw face updates in real time, so as the participants share an experience, their individual expressions combine in the jigsaw face.

The Jigsaw Face will consist of a few boards (of wood) for people to put their face through. Each board will have a camera attachment and all the cameras will be attached to a central processor. The boards will be arranged so that people face each other across a circle. This will enable feedback among the participants.

2) The well of time : Time traveling instrument

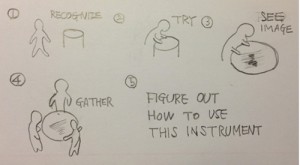

I’m still thinking about what 8 cameras mean, why we need them, and what original things we can do with them. I assume that 8 cameras give 8 different views, and that they could also stand for 8 distinct points in time. From there, I realized we could present a moment that mixes time periods: each camera’s live image of the user is combined with an old photo from the past, triggered by a motion or distance sensor attached to that camera.

Here is a sample image of the idea. It combines the present moment with the past, when the computer science building was being constructed.

And if a user stands at another camera, we could show an image like the one below: the moment the propeller steamboat was first introduced. Basically, this is an artistic way of time traveling. So the limitation of our black-and-white cameras would not be a problem; in this case, it could even be a benefit.

For this idea, of course, we have to build an installation that looks like this.

More precisely, it has this kind of structure.

Finally, here is an example interaction scenario with a user, along with a diagram.

3) Object interventions

Face and body expressions can tell a lot about people and their feelings towards the surroundings or the objects of interaction. Imagine attaching eight cameras to a handheld object or a large sculpture. With eight cameras as the inputs, the one output will be the video from the camera that is activated by a person interacting with it in that specific area. The object can range from a small handheld object like a Rubik’s Cube to a large sculpture at a playground. We think it will be very interesting to see the changes in face and body expressions as a person gets more (or less) comfortable with the object. It might be more interesting to hide these cameras so that the user will be less conscious of their expressions because they do not realize they are being filmed. It will be more difficult to do that with a large public sculpture, but we can prototype a handheld object with specific places designed for the cameras so that they cannot be seen.

4) Body attachment

When we see the world, we see it from our eyes. Why not view the world from our feet? Perceptions can change with a simple change in vantage point. We propose to place cameras on key body locations (feet, elbows, knees and hands) in order to view the world from a new vantage point as we interact with our surroundings. Cameras would be attached via elastic, and wires routed to a backpack for processing.

Group members:

- Mauricio Contreras

- Spencer Barton

- Patra Virasathienpornkul

- Sean Lee

- JaeWook Lee

- David Lu

Assignment 2 “Musical Painting” by Wanfang & Meng(2013)

What:

This idea came from translating between music and painting. When somebody draws a picture, the music changes at the same time. So it looks like you are drawing music :)

How:

We use a sensor to measure the light, read it as an analog input, and then transform it into sound. I think the musical painting is a translation from visible to invisible, from seeing to listening.
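One possible way to turn a light reading into sound is to map it to a pitch. The sketch below is only an illustration of that idea, not the mapping used in this piece: the 0-1023 input range assumes a 10-bit analog input, and the note range (MIDI 48 to 84) is an arbitrary choice. The note-to-frequency formula is the standard equal-temperament one with A4 = 440 Hz.

```python
def reading_to_note(reading, low_note=48, high_note=84, max_reading=1023):
    """Map an analog light reading (0..max_reading) to a MIDI note number."""
    clamped = max(0, min(max_reading, reading))
    return low_note + round(clamped / max_reading * (high_note - low_note))


def midi_to_hz(note):
    """Convert a MIDI note number to a frequency in Hz (A4 = note 69 = 440 Hz)."""
    return 440.0 * 2 ** ((note - 69) / 12)


if __name__ == "__main__":
    for reading in (0, 256, 512, 768, 1023):
        note = reading_to_note(reading)
        print(reading, note, round(midi_to_hz(note), 1))
```

Under this mapping, brighter areas of the painting play higher notes and darker areas play lower ones.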

Why:

It is fun to break the rules between different senses. On the question of the differences between noise, sound and music, I personally think that sound is what people normally hear, noise is what may disturb people, and music is sound beyond people’s expectations. Since people’s ideas change with the environment, we will create music that suits it.

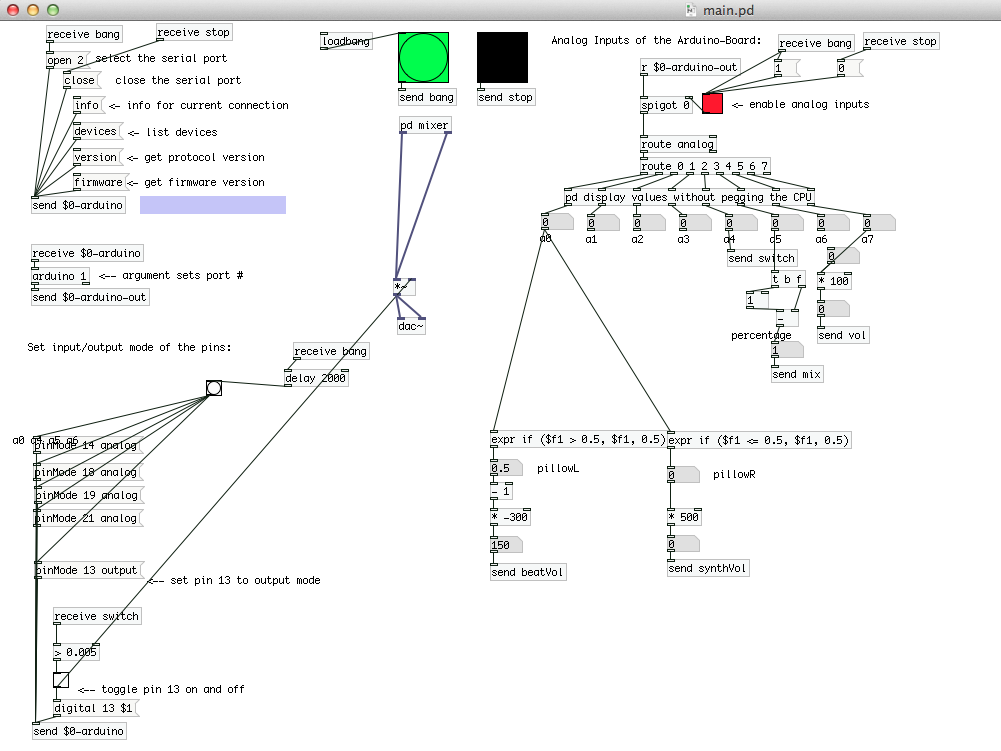

Assignment 2: “Comfort Noise” by Haochuan Liu & Ziyun Peng (2013)

Idea

People who don’t usually pay attention to noise often dismiss it as disturbing sound, overlooking the musical part of it: the rhythm, the melody and the harmonics. We hear it, and we want to translate and amplify the beauty of noise for people who haven’t noticed it.

Why pillow?

The pillow is a metaphor for comfort: this is what we want people to feel when hearing noise through our instrument, contrary to the impression noise usually makes on people.

When you place your head on a pillow, it’s almost like you’re in a semi-isolated space – your head is surrounded by the cotton, the visual signals are largely reduced since you’re now looking upwards and there’s not that much happening in the air. We believe by minimizing the visual content, one’s hearing would become more sensitive.

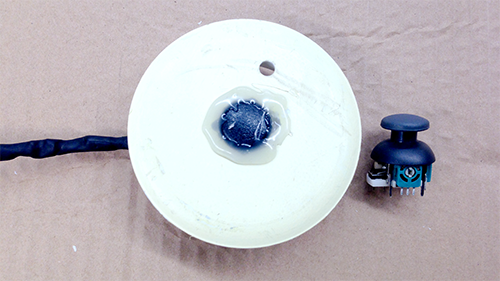

Make

We use computational tools (Pure Data & SPEAR) and our musical ears to extract the musical information in the noise, then map it to the musical sounds (drum and synth) that people are familiar with.

The Pduino (for reading Arduino in PD) and PonyoMixer (multi-channel mixer) helped us a lot.

Inside the pillow, there’s a PS2 joystick used to track the user’s head motions. It’s a five-direction joystick, but in this project we’re just using left and right. We had a lot of fun making this.

Here’s the mix box we made for users to adjust the volume and the balance of the pure noise and the musical noise extraction sounds.
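As an illustration of what the two knobs do, the balance and volume can be thought of as a crossfade followed by a master gain. The equal-power curve below is our assumption of a reasonable choice, not a description of the actual PD patch, and `mix_gains` is a hypothetical helper name.

```python
import math


def mix_gains(mix, volume):
    """Return (noise_gain, musical_gain) for the two sound sources.

    mix = 0.0 plays only the pure noise, mix = 1.0 only the musical
    extraction; volume scales both. Inputs are clamped to 0..1, e.g. a pot
    reading divided by its maximum value.
    """
    mix = max(0.0, min(1.0, mix))
    volume = max(0.0, min(1.0, volume))
    # Equal-power crossfade: the summed power stays constant across the knob.
    angle = mix * math.pi / 2
    return volume * math.cos(angle), volume * math.sin(angle)


if __name__ == "__main__":
    for mix in (0.0, 0.25, 0.5, 0.75, 1.0):
        print(mix, mix_gains(mix, volume=0.8))
```

An equal-power curve avoids the dip in loudness that a straight linear crossfade produces at the middle of the knob.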

The more detailed technical setting is as listed below:

Raspberry Pi – running Pure Data

Pure Data – reading sensor values from the Arduino and sending controls to the sounds

Arduino Nano – connected to sensors and Raspberry Pi

Joystick – track head motion

Pots – Mix and Volume control

Switch – ON/OFF

LED – ON/OFF indicator

Assignment 2: “Drip Hack” by David Lu (2013)

Drip Hack is a hack that drips. Inspired by my past experiences with cobbling together random pieces of cardboard, this piece aims to explore our childlike curiosity about water dripping in the kitchen sink. The rate of dripping can be controlled by the pair of valves on top, and the water drops hit the metal bowl and plastic container below. The resulting vibrations are picked up by piezo-electric mics, which are amplified by ArtFab’s pre-amp. Since there are two drippers, interesting cross rhythms can be generated.

Assignment 2: “Be Still” by Jakob Marsico (2013)

“Be Still” mimics rhythmic patterns that can only be heard when one stops to listen. The piece focuses on our need to pay attention to nature. Using motion sensors, solenoid valves and a microcontroller, “Be Still” forces the visitor to stop for an exaggerated amount of time before it grants them the pleasure of hearing its song.