Final Project: Drawable Stompbox – Haochuan Liu

Drawable stompbox

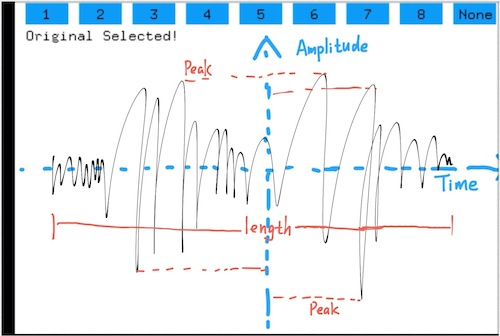

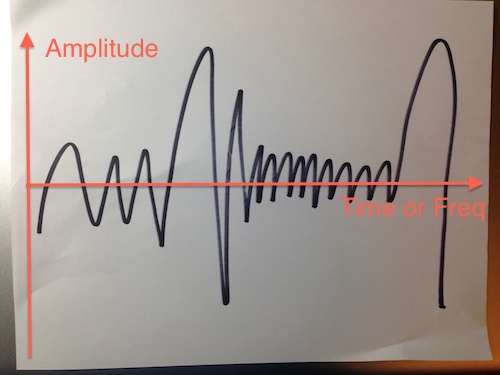

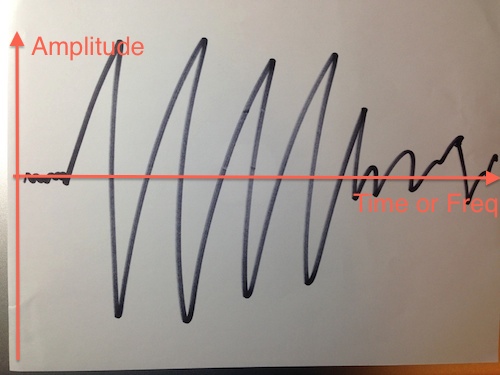

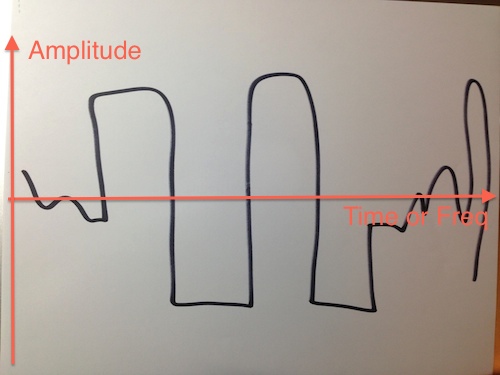

Drawable Stompbox offers guitarists a more interesting and interactive way to explore the parameters of guitar effects. With this instrument, you select the guitar effect you want, then draw its parameters with your finger. Much like a time-domain or frequency-domain diagram, the instrument maps what you have drawn to a set of numbers representing amplitude and frequency information, which in turn change the parameters of a pre-written guitar effect. Much of the fun comes from trying to figure out the relationship between your drawing and the sound you hear.

Screenshot and video demo

Here is a screenshot of the Drawable Stompbox running on my iPad:

When you draw on the iPad, you cannot see the lines or patterns. I keep the canvas blank on purpose, so that people hear what they have drawn instead of seeing it.

Here is a video demo:

drawable stompbox final video from Haochuan Liu on Vimeo.

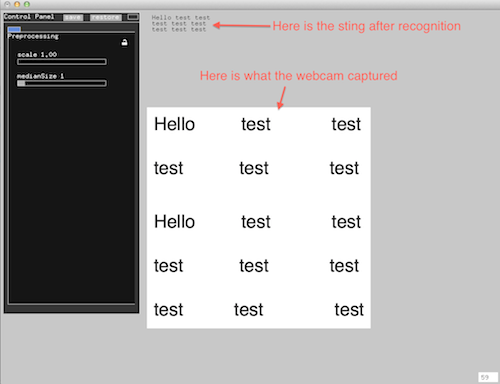

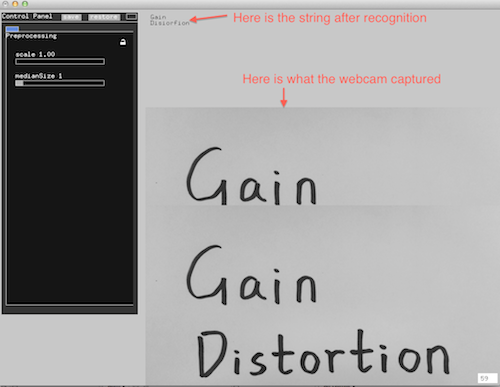

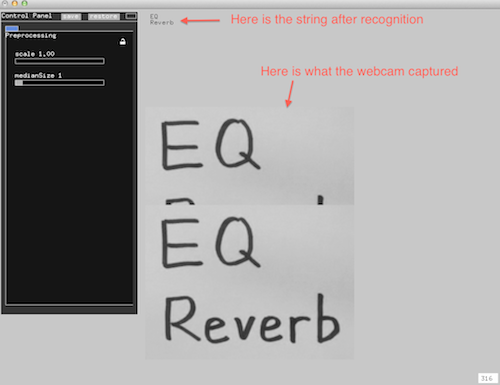

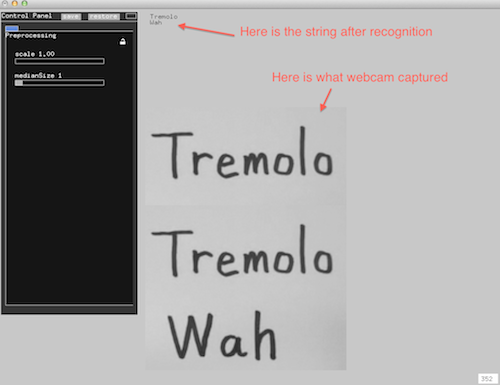

Previous version

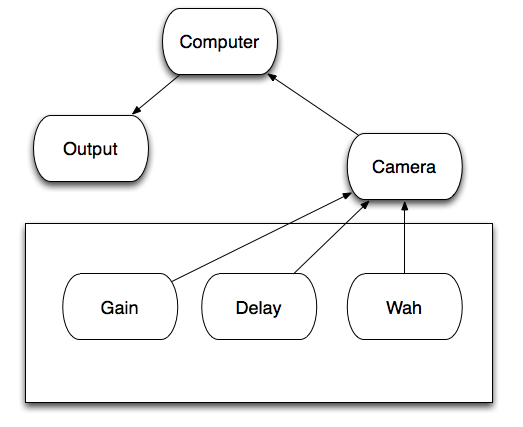

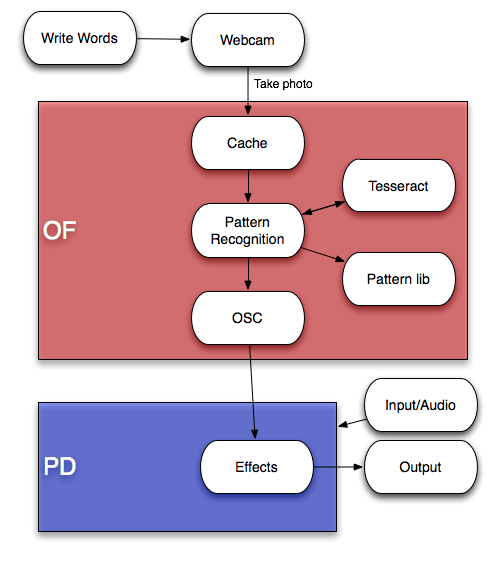

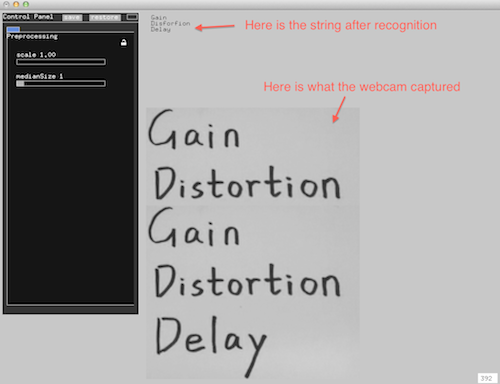

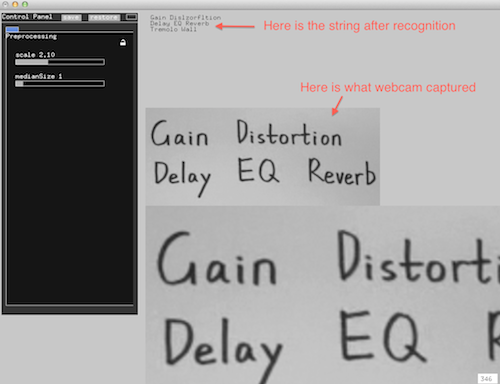

The project changed significantly along the way. At the beginning, Drawable Stompbox was just a selector for guitar effects: after you wrote the name of an effect on a piece of paper, a webcam mounted above the paper captured what you had written and passed it to software written in openFrameworks. The software analyzed the words using optical character recognition (OCR). When it recognized a valid effect name, it told PureData, through OSC, to turn on that effect, so you would finally hear what you had written when you played your guitar.

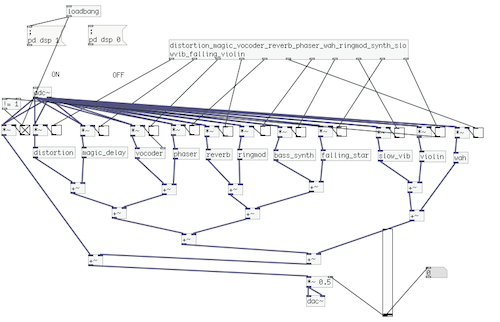

Technical Details

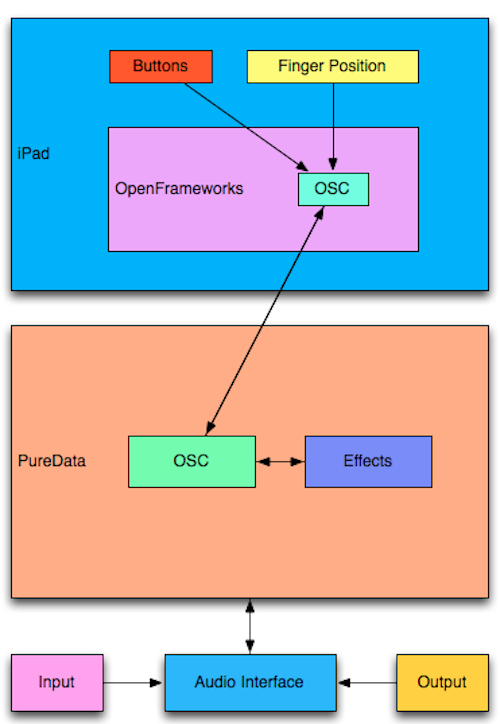

Here is a diagram of the Drawable Stompbox:

Buttons and coordinates

I use very simple functions in openFrameworks to draw the buttons and to get the x/y coordinates as fingers move across the iPad screen.
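As a rough illustration of the logic behind this step, here is a minimal sketch in plain C++ with no openFrameworks dependency. It assumes rectangular buttons and a stroke stored as a list of points. The names `Button`, `hitTest`, and `Stroke`, as well as the effect names used with them, are hypothetical and not taken from the actual app. In a real app these functions would presumably be called from the framework's touch callbacks.

```cpp
#include <cassert>
#include <cstddef>
#include <string>
#include <vector>

// One touch sample in screen pixels.
struct Point {
    float x;
    float y;
};

// A rectangular on-screen button (hypothetical layout, not the author's).
struct Button {
    std::string effect;  // name of the effect this button selects
    float x, y, w, h;    // top-left corner and size in pixels

    // True when the point lies inside the button rectangle.
    bool contains(Point p) const {
        return p.x >= x && p.x < x + w && p.y >= y && p.y < y + h;
    }
};

// Returns the index of the button under the touch, or -1 if none is hit.
int hitTest(const std::vector<Button>& buttons, Point p) {
    for (std::size_t i = 0; i < buttons.size(); ++i) {
        if (buttons[i].contains(p)) return static_cast<int>(i);
    }
    return -1;
}

// Collects the points of one stroke while the finger moves.
struct Stroke {
    std::vector<Point> points;

    // Called when the finger first touches the canvas.
    void begin(Point p) {
        points.clear();
        points.push_back(p);
    }

    // Called for every subsequent move; requires a prior begin().
    void extend(Point p) {
        assert(!points.empty());
        points.push_back(p);
    }
};
```

A touch that starts on a button would select an effect through `hitTest`, while a touch that starts on the canvas would feed a `Stroke` through `begin` and `extend`.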

Mapping

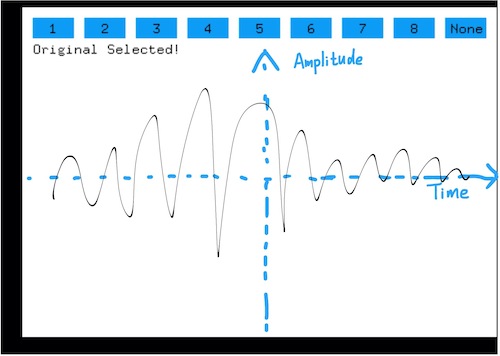

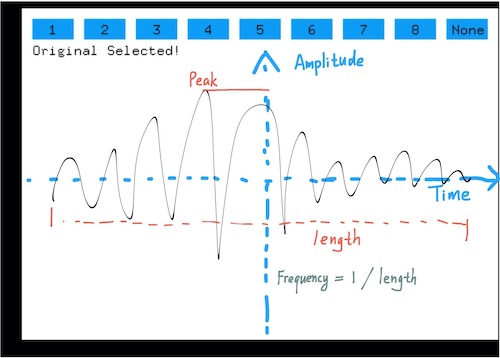

The blue coordinate axes, which are invisible in the real software, represent amplitude (x coordinate) and time (y coordinate) information.

When you draw something on the canvas, the peak of the drawing determines the volume of the sound you hear, and the length of the drawing determines the frequency parameter.
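One possible reading of this mapping is sketched below in plain C++. It makes several assumptions that the write-up does not confirm: the "peak" is the largest value along the amplitude axis, the "length" is the total path length of the stroke, and both results are normalized to the range 0 to 1 before being handed to the effect. The names `Sample`, `EffectParams`, `mapStroke`, `maxAmplitude`, and `maxLength` are hypothetical.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// One point of the drawing, expressed along the two canvas axes.
struct Sample {
    float amplitude;  // position along the amplitude axis, in pixels
    float time;       // position along the time axis, in pixels
};

// Normalized parameters handed to the effect (assumed range 0..1).
struct EffectParams {
    float volume;
    float frequency;
};

// Maps a stroke to effect parameters.
// Assumption: peak = largest amplitude value, length = total path length.
EffectParams mapStroke(const std::vector<Sample>& stroke,
                       float maxAmplitude, float maxLength) {
    assert(maxAmplitude > 0.0f && maxLength > 0.0f);
    EffectParams out{0.0f, 0.0f};
    if (stroke.empty()) return out;

    float peak = 0.0f;    // negative amplitudes are treated as 0
    float length = 0.0f;  // sum of the segment lengths between samples
    for (std::size_t i = 0; i < stroke.size(); ++i) {
        peak = std::max(peak, stroke[i].amplitude);
        if (i > 0) {
            const float da = stroke[i].amplitude - stroke[i - 1].amplitude;
            const float dt = stroke[i].time - stroke[i - 1].time;
            length += std::sqrt(da * da + dt * dt);
        }
    }

    // Clamp so that oversized drawings saturate instead of overshooting.
    out.volume = std::min(peak / maxAmplitude, 1.0f);
    out.frequency = std::min(length / maxLength, 1.0f);
    return out;
}
```

Under these assumptions a taller drawing is louder and a longer one pushes the frequency parameter higher, which matches the behaviour described above. How the real app scales the values may differ.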

Communication

The software on the iPad uses OSC to communicate with PureData running on a laptop. This way, PureData always knows which effect is selected, as well as the current amplitude and frequency values.
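To make the wire format concrete, here is a minimal sketch of how one such message could be encoded by hand following the OSC 1.0 layout: a null-padded address, a null-padded type tag string, then big-endian 32-bit arguments. The address `/stompbox` and the choice of packing the effect index, amplitude, and frequency into a single `,iff` message are assumptions for illustration; the write-up does not say how the actual messages are structured. In practice an OSC library would normally do this encoding for you.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstring>
#include <string>
#include <vector>

static_assert(sizeof(float) == 4, "OSC float32 requires a 4-byte float");

// Appends an OSC string: the characters, a terminating null,
// then extra nulls so the total length is a multiple of 4.
void appendOscString(std::vector<std::uint8_t>& out, const std::string& s) {
    out.insert(out.end(), s.begin(), s.end());
    const std::size_t pad = 4 - (s.size() % 4);  // always 1 to 4 nulls
    out.insert(out.end(), pad, std::uint8_t{0});
}

// Appends a 32-bit value in big-endian (network) byte order.
void appendBigEndian32(std::vector<std::uint8_t>& out, std::uint32_t v) {
    out.push_back(static_cast<std::uint8_t>(v >> 24));
    out.push_back(static_cast<std::uint8_t>(v >> 16));
    out.push_back(static_cast<std::uint8_t>(v >> 8));
    out.push_back(static_cast<std::uint8_t>(v));
}

// Encodes one message carrying the effect index, amplitude, and frequency.
// The message layout is hypothetical, not the app's documented protocol.
std::vector<std::uint8_t> encodeParams(const std::string& address,
                                       std::int32_t effect,
                                       float amplitude,
                                       float frequency) {
    assert(!address.empty() && address[0] == '/');  // OSC addresses start with '/'
    std::vector<std::uint8_t> msg;
    appendOscString(msg, address);
    appendOscString(msg, ",iff");  // type tags: int32, float32, float32

    appendBigEndian32(msg, static_cast<std::uint32_t>(effect));

    std::uint32_t bits = 0;
    std::memcpy(&bits, &amplitude, sizeof bits);  // reinterpret float bits safely
    appendBigEndian32(msg, bits);
    std::memcpy(&bits, &frequency, sizeof bits);
    appendBigEndian32(msg, bits);
    return msg;
}
```

The resulting bytes would be sent as the payload of a single UDP datagram to the port on which the PureData patch listens.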

Future Work

Currently, when you play the guitar with the Drawable Stompbox, you still need a partner to draw on the iPad canvas to change the effect parameters. For now it is a prototype, or a toy for practice, rather than a tool for performance. One improvement would be to replace finger input with foot input, so that you could play the guitar and draw the effect parameters with your foot at the same time.