Final Project Presentation – Mauricio Contreras

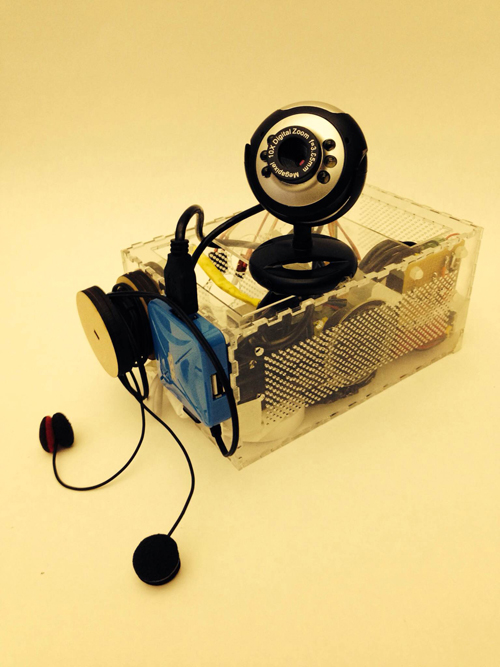

My fourth and final milestone, which doubled as the final project presentation, involved real-time driving of the movement of a simulated robotic arm with a gestural controller capable of haptic feedback. By this time, however, I was interfacing with an actual ABB IRB 6640 industrial robot, and my controller was a smartphone. The smartphone’s IMU reports its orientation, which allows mapped gestural control of the position of the robot’s head based on the tilt of the smartphone about each of its own axes. The haptic feedback is provided by the smartphone’s vibrator. By the 3rd milestone I had already implemented the motion control system and the smartphone vibration triggered when the robot touches “virtual walls” (preset coordinates beyond which motion is not allowed). Even so, I had concluded that the “quality” of the motion I was getting was not good enough to make it a desirable sculpting tool. I therefore shifted the priorities of the project toward better motion characteristics, rather than exploring the haptic feedback side further, which up to now is only binary (touch = max vibration, no touch = 0 vibration).
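The virtual walls and the binary vibration described above can be illustrated with a minimal Python sketch. It is not the project's RAPID or Android code: the function names, the box limits and the choice to clamp the target to the box are assumptions made only for this example.

```python
def clamp_to_sandbox(target, lower, upper):
    """Clamp an (x, y, z) target to the allowed box.

    Returns the clamped target and a flag that is True when the requested
    target lay outside the box, i.e. a virtual wall was hit.
    """
    clamped = tuple(min(max(t, lo), hi) for t, lo, hi in zip(target, lower, upper))
    touched = clamped != tuple(target)
    return clamped, touched


def vibration_level(touched):
    """Binary haptic feedback: full vibration on contact, none otherwise."""
    return 1.0 if touched else 0.0


# Hypothetical sandbox in mm; the lower z limit stands in for a table top.
LOWER = (200.0, -400.0, 50.0)
UPPER = (900.0, 400.0, 800.0)

target, touched = clamp_to_sandbox((500.0, 0.0, 20.0), LOWER, UPPER)
print(target, vibration_level(touched))  # (500.0, 0.0, 50.0) 1.0
```

A graded feedback scheme would replace `vibration_level` with a function of the distance to the nearest wall, which is the direction the text says was left unexplored.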

Motion experiments

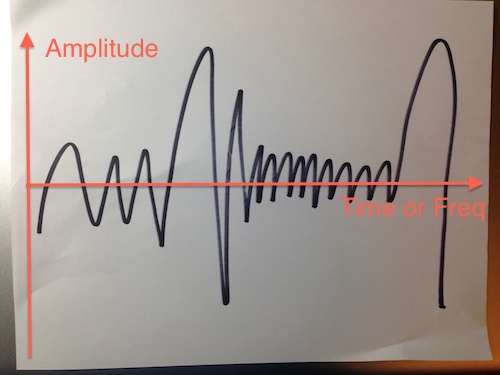

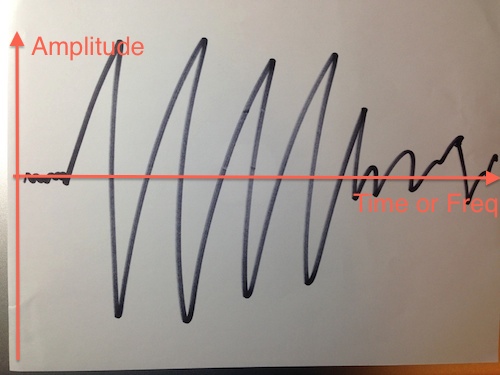

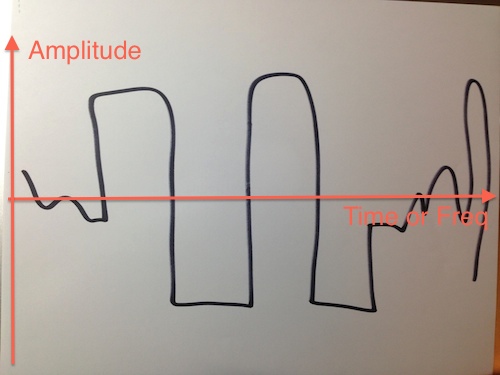

ABB industrial robotic arms can only be driven by sending them targets (points with 6 degrees of freedom: 3 coordinates for position and 3 for head orientation/rotation), and the motion path between targets cannot be interrupted. This means that updating the next target in real time as the driving variable changes (in this case the smartphone’s orientation) is essentially not possible. I say essentially because the movement instructions take a parameter called “zone”, which specifies how near the head of the robot must get to the current target for the instruction to be considered complete, so that execution can move on to the next one. “zone = fine” means the target has to be reached precisely. “zone = zX”, where X is one of a set of preset numbers, lets the robot pass “near” the target (how near depends on X). Once the head reaches the “zone” around the target, the next instruction in the program starts executing.
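As a rough illustration of the “zone” idea, the completion test can be modelled as a distance check. This is a simplified Python sketch, not how the robot controller is implemented: the radii in `ZONE_RADIUS_MM` and the tolerance used for `fine` are assumed values, and the actual presets should be taken from ABB’s RAPID reference.

```python
import math

# Assumed radii in mm for a few "zX" presets; illustrative values only.
ZONE_RADIUS_MM = {"z1": 1.0, "z5": 5.0, "z10": 10.0, "z50": 50.0}
FINE_TOLERANCE_MM = 0.01  # assumed stand-in for "reached precisely"


def instruction_complete(position, target, zone):
    """Return True once the head is near enough for the next instruction to start."""
    distance = math.dist(position, target)
    if zone == "fine":
        return distance <= FINE_TOLERANCE_MM
    return distance <= ZONE_RADIUS_MM[zone]


target = (500.0, 0.0, 300.0)
head = (500.0, 0.0, 292.0)  # 8 mm short of the target
print(instruction_complete(head, target, "fine"))  # False
print(instruction_complete(head, target, "z10"))   # True
```

With a larger zone the hand-over to the next target happens earlier, which is why a non-fine zone only helps if the next target is already known when the zone is entered.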

With the above information, I considered the following alternatives for improved motion, grouped mainly in two categories:

A. Better target generation

1) Low pass filtering of the orientation

2) Keep a reception buffer with at least one more target than the current instruction (for “zone!=fine” to work well)

3) Smart generation of targets based on gesture recognition

B. Optimization of movement command parameters

1) Setting of correct speed, step size and zone.
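Option A.1 in the list above, low pass filtering of the orientation, is only named in this write-up, which reports results for B.1. Purely as an illustration of what such a filter could look like, here is a minimal exponential moving average in Python. The class name and the `alpha` value are hypothetical, and the sketch assumes tilt angles that stay away from the ±180° wrap-around.

```python
class OrientationLowPass:
    """Exponential moving average over (roll, pitch, yaw) samples in degrees."""

    def __init__(self, alpha=0.2):
        if not 0.0 < alpha <= 1.0:
            raise ValueError("alpha must be in (0, 1]")
        self.alpha = alpha   # higher alpha = less smoothing, faster response
        self.state = None    # no sample seen yet

    def update(self, sample):
        """Blend a new sample into the filtered orientation and return it."""
        if self.state is None:
            # The first sample initialises the filter without smoothing.
            self.state = tuple(sample)
        else:
            a = self.alpha
            self.state = tuple(
                a * new + (1.0 - a) * old for new, old in zip(sample, self.state)
            )
        return self.state


smoother = OrientationLowPass(alpha=0.5)
smoother.update((0.0, 0.0, 0.0))
print(smoother.update((10.0, 0.0, -4.0)))  # (5.0, 0.0, -2.0)
```

The trade-off is the usual one: a smaller `alpha` suppresses hand tremor more strongly but adds lag, which works against the responsiveness the project is after.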

Out of all the available options, I started with B.1. The baseline to compare against is the first motion test, which used a fixed (max) speed, a fixed step size (usually 5-10 cm) and zone=fine, as shown in the video below:

The main progress in this direction came from using a variable step size in each axis, based on the change in magnitude of the rotation about that axis, together with a variable speed, based on the max of the absolute values of all rotation components. Static weights were applied so that the largest step size would be around 10 cm, and the speed varies between 0 and 100% of the robot’s max speed, which is 200 mm/s in the manual mode of operation (the only mode I’ve been allowed to use). The result of these parameters was captured on video.
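The variable step size and speed scheme described above can be sketched in Python as follows. This is an approximation under stated assumptions rather than the project's actual code: it assumes each step is linearly proportional to the tilt about the corresponding axis, and `FULL_SCALE_DEG` (the tilt that produces the largest step) is a made-up value standing in for the static weights.

```python
MAX_STEP_MM = 100.0      # largest step, around 10 cm as stated in the text
MAX_SPEED_MM_S = 200.0   # manual-mode speed limit stated in the text
FULL_SCALE_DEG = 90.0    # assumed tilt that maps to the largest step


def motion_command(rotation_deg):
    """Map (x, y, z) tilt angles in degrees to per-axis steps and a speed."""
    # Normalise each tilt to [-1, 1]; the clamp keeps steps within MAX_STEP_MM.
    scaled = [max(-1.0, min(1.0, r / FULL_SCALE_DEG)) for r in rotation_deg]
    step_mm = tuple(MAX_STEP_MM * s for s in scaled)
    # Speed follows the largest absolute rotation component (0-100% of max).
    speed_mm_s = MAX_SPEED_MM_S * max(abs(s) for s in scaled)
    return step_mm, speed_mm_s


print(motion_command((0.0, 45.0, -90.0)))  # ((0.0, 50.0, -100.0), 200.0)
```

With the phone held level all three steps and the speed go to zero, so the robot stays put, while a large tilt about any one axis produces a long, fast move along it.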

The resulting motion, while still piecewise, seems much better suited to precise yet responsive control: very little motion is performed when the rotation is near 0 (the smartphone’s own axes aligned with the world’s coordinate system, as described here), and larger displacements result from higher-magnitude rotations. This resembles the way humans work on a physical piece: when precision is required, movements are slow and short/local, whereas the movement between areas of precision is by definition imprecise and is therefore optimized for greater speed at the cost of accuracy. This parameter optimization was shown in the final project presentation. The final precision reached, along with smartphone vibration upon touching virtual walls (the “sandbox”), was shown both in free air and with a very simple demo. The demo consisted of a pencil attached to the arm’s head and a canvas layer on top of a table, where people could draw (safely, since the “sandbox” would not allow the robot to pierce through the table or the adjacent wall). A video of the presentation was taken:

Assessment

The overall goal of the semester was to take a step towards an overarching, ambitious project for my degree: being able to sculpt with a robotic arm. For this class, the goal was to get acquainted with the workflow around the robotic arms in dFab, the Dept. of Architecture’s digital fabrication laboratory. The concrete outcome would be to use the same software that previous users are familiar with and to connect a device capable of gestural control and haptic feedback to drive the robots. As stated, this was achieved, and the following was learnt during the project:

- Responsiveness: real-time driving of the robot seems crucial for the user to feel that she/he is in actual control of the robot. The slower the response, the harder it is to relate one’s own motion to that of the robot in an intuitive way.

- Quality of the motion: the piecewise motion that results from the way the robots are programmed (the lowest layer accessible to the user being RAPID) greatly lowers users’ opinion of the motion. “Dumb” and “robotic” were adjectives used repeatedly by users/observers. Although a good choice of parameters for the motion commands helped, this is a key aspect to address in future development. There are other robots that more closely resemble human arms and allow better real-time interaction, but my degree is based in architecture, and on the practical side I want to explore, and give dFab, a creation tool that is useful and tuned to their setup, which means using the ABB robots. My intuition tells me that A.3, smart target generation, may provide the greatest improvement, and it is the next step I intend to explore in future courses.

- Mapping: the final setup maps smartphone orientation to the position of the robot’s head. While it proves the concept of gestural control, it is indirect (as opposed to driving position with position, which was the original intent), and the final degree of control available to the user seems far from what is desired. Presentation observers had a really tough time trying to draw on the paper provided. As it is now, the controller most resembles a 3-degree-of-freedom joystick, and very likely an off-the-shelf one that would be better in some sense could be purchased. Again, my intuition tells me that direct mapping (position to position and orientation to orientation) is required for “natural” control. Since my research so far indicates that standalone positioning through an IMU is not solved (at least in free air) and cannot be applied to the project, it seems that external sensor-based technologies, such as visual motion capture (“mocap”), are necessary.

- Why?: this question came up again from many observers at the presentation. Since the example application was drawing, many commented that humans can draw much better than the robot did. To this I replied yes, absolutely, since natural-feeling motion has not been achieved yet. More importantly, though, the point of using an industrial robotic arm lies in tasks that would be impossible or at least very difficult for a human to do directly and cumbersome to do with a power tool, such as bending or milling very hard or large materials, and doing so with precision and speed. Essentially, a big industrial robotic arm is made for high-power, high-precision, large-sized applications. For anything that needs a very powerful hand tool, a hard-to-reach position, or both, combined with very high precision, a robot with the correct instructions can do better than a bare hand. I now think it is essential to somehow preserve the high-precision nature of the robot while still exploring the liveliness of human physical sculpting. One way to do this is with a mixed analog/digital instruction set, as in drawing software that combines free-form drawing with a mouse with precise mathematical operations performed on top of it. This is tried and true for sculpting in the virtual world (any CAD software), so it is likely that some of it can be translated in a useful way to the physical world. I intend to build this mixed human-driven/software-enhanced toolkit.

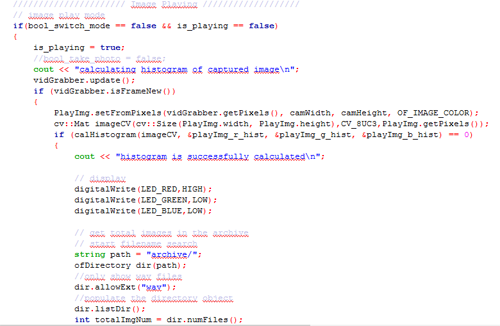

Code

RAPID, Android. See the Future CNC course website and ABB’s full reference for further information on RAPID.

Acknowledgments

I would very much like to thank Mike Jeffers, Madeline Gannon, Zack Jacobson-Weaver, Ali Momeni, Jeremy Ficca, Joshua Bard, Garth Zegling and CMU’s Manipulation Lab for their incredible support of this project.