Audio Graffiti and Music in Motion: Location Based + Spatial Sound

Some impressive spatial audio examples/works by Zack Settle and company. See Zack’s page for more…

This project combines a Wixel wireless data system, servos, microcontrollers and wireless analog video in a small, custom-built box to provide wireless video with remote viewfinding control.

1. Max/MSP Patch Setup:

2. Power Choices:

Using the cv.jit suite of objects, we built a patch that pulls in the wireless video feed from the box and uses OpenCV’s face detection to identify people’s faces. The same patch also uses OpenCV’s background removal and blob tracking functions to follow blob movement in the video feed.

Future projects can use this capability to send movement data to the camera servos once a face is detected, either to center the person’s face in the frame or to look away as if the camera were shy.
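As a rough illustration of that idea, here is a minimal Python sketch (not part of the Max patch) that turns a detected face rectangle into small pan/tilt corrections. The function name `servo_step`, the `gain` value, and the sign convention are all assumptions; on a real rig the signs depend on how the servos are mounted.

```python
def servo_step(face_box, frame_size, gain=0.05, shy=False):
    """Return (pan_delta, tilt_delta) in degrees for one detected face.

    face_box is (x, y, w, h) in pixels; frame_size is (width, height).
    gain (degrees per pixel) is a made-up tuning value.
    """
    x, y, w, h = face_box
    frame_w, frame_h = frame_size
    # Offset of the face centre from the frame centre, in pixels.
    dx = (x + w / 2) - frame_w / 2
    dy = (y + h / 2) - frame_h / 2
    # "Shy" mode simply flips the direction so the camera turns away.
    sign = -1.0 if shy else 1.0
    return (sign * gain * dx, sign * gain * dy)


if __name__ == "__main__":
    # Face to the right of centre in a 320x240 frame.
    print(servo_step((200, 100, 40, 40), (320, 240)))
    print(servo_step((200, 100, 40, 40), (320, 240), shy=True))
```

Calling this once per video frame and adding the result to the current servo angles gives a simple proportional follow (or avoid) behavior.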

We can also use the blob tracking data to adjust playback speed or signal processing parameters for the delayed video installation mentioned in the first part of this project.

In an effort to increase the mobility and potential covertness of the project, we also developed a handheld control device that could fit in a user’s pocket. The device uses the same Wixel technology as the computer-based controls, but is battery operated and contains its own microcontroller.

Ground Control ?

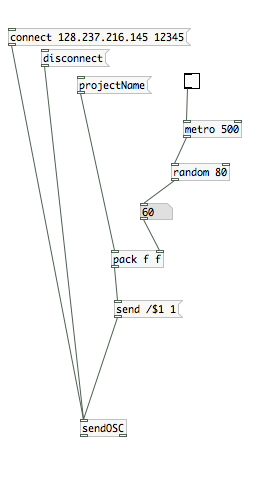

I’ve been tasked with designing a central control panel for all the instruments being built during the course. Naturally, I wanted it to be independent of platform and software license, so I picked PureData as the base platform so that everyone can install and use it on their own computers. It can be compiled into an app and deployed on any computer in minutes, and it is as reliable as the computer’s Wi-Fi connection.

Every project uses OSC in some way. However, even with a standardized protocol like OSC, controlling all projects from one central control panel requires common ground among them. This is where Ground Control comes in.

Essentially, it is a collection of PD patches I’ve created that work together.
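To make the "common ground" concrete, here is a small Python sketch of what a single OSC message looks like on the wire, following the OSC 1.0 layout (null-terminated strings padded to four bytes, then big-endian arguments). The address `/ground/fader/1` is a hypothetical naming scheme for illustration, not the one the patches actually use.

```python
import struct


def osc_string(text):
    """Encode an OSC string: ASCII, null-terminated, padded to 4 bytes."""
    data = text.encode("ascii") + b"\x00"
    data += b"\x00" * (-len(data) % 4)
    return data


def osc_message(address, value):
    """Build an OSC message carrying one 32-bit float argument."""
    return osc_string(address) + osc_string(",f") + struct.pack(">f", float(value))


if __name__ == "__main__":
    # Hypothetical address; each instrument would agree on its own names.
    packet = osc_message("/ground/fader/1", 0.5)
    print(packet)
```

The resulting bytes can be sent as one UDP datagram to whichever host and port an instrument listens on. If every project agrees on the address names and value ranges, one panel can drive all of them.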

8 Channel Faders / Switches

Assuming some projects would have both variable inputs and on/off switches, I made this patch to control 8 faders and 8 switches. It can be expanded further; the current photo shows only 8 of each.

Randomness

Considering that many people are working on instruments, and that these are extraordinary hybrid instruments, I thought the control mechanism could benefit from some level of randomness. This patch can generate a random number every X seconds within a selected range and send it to the instrument. An example use would be to control odd electrical devices in a random order.
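The behavior of the randomness patch can be sketched in Python as follows. This is only an illustration of the logic, not the PD patch itself; `run_random_sender` and its parameters are hypothetical names, and `send` stands in for whatever actually delivers the value to the instrument.

```python
import random
import time


def run_random_sender(low, high, interval_s, count,
                      send=print, sleep=time.sleep, seed=None):
    """Send `count` random integers in [low, high], one every interval_s seconds."""
    rng = random.Random(seed)
    for _ in range(count):
        send(rng.randint(low, high))  # randint includes both end points
        sleep(interval_s)


if __name__ == "__main__":
    # Pick one of 8 devices every half second, five times.
    run_random_sender(1, 8, 0.5, 5)
```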

Sequencer

Making hybrid instruments is no fun if computers are not being extra helpful. I thought a step sequencer could dramatically improve some hybrid instruments by adding a timing mechanism. Using this, cameras can be turned on and off for selected periods, specific speakers can be notated/coordinated, or devices can be turned on/off in an orchestrated fashion.
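A step sequencer of this kind boils down to a grid of on/off cells that is read one column at a time. The Python sketch below shows that logic under simple assumptions: the track names and patterns are made up, and a message is only produced when a track changes state.

```python
def sequence_events(pattern, total_steps):
    """Return (step, track, state) tuples for every on/off change.

    pattern maps a track name to a list of 0/1 cells; each list loops.
    All tracks are assumed to start in the off state.
    """
    events = []
    previous = {name: 0 for name in pattern}
    for step in range(total_steps):
        for name, cells in pattern.items():
            state = cells[step % len(cells)]
            if state != previous[name]:
                events.append((step, name, state))
                previous[name] = state
    return events


if __name__ == "__main__":
    # Hypothetical tracks: a camera and a speaker.
    grid = {"camera": [1, 1, 0, 0], "speaker": [0, 1, 0, 1]}
    for event in sequence_events(grid, 4):
        print(event)
```

In a live setup each event would be sent out at the moment its step is reached, with the step length setting the tempo.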

What:

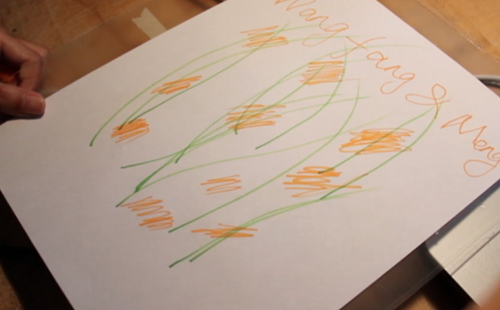

This idea came from the translation between music and painting. When somebody draws a picture, the music changes at the same time. So it looks like you are drawing music :)

How:

We use sensors to measure the light, read the analog input, then transform it into sound. I think of the musical painting as a translation from the visible to the invisible, from seeing to listening.
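One simple way to turn a light reading into a pitch is a linear map onto a range of notes. The Python sketch below assumes a 10-bit analog reading (0 to 1023, as on a classic Arduino board) and a two-octave note range; both the range and the function names are assumptions for illustration, and the actual Max patch may map the values differently.

```python
def reading_to_midi(reading, low_note=48, high_note=72, max_reading=1023):
    """Map an analog light reading onto a MIDI note number."""
    reading = min(max(reading, 0), max_reading)  # clamp out-of-range values
    return low_note + round(reading / max_reading * (high_note - low_note))


def midi_to_hz(note):
    """Convert a MIDI note number to frequency (A4 = note 69 = 440 Hz)."""
    return 440.0 * 2 ** ((note - 69) / 12)


if __name__ == "__main__":
    for reading in (0, 300, 700, 1023):
        note = reading_to_midi(reading)
        print(reading, note, round(midi_to_hz(note), 1))
```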

Why:

It is fun to break the rules between different senses. On the question of the differences between noise, sound and music, I personally think that sound is what people normally hear, noise is what may disturb people, and music is sound beyond people’s expectations. So people’s ideas will change with the environment, and we will create more suitable music.

Our idea begins with traditional Chinese painting, where painted lines concisely express a feeling of strength and rhythm. Our work tries to transfer the beauty of painting to music. However, I found that making a harmonious “sound” from people’s simple input on 9 photosensors is not as easy as I thought. I tried some “chords”, like C mA D and so on. It helps a little bit… The final result is in the video on Vimeo.

I know there is plenty of work left to improve the “algorithm”. Although it does not really sound like music, I think the value of the work is that we try to break the line between sound and light and see what happens.

To find a way to improve it, I looked at several other interactive musical instrument works. I think one way to improve this work may be to play some basic rhythm pieces repeatedly and change only the chords as the interactive element.
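A minimal Python sketch of that proposed improvement might look like this: the rhythm stays fixed, and the nine sensor readings only decide which chord is played. Everything here is hypothetical, including the chord set, the rhythm pattern, and the rule that the darkest (most covered) sensor picks the chord.

```python
# Hypothetical chord set (MIDI note numbers) and a fixed one-bar rhythm.
CHORDS = {"C": [60, 64, 67], "Am": [57, 60, 64], "Dm": [62, 65, 69]}
RHYTHM = [1, 0, 1, 1]  # 1 = play the chord on this beat, 0 = rest


def pick_chord(readings, names=("C", "Am", "Dm")):
    """Choose a chord name from the index of the darkest sensor."""
    darkest = min(range(len(readings)), key=lambda i: readings[i])
    return names[darkest % len(names)]


def render_bar(readings):
    """Return one bar: the chosen chord on each hit, an empty list on rests."""
    chord = CHORDS[pick_chord(readings)]
    return [chord if hit else [] for hit in RHYTHM]


if __name__ == "__main__":
    readings = [900] * 9
    readings[4] = 100  # a hand covers the fifth sensor
    print(pick_chord(readings), render_bar(readings))
```

Because the rhythm never changes, whatever the player does stays in time; only the harmony follows the drawing.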

The technologies we used are photosensors, Arduino and Max.

assignment1 1 from Wanfang Diao on Vimeo.

Suspended Motion is a setup that tends to make the user believe that he/she is in motion on a spinning chair, while in fact the user remains stationary for most of the experience. It is based on a philosophical theme revolving around scientism.

Today, we all live in the Age of Science, and we embrace everything that science brings with it. Just look around and you will find that we are surrounded by technology that was science fiction only some decades ago. But this sometimes leads us to believe that science is the most authoritative worldview: that it has all the answers to our questions, and that it alone can explain the true inner workings of the universe, how the universe came about, how we evolved, or what our purpose in this world is. Suspended Motion gives a different perspective on the topic.

Suspended Motion consists of a rotating chair (in fact, any rotating chair). The user sits on the chair, wears headphones (preferably wireless, or holds the laptop while spinning) and follows the instructions in the sound clip (link below). The user is first instructed to close their eyes, spin the chair and observe how the sound field exactly matches their current position. This is done with angular position data sent to the laptop via OSC from the compass of an iPhone attached to the chair. After about 40 seconds, the user is instructed to give a final push and set off in a decelerating rotation. The user focuses on the sound and experiences Suspended Motion for the last 25 seconds of the spin.
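The core of the head-tracking step can be sketched in a few lines of Python: subtract the chair's heading from the source direction so that the sound stays fixed in the room while the listener turns, then encode the result. This is only an illustration under assumptions, not the Max sketch itself. It uses a traditional first-order, horizontal-only B-format encoding, and it ignores that compass headings increase clockwise while many ambisonic tools measure azimuth counterclockwise, so a sign flip may be needed in practice.

```python
import math


def relative_azimuth(source_deg, heading_deg):
    """Direction of a room-fixed source as seen from the rotated listener."""
    return (source_deg - heading_deg) % 360.0


def encode_first_order(sample, azimuth_deg):
    """Encode a mono sample into horizontal first-order B-format (W, X, Y)."""
    a = math.radians(azimuth_deg)
    w = sample / math.sqrt(2)  # omnidirectional component
    x = sample * math.cos(a)   # front/back component
    y = sample * math.sin(a)   # left/right component
    return (w, x, y)


if __name__ == "__main__":
    # Source straight ahead in the room; the chair has turned to 90 degrees.
    az = relative_azimuth(0.0, 90.0)
    print(az, encode_first_order(1.0, az))
```

Freezing or replaying the heading values while the chair slows down is one way the sound field could keep "moving" after the listener has stopped, though the source does not say exactly how the patch does this.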

This is more of an ‘experience-based’ instrument, so I would urge you to try it yourself using the following setup. You can also just listen to the audio clip to get a sense of how things go.

Audio Clip (Disclaimer: Lock Howl-Storm Corrosion from the 2012 release “Storm Corrosion” is the copyrighted property of its owner(s). )

MAX Sketch

Ambisonics for MAX

Compass Data via GyroOSC (iPhone App)

In order to add extra functionality to Max, you can download and install “3rd party externals”. These are binaries that you download, unzip and place within your Max search path (i.e. in Max, go to Options > File Preferences… and add the folder where you’ll put your 3rd party externals; I recommend a folder called “_for-Max” in your “Documents” folder).

Some helpful examples:

audio streaming related:

Class resources:

Max Resources: