Final Project: Ziyun Peng

FACE YOGA

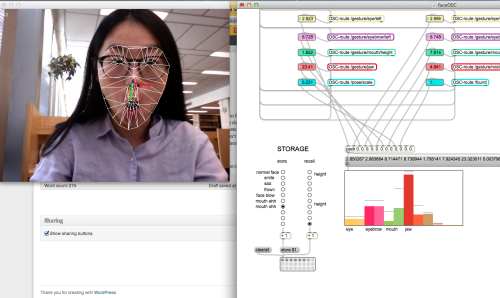

Idea

There’s something interesting about the unattractiveness that one goes through on the path to beauty. You put on a facial mask to moisturize and tone your skin, while it makes you look like a ghost. You do face yoga exercises to get rid of certain lines on your face, but in the meantime you have to make many awkward faces that you definitely wouldn’t want others to see. The Face Yoga Game aims to amplify the funniness and the paradox of beauty by making a game out of one’s face.

Face Yoga Game from kaikai on Vimeo.

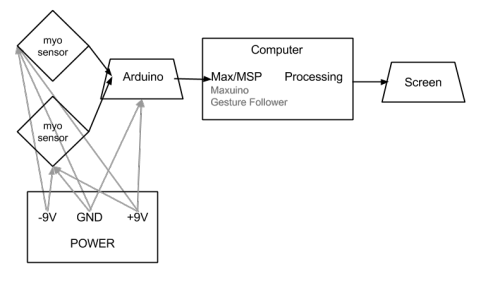

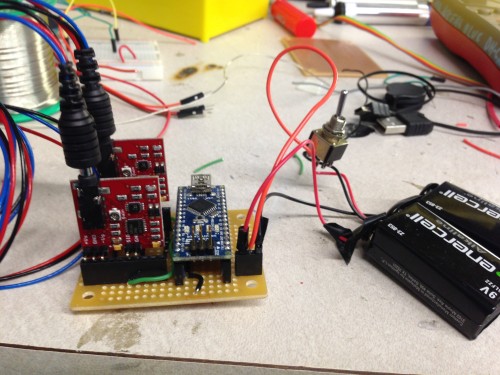

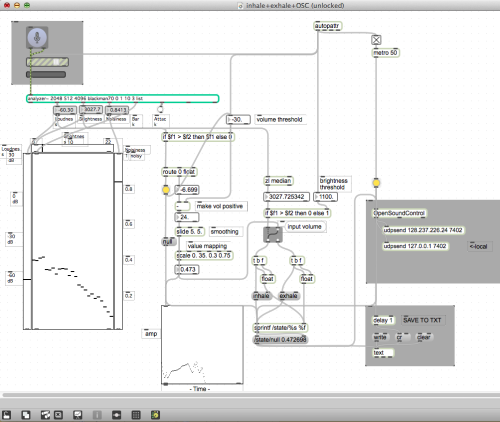

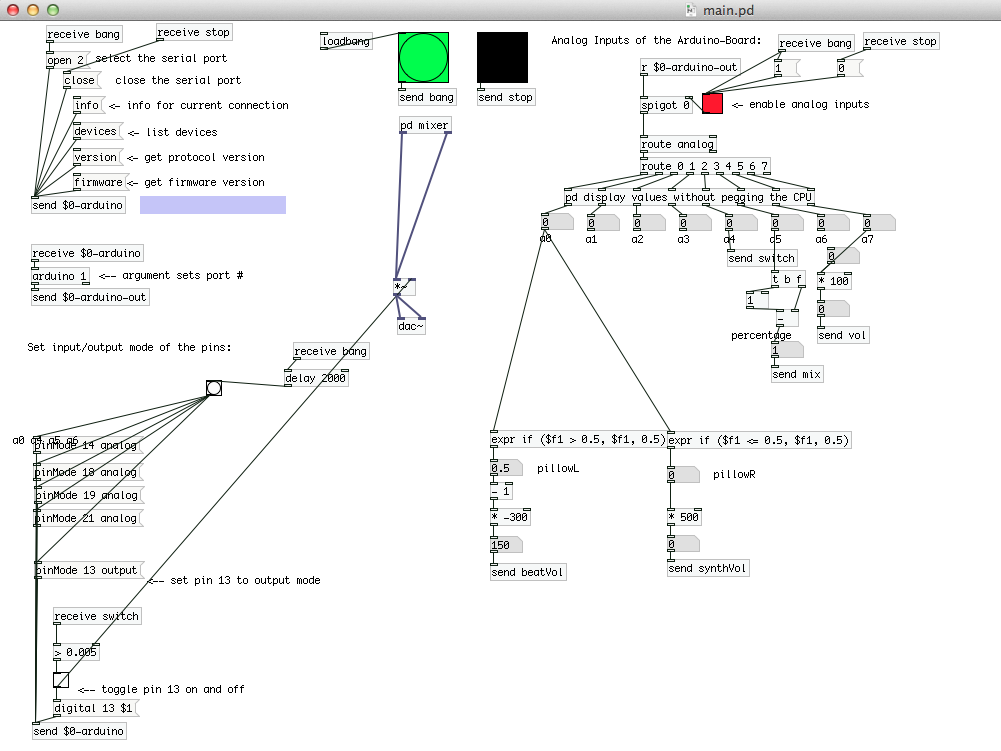

Setup

Learnings

– Machine learning tools: Gesture Follower by IRCAM

This is a very handy tool and very easy to use. There are more features worth digging into and playing with in the future, such as channel weighting and expected speed. I’m glad that I got to apply some basics of machine learning in this project, and I’m certain that it’ll be helpful for my future projects too.

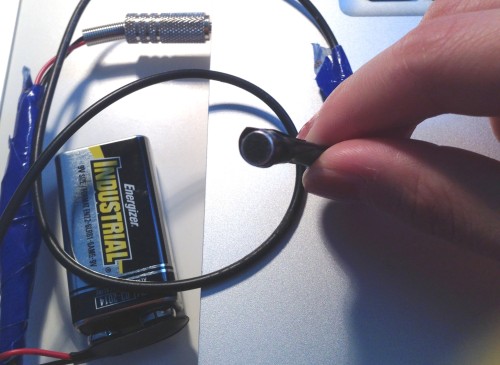

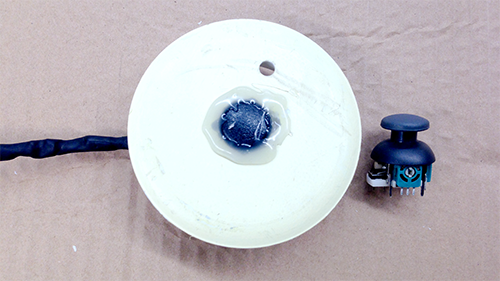

– Conductive fabrics

This is another thing that I’ve had an interest in but never had an excuse to play with. The disappointment in this project was that I had to apply water to the fabric every time I wanted to use it, though that might be a special case for the myoelectric sensor I was using. The performance was also not as good as with the medical electrodes, possibly because of the contact surface, and because the fabric is non-sticky, it moves around while you’re using it.

Obstacles & Potential Improvements

– Unstable performance with the sensors

Although part of this project was to experiment with ways of detecting facial movements without computer vision, the performance wasn’t as good as expected, so a combination of both might be the better solution in the future. One alternative I’ve been imagining is to replace the current mask with a transparent one, so that people can see their facial expressions through it, and to stick colored marker points on it for the computer vision to track. Better lighting would be required, but the vanity lights would still suit this setting.

– User experience and calibration

My ultimate goal is to let everyone join in the fun. However, opening the game to everyone means that the gestures I trained on myself beforehand may not work for every player, and this was confirmed on the show day. It was suggested that I run a calibration at the start of every game, which I think is a very good idea.
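One way such a per-player calibration could look is sketched below in Python. This is only an illustration, not what the project used: it assumes each sensor channel delivers plain numeric readings, and the function names `calibrate` and `normalize` are made up for this example. The idea is to record a few readings with a relaxed face and a few with a full-effort face, then rescale every later reading to a 0..1 range for that player before it reaches the gesture recognizer.

```python
from typing import Iterable, Tuple


def calibrate(rest: Iterable[float], flex: Iterable[float]) -> Tuple[float, float]:
    """Return (baseline, span) from a player's relaxed and full-effort readings.

    Hypothetical helper: baseline is the mean of the relaxed readings,
    span is the distance from that baseline to the strongest flexed reading.
    """
    rest_list = list(rest)
    flex_list = list(flex)
    if not rest_list or not flex_list:
        raise ValueError("need at least one reading for each pose")
    baseline = sum(rest_list) / len(rest_list)
    span = max(flex_list) - baseline
    if span <= 0:
        raise ValueError("flexed readings must exceed the resting baseline")
    return baseline, span


def normalize(reading: float, baseline: float, span: float) -> float:
    """Map a raw reading onto 0..1 for this player, clamping outliers."""
    scaled = (reading - baseline) / span
    return min(1.0, max(0.0, scaled))


if __name__ == "__main__":
    # Made-up numbers standing in for one player's calibration round.
    baseline, span = calibrate(rest=[100, 110, 90], flex=[400, 500, 450])
    print(normalize(300, baseline, span))
```

With values normalized like this, gestures trained on one face would at least be compared against readings on the same scale, although differences in how people move would still remain.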

– Vanity light bar