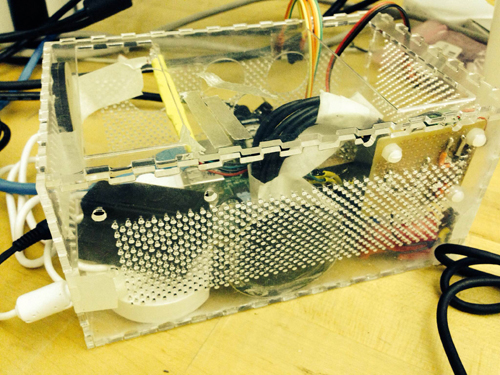

We live in a world surrounded by different environments. Since music and human emotions influence each other, the environment also carries parameters that can take part in that process. In certain contexts, the environment may be a good indicator for generating a “mood” that is transferred into music and affects people’s emotions. The purpose of this project is to build a portable music player box that senses the surrounding environment and generates specific songs for users.

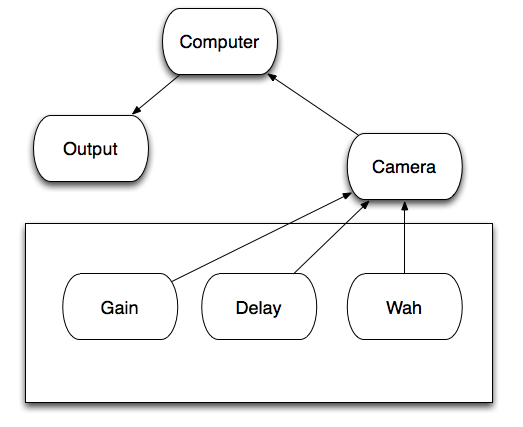

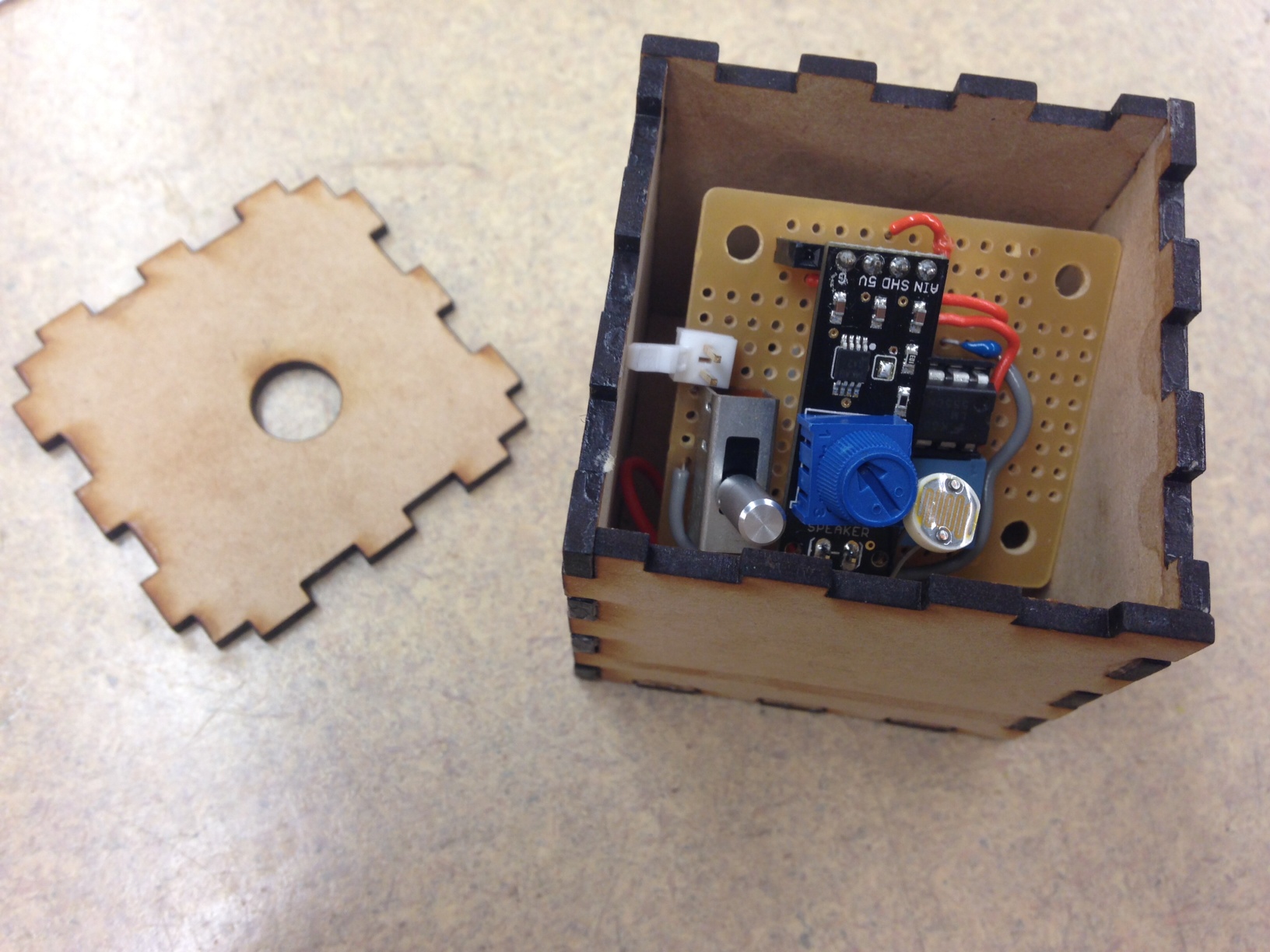

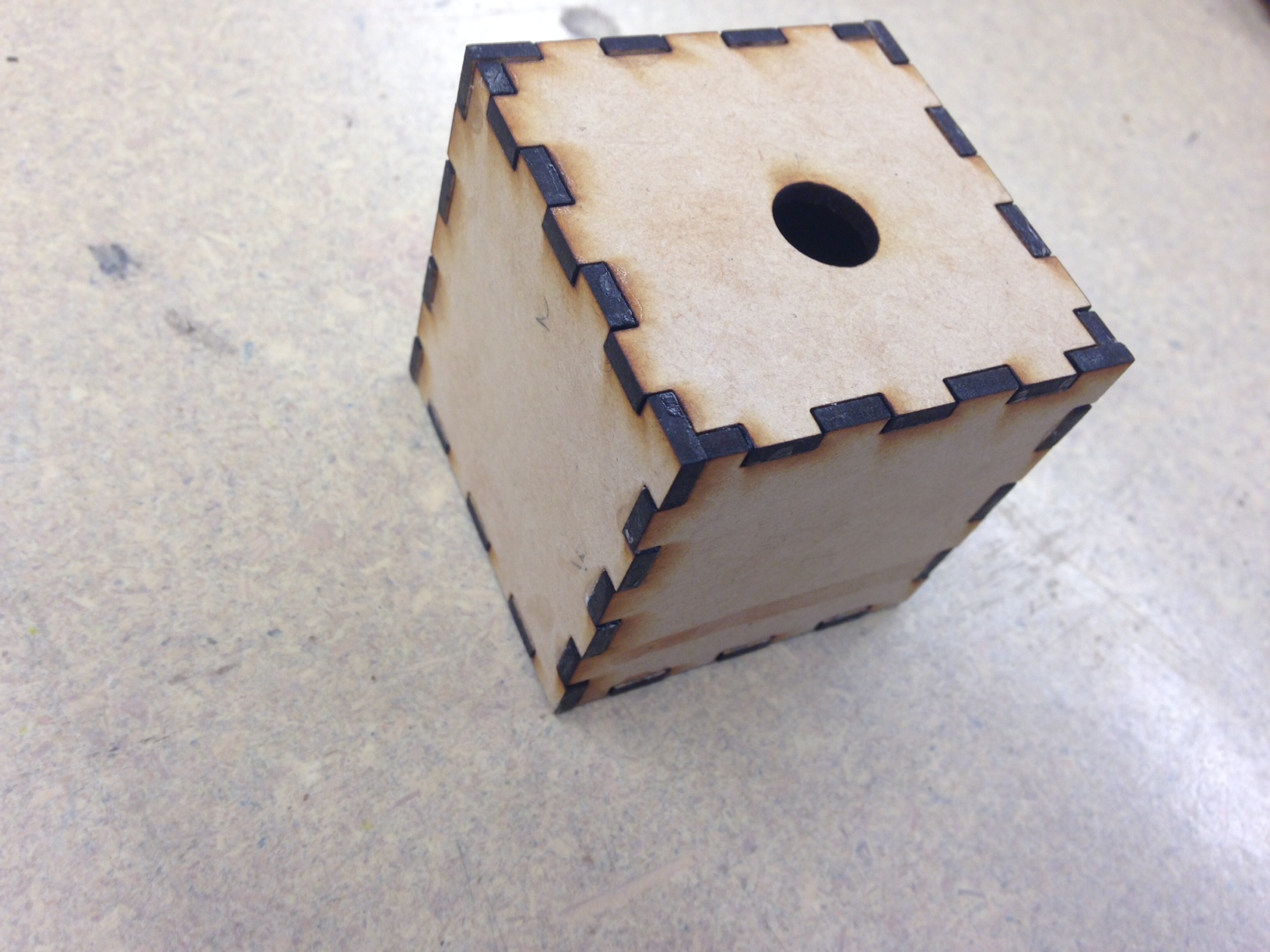

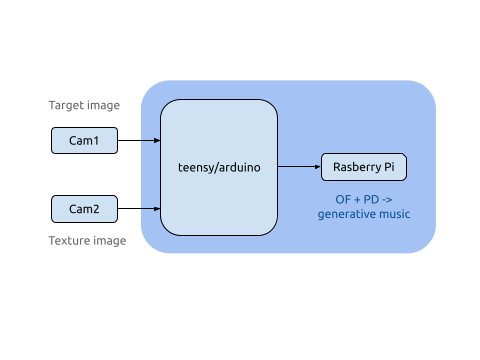

This device has two modes: search mode and generate mode. In the first mode, input data is transformed into specific tags according to its type and value; these tags are then used to describe a series of existing songs to users. In the second mode, two cameras capture ambient images to create a piece of generative music. I will finish the second mode in this class.
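As a rough illustration of the search mode, the sketch below turns sensor readings into tags based on their type and value. The sensor names, thresholds and tag words are all placeholders I made up for the example; the actual tag vocabulary depends on the music service being searched.

```cpp
#include <string>
#include <vector>

// Hypothetical sensor reading: a type name plus a numeric value.
struct Reading {
    std::string type;  // e.g. "temperature" or "light" (assumed names)
    double value;      // in whatever unit the sensor reports
};

// Map one reading to a tag. Thresholds and tag words are placeholders.
std::string tagFor(const Reading& r) {
    if (r.type == "temperature") {
        if (r.value < 10.0) return "cold";
        if (r.value < 25.0) return "mild";
        return "warm";
    }
    if (r.type == "light") {
        return r.value < 200.0 ? "dark" : "bright";
    }
    return "neutral";  // unknown sensor types fall back to a generic tag
}

// Collect one tag per reading, in the same order as the input.
std::vector<std::string> tagsFor(const std::vector<Reading>& readings) {
    std::vector<std::string> tags;
    for (const Reading& r : readings) {
        tags.push_back(tagFor(r));
    }
    return tags;
}
```

The resulting tag list could then be passed to whatever song search is used; that step is not shown here.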

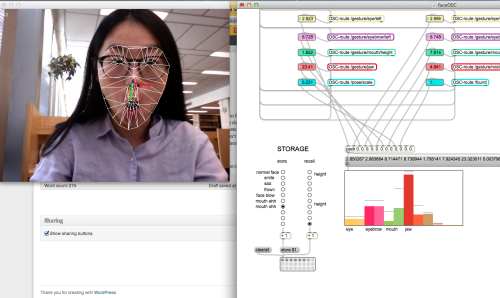

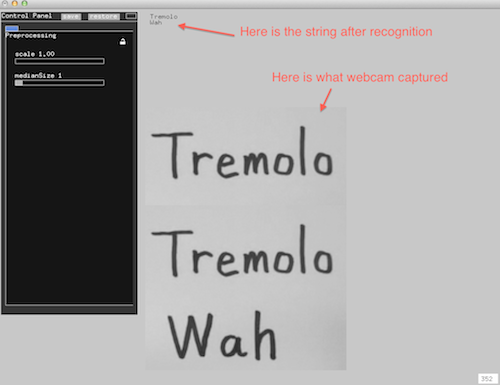

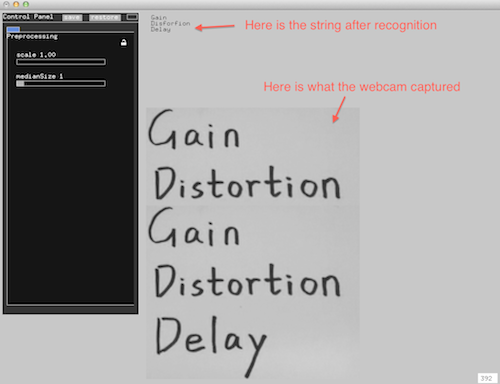

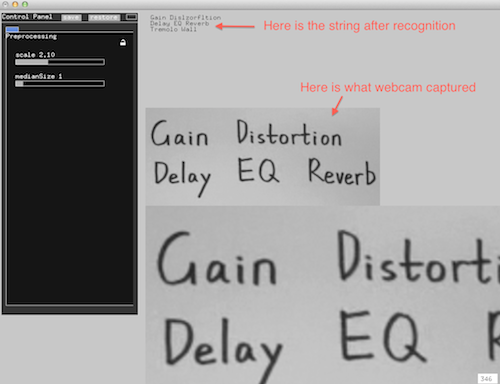

In mode 2, each piece of music uses two types of images. The first is a music image captured by an adjustable camera; its content is divided into several blocks according to their edge pixels, and each block generates notes according to its color. The second is a texture image captured by a camera with a pocket microscope. When the device is placed on different materials, the corresponding texture is recognized and a different instrument filter is applied to the music.
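One possible way to turn a block's color into a note is to take its average RGB value, compute the hue, and pick a pitch from a fixed scale. This is only a sketch under my own assumptions: the choice of hue as the driving parameter, the C major pentatonic scale and the function names `hueOf` and `noteForColor` are illustrative, and the edge-based block splitting is not shown.

```cpp
#include <algorithm>
#include <array>
#include <cmath>

// Hue in degrees [0, 360) from 8-bit RGB; grey pixels return 0.
double hueOf(int r, int g, int b) {
    const double rf = r / 255.0, gf = g / 255.0, bf = b / 255.0;
    const double mx = std::max({rf, gf, bf});
    const double mn = std::min({rf, gf, bf});
    const double d = mx - mn;
    if (d == 0.0) return 0.0;  // no dominant channel, hue is undefined
    double h;
    if (mx == rf)      h = std::fmod((gf - bf) / d, 6.0);
    else if (mx == gf) h = (bf - rf) / d + 2.0;
    else               h = (rf - gf) / d + 4.0;
    h *= 60.0;
    return h < 0.0 ? h + 360.0 : h;
}

// Map a block's average color to a MIDI note number.
// Assumption: five hue bands, one per degree of a C major pentatonic
// scale starting at middle C (MIDI 60).
int noteForColor(int r, int g, int b) {
    static const std::array<int, 5> scale = {0, 2, 4, 7, 9};
    const int step = static_cast<int>(hueOf(r, g, b) / 360.0 * scale.size());
    const int idx = std::min<int>(step, scale.size() - 1);
    return 60 + scale[idx];
}
```

Restricting the output to a pentatonic scale is a design choice that keeps arbitrary color combinations from sounding too dissonant; any other scale table could be swapped in.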

Below is a list of things I finished in the first week:

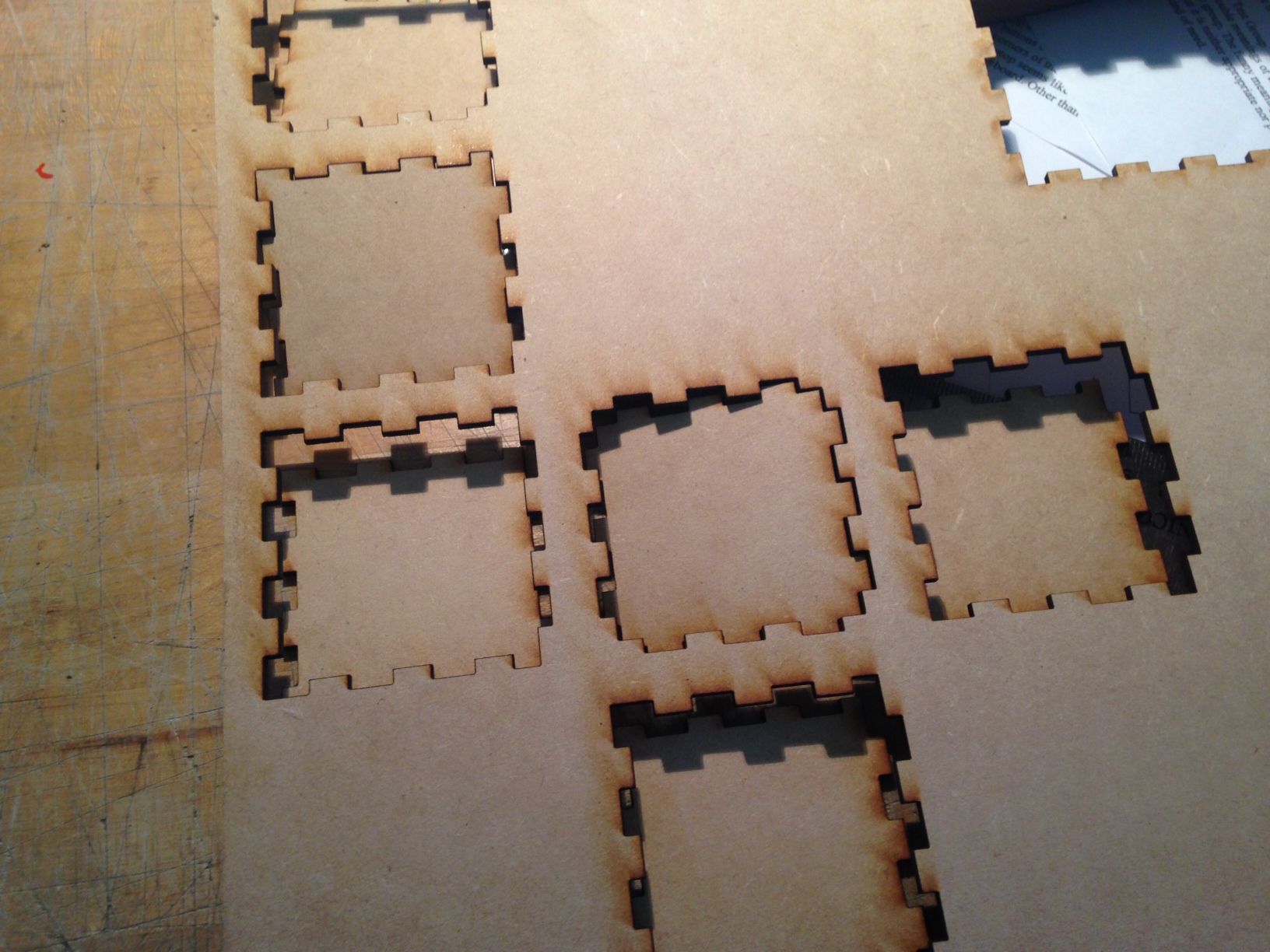

- System design

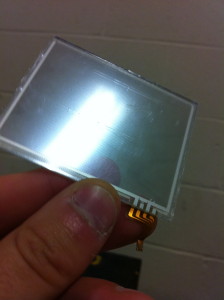

- Platform test and selection of specific hardware and software for the project

- Getting familiar with Raspberry Pi OS and openFrameworks

- Experiments on data transmission among gadgets

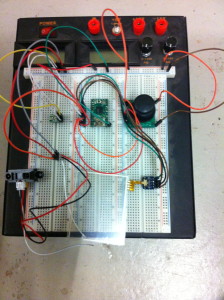

- Serial communication between Arduino/Teensy and openFrameworks

- Getting video from a camera and playing sound in openFrameworks

Platform test:

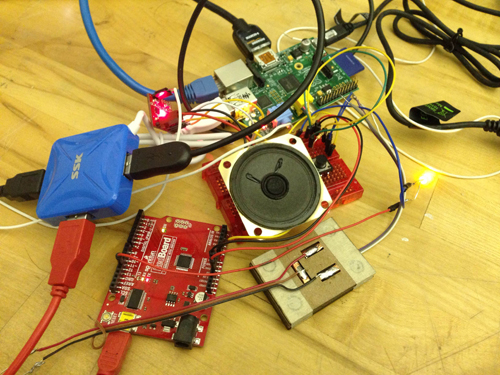

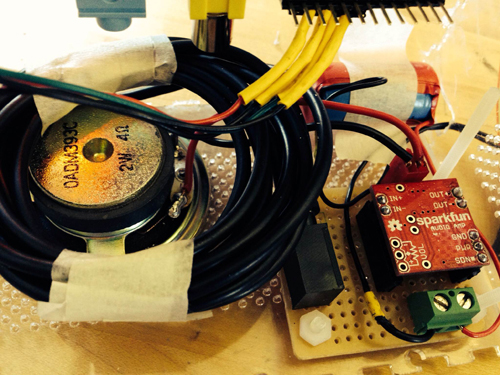

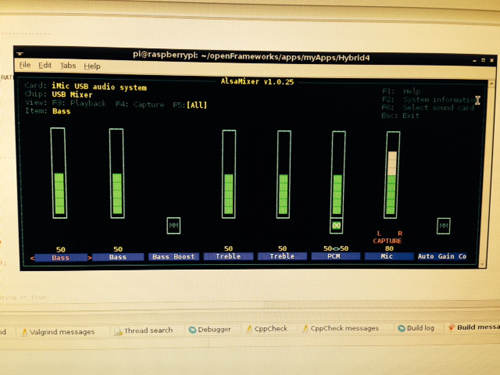

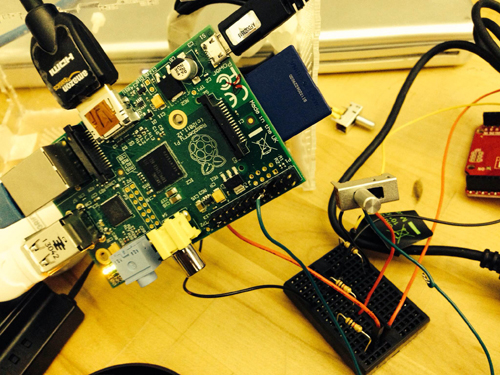

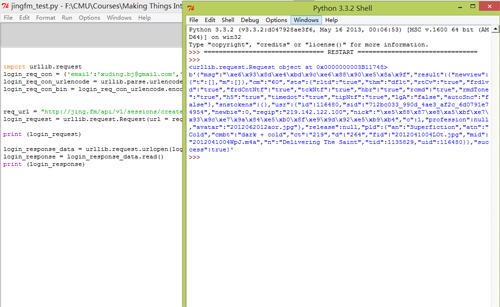

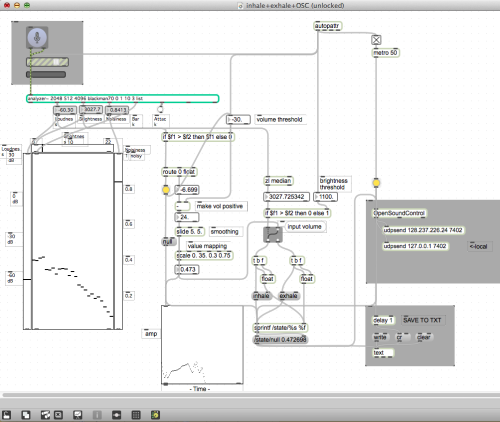

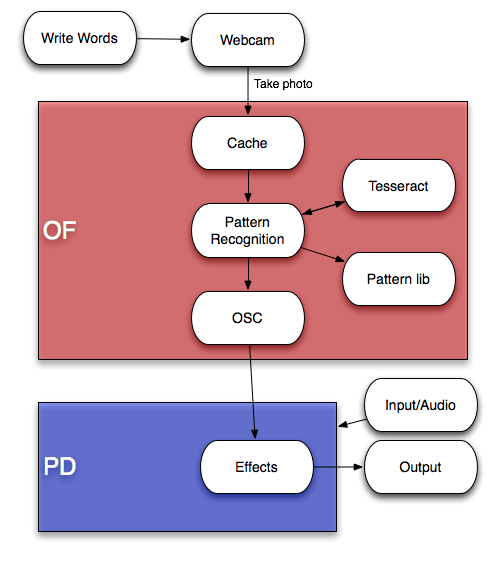

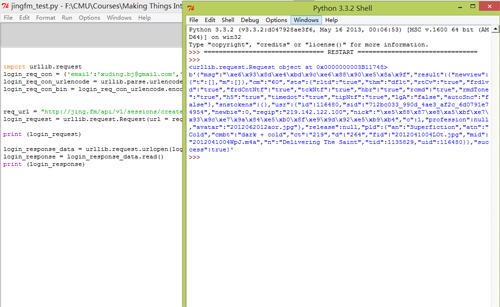

Since this device is a portable box that needs to run without the support of a PC, I want to use a Raspberry Pi, an Arduino and sensors (including a camera and data sensors) for this project. As for the software platform, I chose openFrameworks as the main development platform and plan to use PD to generate sound notes, connecting it with openFrameworks for control. Moreover, I am more comfortable with C++ than with Python, since it took me much more time to get an HTTP request for logging into Jing.FM working in Python.

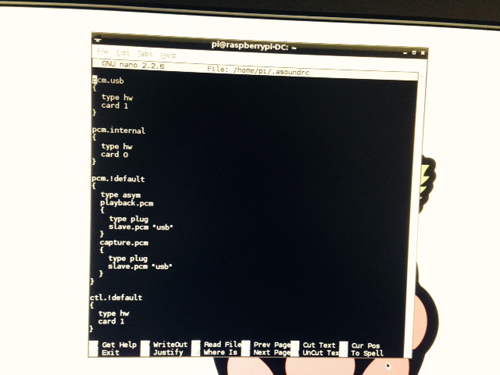

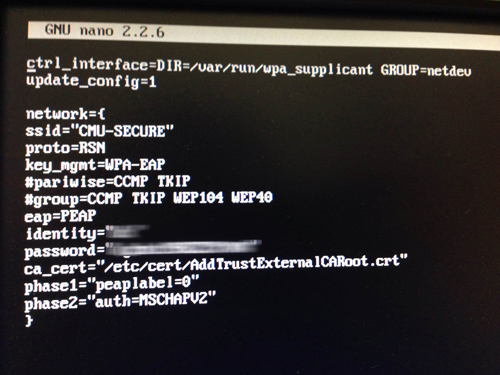

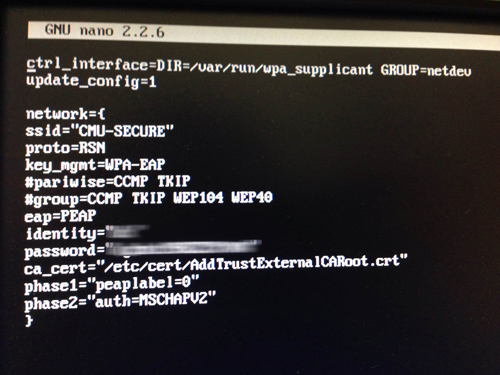

Raspberry Pi Network setting

As a newcomer to the Raspberry Pi without any knowledge of Linux, I ran into problems connecting the Pi to the CMU-secure Wi-Fi, since the majority of tutorials explain how to connect the Pi to a router, and CMU-secure is not that case. Finally, I figured it out with the help of a website explaining the specific parameters in wpa_supplicant.conf, the information provided by the CMU Computing Services website, and copying the certificate file onto the Pi. I also want to mention that every device that uses CMU Wi-Fi needs to be registered at netreg.net.cmu.edu/, and the registration takes effect after 30 minutes.

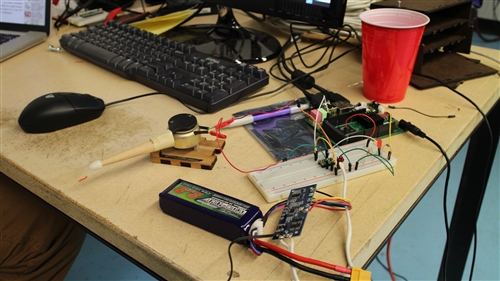

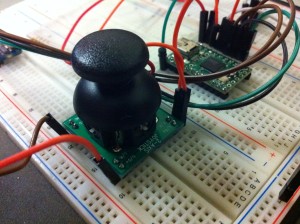

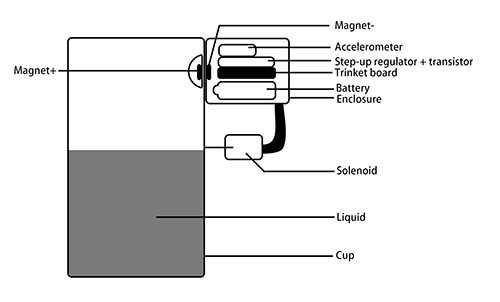

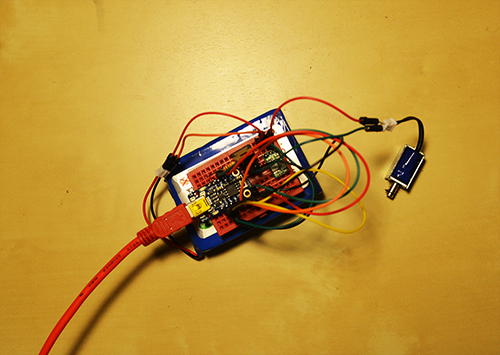

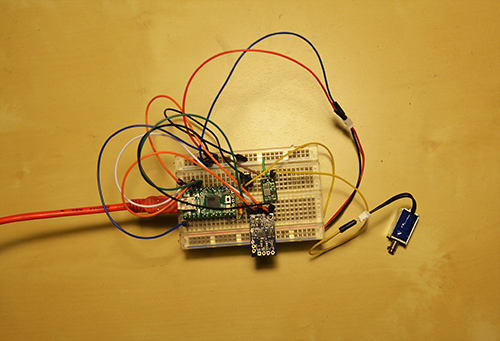

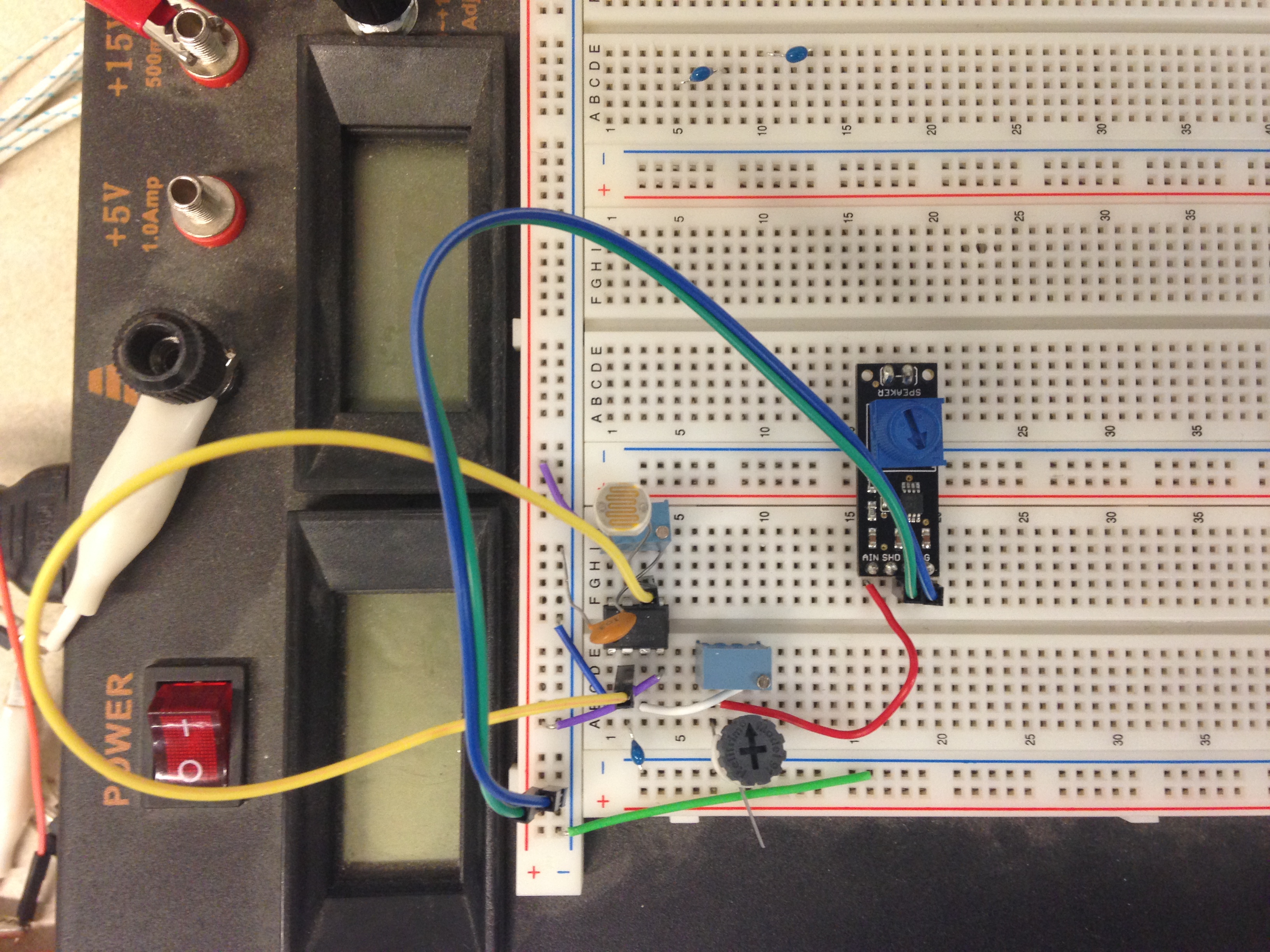

Data transmission between gadget and Arduino

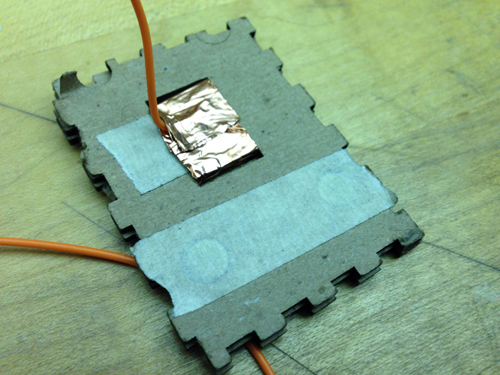

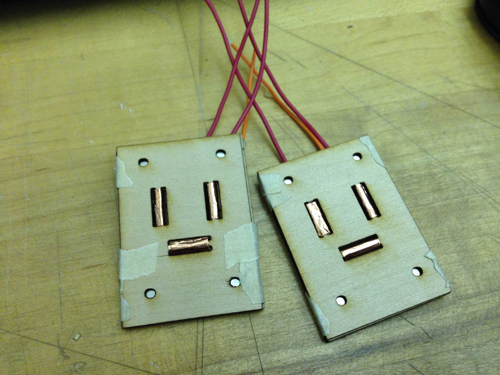

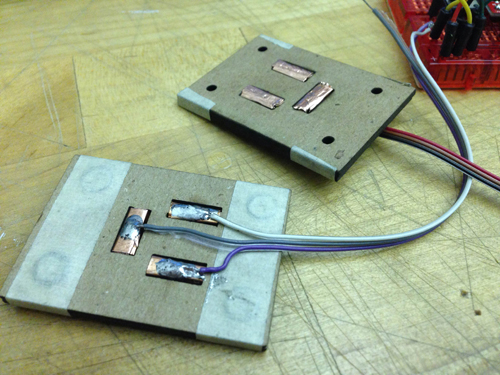

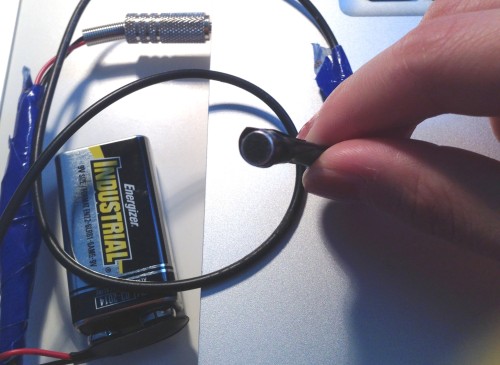

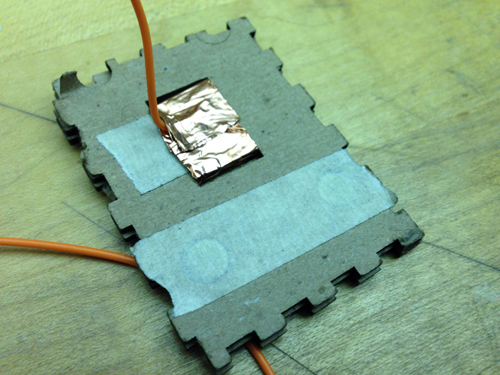

Experiment 1: Two small magnets attached in the gadget, with a height of 4 mm

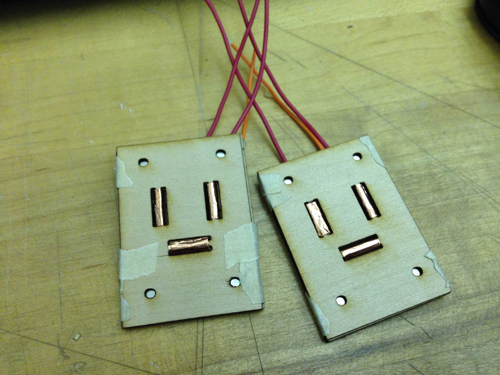

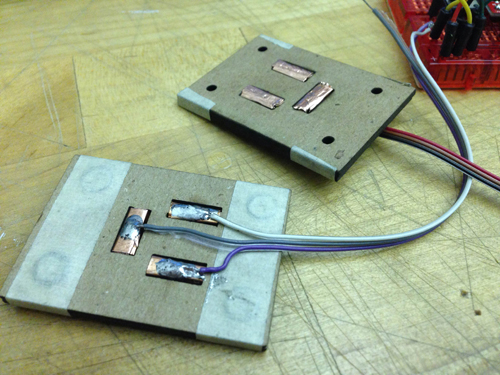

Experiment 2: Four magnets attached in the gadget, with heights of 2.9 mm and 3.7 mm

Prototype 2 makes it easier to attach the gadgets together, but all of these gadgets fail to transmit accurate data unless their two sides are pressed. A new structure needs to be built to solve this problem.

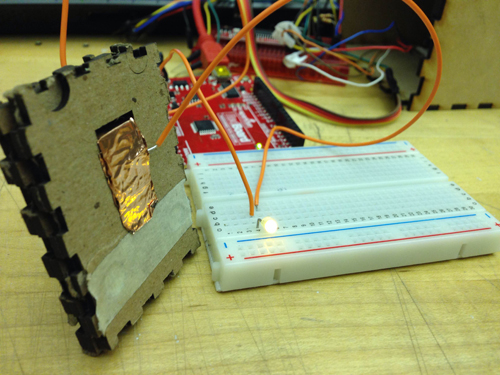

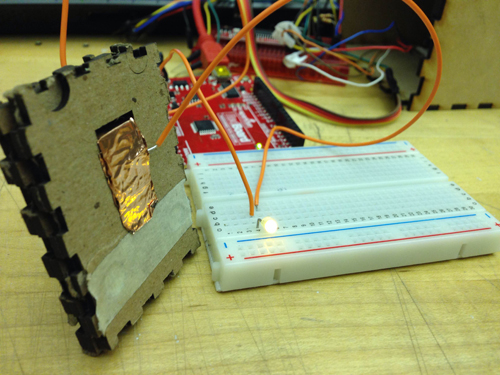

Connecting Arduino with openFrameworks over serial communication

Mapping 2 music to different sensor input data value
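To make the serial step more concrete, here is a minimal sketch of how one line of sensor data could be parsed and mapped to a musical value. It assumes a simple `name:value` text protocol sent by the Arduino and a 10-bit reading (0 to 1023); both are my assumptions for illustration, as are the names `SensorSample`, `parseLine`, `mapRange` and `noteForReading` and the chosen note range. In openFrameworks the bytes would come from `ofSerial`, and `ofMap` offers a similar range mapping; the code below uses only the standard library so it can be tried on its own.

```cpp
#include <algorithm>
#include <cmath>
#include <exception>
#include <string>

// One parsed sample from the assumed "name:value" line protocol.
struct SensorSample {
    std::string name;
    int value;
};

// Parse a single line such as "A0:512". Returns false on malformed input
// and leaves `out` untouched in that case.
bool parseLine(const std::string& line, SensorSample& out) {
    const std::string::size_type colon = line.find(':');
    if (colon == std::string::npos || colon == 0) return false;
    try {
        const int v = std::stoi(line.substr(colon + 1));
        out.name = line.substr(0, colon);
        out.value = v;
        return true;
    } catch (const std::exception&) {
        return false;  // value part was empty or not a number
    }
}

// Linearly map v from [inMin, inMax] to [outMin, outMax], clamping the
// result so out-of-range readings stay inside the output range.
double mapRange(double v, double inMin, double inMax,
                double outMin, double outMax) {
    double t = (v - inMin) / (inMax - inMin);
    t = std::max(0.0, std::min(1.0, t));
    return outMin + t * (outMax - outMin);
}

// Assumption: raw readings span 0..1023 and are mapped onto the
// two octaves of MIDI notes from 48 to 72.
int noteForReading(int raw) {
    return static_cast<int>(std::lround(mapRange(raw, 0.0, 1023.0, 48.0, 72.0)));
}
```

A text protocol is easier to debug in a serial monitor than raw bytes, at the cost of a little bandwidth; for a handful of sensors that trade-off seems reasonable.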