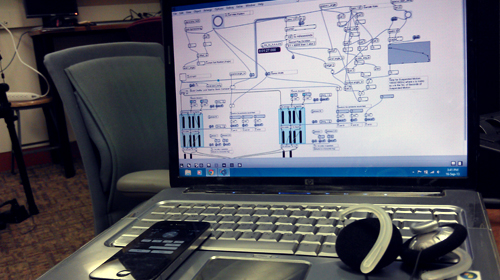

Group Project: Multi-Channel Sound System (part 1)

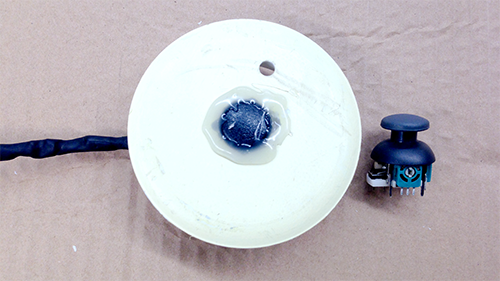

- A mobile disk that can be worn (as a hat, etc.) – “Ambiance Capture Headset/Scenes from a Memory”

Every day, we move from place to place as our motivations drive us. Home, road/car, office/school, library, park, cafeteria, bar, nightclub, a friend’s place, a quiet night: each has a different ambiance, and a change of environment is usually a good thing. It may soothe us, or trigger a certain personal mode (a work mode, a social mode, or a party mode). What if we could capture this ambiance in a ‘personalized’ way and recreate it around us whenever we want? Introducing the Ambiance Capture Headset. The headset carries a microphone array and records all the audio around you; it may catalog this audio using GPS data. You come home, connect the headset to your laptop and an 8-speaker circle, and after processing the audio (extracting only the ambiance, using differences in amplitude, correlation in time, etc.), the system lets you choose the ambiance you want. You can quickly recall your day by sweeping through the recordings and re-experiencing where you’ve been.
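As a rough illustration of the "differences in amplitude and correlation in time" idea, the sketch below flags a short frame from two microphones as ambient when the two channels are weakly correlated and similar in level. It rests on assumptions that are not part of the proposal: that diffuse ambiance shows up as low inter-channel correlation, that a nearby direct source shows up as high correlation or a large level difference, and that comparing at zero lag is enough. The function names and thresholds are hypothetical.

```python
import math


def normalized_correlation(a, b):
    """Zero-lag normalized cross-correlation of two equal-length frames."""
    mean_a = sum(a) / len(a)
    mean_b = sum(b) / len(b)
    num = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    den = math.sqrt(
        sum((x - mean_a) ** 2 for x in a) * sum((y - mean_b) ** 2 for y in b)
    )
    return num / den if den > 0 else 0.0


def rms(frame):
    """Root-mean-square level of a frame."""
    return math.sqrt(sum(v * v for v in frame) / len(frame))


def looks_ambient(frame_a, frame_b, max_corr=0.5, max_level_ratio=2.0):
    """Heuristic: weak correlation and similar levels suggest diffuse ambiance.

    max_corr and max_level_ratio are illustrative thresholds, not tuned values.
    """
    level_a, level_b = rms(frame_a), rms(frame_b)
    if min(level_a, level_b) == 0:
        return False  # a silent channel gives no usable comparison
    corr = abs(normalized_correlation(frame_a, frame_b))
    level_ratio = max(level_a, level_b) / min(level_a, level_b)
    return corr <= max_corr and level_ratio <= max_level_ratio
```

A real system would likely compare every pair of microphones in the array and search over a range of time lags rather than only lag zero.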

- Head-sized disk with speakers positioned evenly around the disk. Facing inward – “Circle of Confusion”

In this section, our ideas tend to fall into two categories: either using the setup to confuse a listener’s perception of the world around them, or enhancing it in some way. In the first scenario, one idea is for each speaker to amplify sounds that are occurring 180 degrees away from it, so that the listener experiences a sonic environment that is essentially reversed from reality.
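The reversal idea can be sketched as a simple channel remapping. This assumes, beyond what is stated here, that the disk has one outward-facing microphone per speaker, that both are evenly spaced and share the same indexing, and that the channel count is even (8 is used only as an example). The names `opposite_speaker` and `reverse_frame` are hypothetical.

```python
def opposite_speaker(mic_index, n_channels=8):
    """Index of the speaker diametrically opposite a given microphone."""
    if n_channels % 2 != 0:
        raise ValueError("an exact 180-degree opposite needs an even channel count")
    return (mic_index + n_channels // 2) % n_channels


def reverse_frame(frame):
    """Reroute one multichannel sample frame so each sound plays from the far side.

    frame[i] is the sample captured by microphone i; the result holds
    the sample to send to each speaker.
    """
    n = len(frame)
    out = [0.0] * n
    for mic, sample in enumerate(frame):
        out[opposite_speaker(mic, n)] = sample
    return out
```

For example, with 8 channels a sound picked up by microphone 0 would play from speaker 4, directly across the listener’s head.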

- Speakers hanging from the ceiling in arbitrary shapes – “EARS”

Sometimes you just need someone to listen to you, maybe a few ears to hear you out: a secret, a desire, an idea, a confession. This is a setup that connects with people and lets them express what they want. It’s a room you walk into that has speakers suspended from the ceiling. You raise your hand towards one, and when that speaker senses you coming near, it descends to your mouth level so you can talk or whisper into it (speakers can act as microphones as well, or we may attach a mic to each). You may tell different things to the different speakers, and once you’ve said all you want, you hear what the speakers have heard before. What you hear is chosen by the current position of the speakers, as all speakers start to descend if you try to touch them. All voices are processed, for example through a vocoder, to protect people’s identities. Having heard other people’s wishes, problems, inspirations, and hopes, you probably feel lighter than you did before.

- A 3D setup (perhaps a globe-shaped setup) – “World Cut”

You enter an 8-speaker circle with a globe in front of you. You spin the globe and input a particular planar intersection of the world; this plane ‘cuts’ through a number of countries/locations. Each intersected location maps to a corresponding direction in our circle, so you hear music/voices/languages from the whole ‘cut’ at once in our 8-speaker circle. You can spin the globe and explore the world in the most peculiar of ways.
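One way the mapping from a cut to speaker directions might work is sketched below. It assumes several things the description leaves open: the cutting plane passes through the globe’s center (so the cut is a great circle) and is given by a normal vector, a location counts as "on the cut" if it lies within a small angular tolerance of the plane, and the zero direction within the plane is arbitrary. All function names and the 5-degree tolerance are hypothetical.

```python
import math


def to_unit_vector(lat_deg, lon_deg):
    """Convert latitude/longitude in degrees to a 3D unit vector."""
    lat, lon = math.radians(lat_deg), math.radians(lon_deg)
    return (math.cos(lat) * math.cos(lon), math.cos(lat) * math.sin(lon), math.sin(lat))


def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def cross(a, b):
    return (a[1] * b[2] - a[2] * b[1], a[2] * b[0] - a[0] * b[2], a[0] * b[1] - a[1] * b[0])


def normalize(a):
    length = math.sqrt(dot(a, a))
    return tuple(x / length for x in a)


def plane_basis(normal):
    """Return the unit normal n and two orthonormal vectors u, v spanning the plane."""
    n = normalize(normal)
    # Pick a helper axis that is not nearly parallel to the normal.
    helper = (0.0, 0.0, 1.0) if abs(n[2]) < 0.9 else (1.0, 0.0, 0.0)
    u = normalize(cross(helper, n))
    v = cross(n, u)
    return n, u, v


def speaker_for_location(lat_deg, lon_deg, normal, n_speakers=8, tolerance_deg=5.0):
    """Speaker index for a location on the cut, or None if it is off the cut."""
    n, u, v = plane_basis(normal)
    p = to_unit_vector(lat_deg, lon_deg)
    # Angular distance of the location from the cutting plane.
    off_plane = math.degrees(math.asin(min(1.0, abs(dot(p, n)))))
    if off_plane > tolerance_deg:
        return None
    # Angle of the location within the plane, quantized to the nearest speaker.
    angle = math.atan2(dot(p, v), dot(p, u)) % (2 * math.pi)
    return round(angle / (2 * math.pi / n_speakers)) % n_speakers
```

With the normal `(0, 0, 1)` the cut is the equator: two equatorial locations 180 degrees of longitude apart land on opposite speakers, and a location near a pole returns `None` because it is not on the cut.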