Final Project “TAPO”: Liang

TAPO: Speak Rhythms Everywhere

Idea Evolution:

This project started from the idea that people could make rhythms by interacting with cups, using the cups' resonant properties and material. As the project progressed, it became more interesting and more natural for people to input rhythms by speaking than by gesturing on cups. The context also extended from cups to any surface, since every object has its own resonant properties and material. As a result, the final design and function of TAPO changed significantly from the original idea. The new story is:

“Physical objects have resonant properties and specific materials. Tapping an object gives distinct sound feedback and a distinct percussion experience. People are used to making rhythms by beating on objects. So why not provide a tangible way that not only allows people to make rhythms with the physical objects around them, but also enriches the experience through computational methods? The ultimate goal of this project is for ordinary people to make and play rhythms with everyday objects, and even to give a percussion performance.”

Design & Key Features:

TAPO is an autonomous device that generates rhythms from people’s input (speech, tapping, making noise). TAPO can be placed on different surfaces, such as a desk, paper, the ground, a wall, or a window. Depending on the material and the resonant properties of the object, it produces different sound qualities. The user’s input supplies the rhythm pattern.
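To illustrate how a spoken or tapped input could become a rhythm pattern, here is a minimal sketch in Python. It is not the firmware running on TAPO. It assumes the microphone signal is already available as a list of loudness levels, and the function names, the threshold, and the `refractory_s` debounce window are all hypothetical choices made for this example.

```python
def detect_onsets(levels, sample_rate_hz, threshold, refractory_s=0.05):
    """Return the times (in seconds) at which the level rises above threshold.

    levels: loudness values sampled at sample_rate_hz (assumed input format).
    refractory_s: hypothetical debounce window; rises that follow the previous
    onset more closely than this are ignored.
    """
    onsets = []
    was_above = False
    for i, level in enumerate(levels):
        t = i / sample_rate_hz
        is_above = level >= threshold
        # Count an onset only on a rising edge that is outside the debounce window.
        if is_above and not was_above:
            if not onsets or t - onsets[-1] >= refractory_s:
                onsets.append(t)
        was_above = is_above
    return onsets


def onsets_to_intervals(onsets):
    """Turn onset times into the gaps between consecutive beats."""
    return [later - earlier for earlier, later in zip(onsets, onsets[1:])]
```

The list of intervals is the rhythm pattern in this sketch: replaying it means striking the surface once, then waiting for each interval in turn before the next strike.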

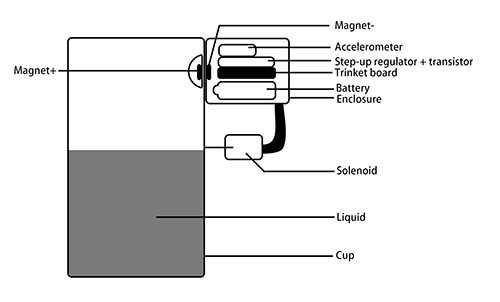

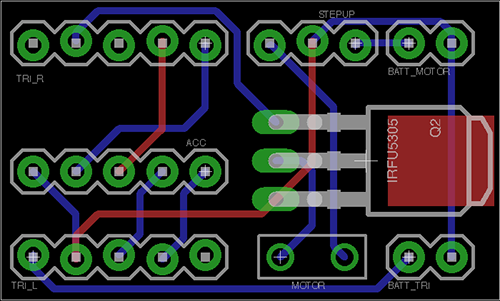

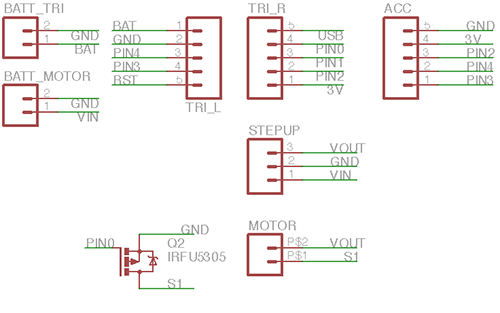

System diagram

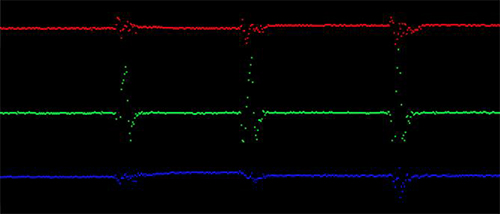

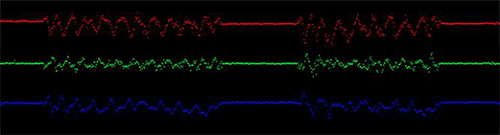

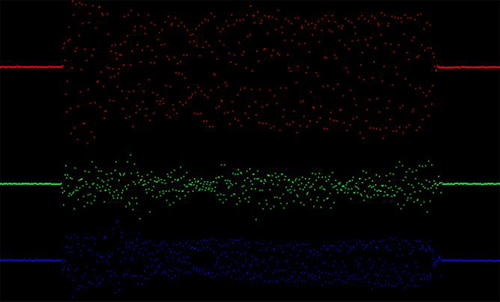

a) voice, noise, oral rhythm, beat, kick, knock, oral expression… can all serve as user input

b) use a photoresistor to trigger recording

c) remove the accelerometer; add an LED to indicate the recording and rhythm-playback states
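The interaction described in the notes above can be pictured as a small state machine. The sketch below is only one possible reading, written in Python rather than as Trinket firmware. It assumes that covering the photoresistor (a low light reading) starts recording and that uncovering it starts playback. The state names, the `dark_threshold` parameter, and the LED colours are hypothetical.

```python
from enum import Enum


class State(Enum):
    IDLE = "idle"
    RECORDING = "recording"
    PLAYING = "playing"


# Hypothetical LED colours for each state; the actual colours are not specified.
LED_COLOURS = {
    State.IDLE: "off",
    State.RECORDING: "red",
    State.PLAYING: "green",
}


def next_state(state, light_level, dark_threshold, has_pattern):
    """Choose the next state from the photoresistor reading.

    Assumption: a reading below dark_threshold means the sensor is covered,
    which starts (or continues) recording. Uncovering it after a recording
    starts playback if at least one beat was captured.
    """
    if light_level < dark_threshold:
        return State.RECORDING
    if state is State.RECORDING:
        return State.PLAYING if has_pattern else State.IDLE
    return state
```

In a main loop, the device would call `next_state` on every sensor reading and set the LED to `LED_COLOURS[state]`, so the light always reflects whether TAPO is listening or playing.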

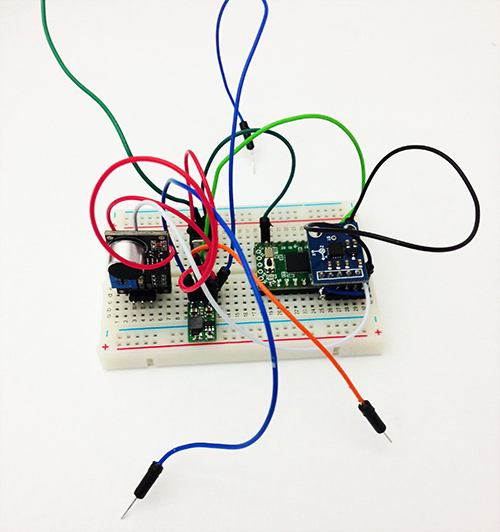

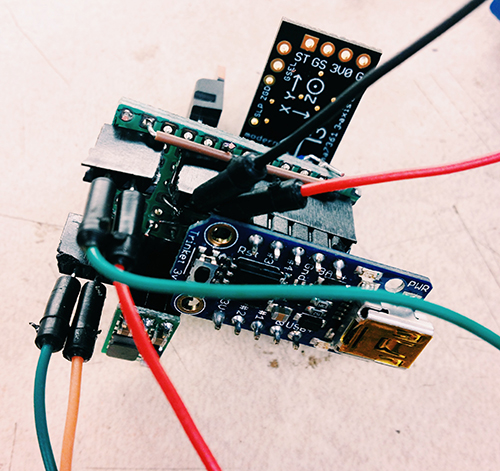

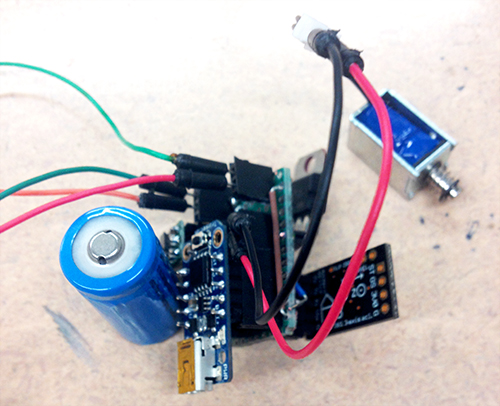

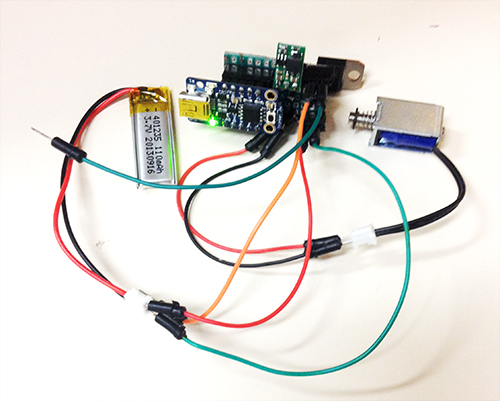

Hardware

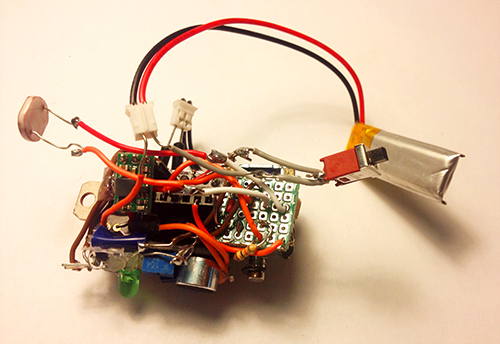

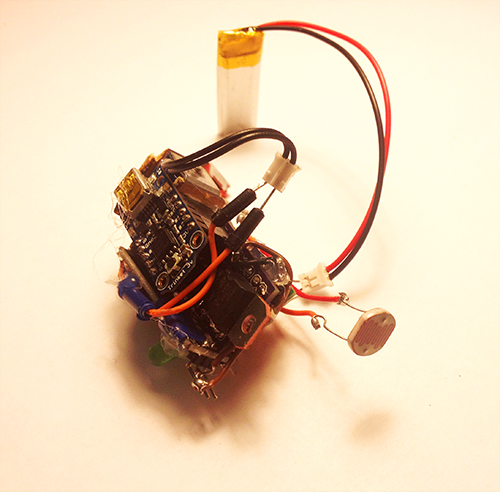

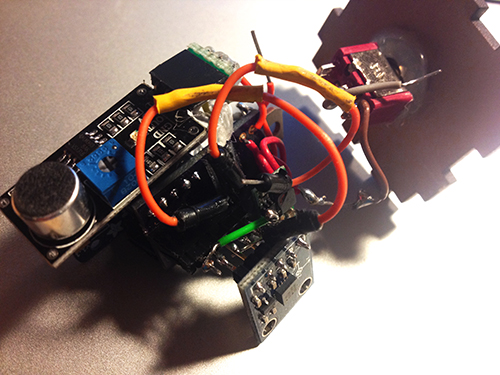

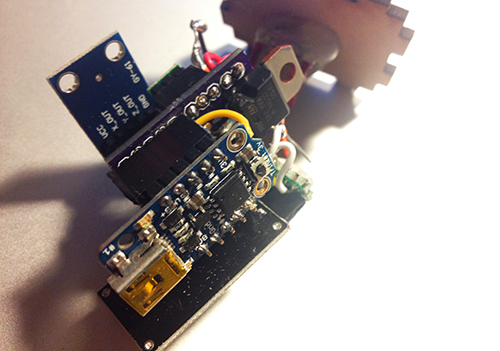

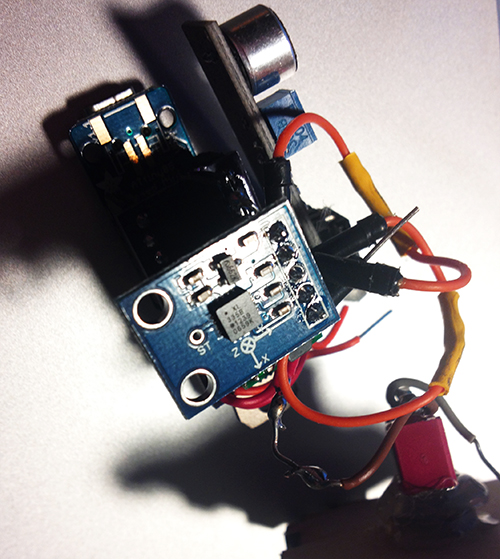

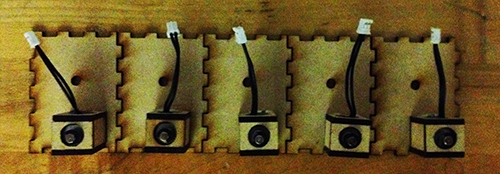

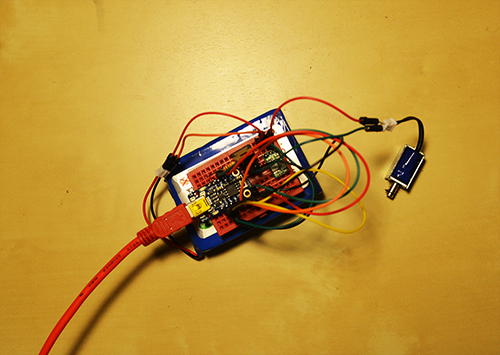

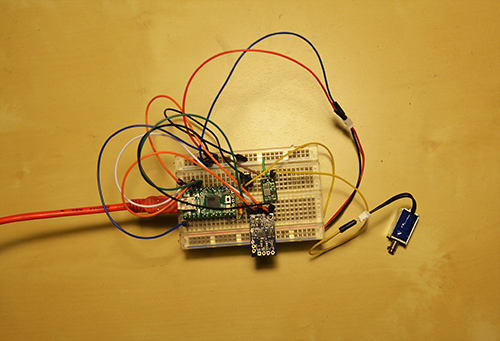

TAPO is composed of several hardware components: a solenoid, an electret microphone, a transistor, a step-up voltage regulator, a Trinket board, a colour LED, a photocell, a switch, and a battery.

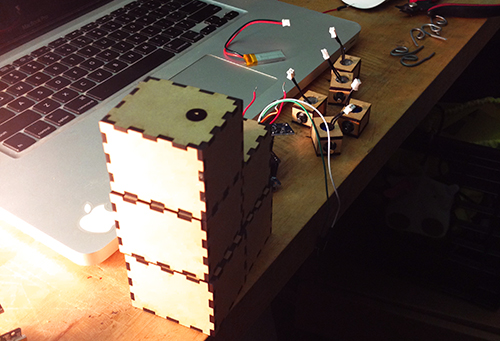

Fabrication

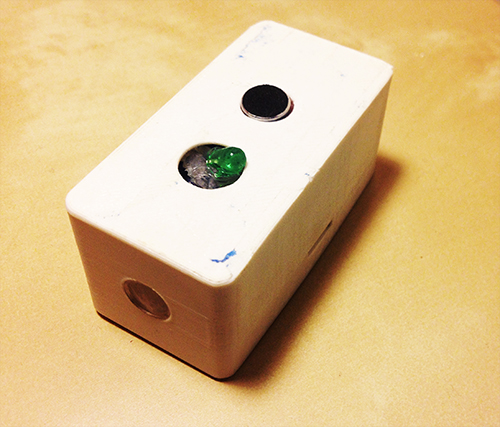

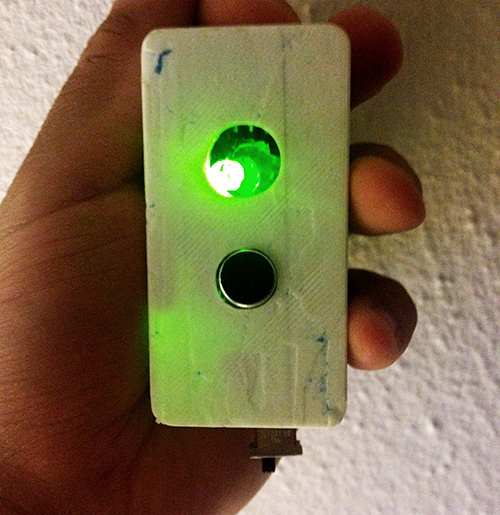

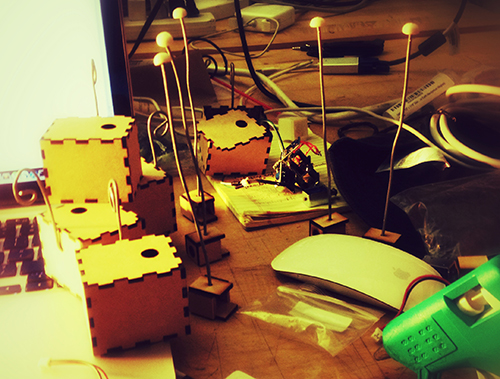

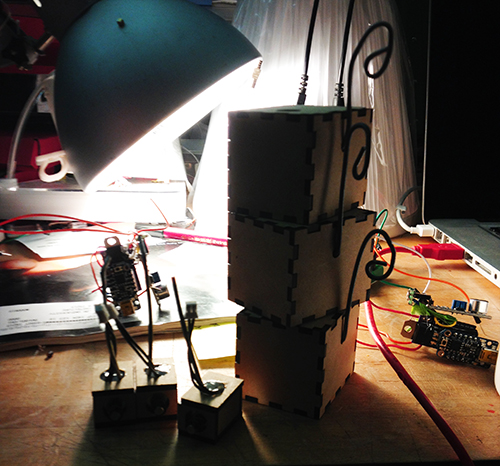

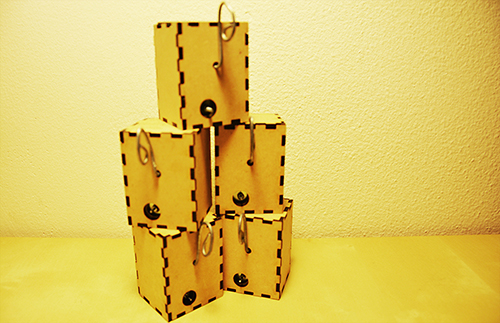

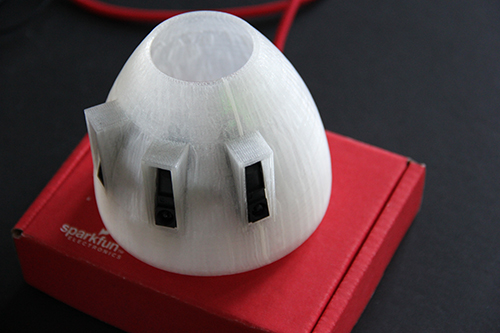

I used a 3D-printed enclosure to package all the parts together. The holes of different sizes on the bottom serve different purposes: people can mount a hook or a suction cup in them. With these attachments, TAPO can be placed on any surface. The other large hole lets the solenoid strike the surface. The two holes on the top expose the microphone and the LED, respectively. On the sides, there are holes for the photoresistor and the switch.

The finished TAPO looks like this:

Demonstration:

Final introduction video:

Conclusion & Future Work:

This project gave me much more than technical skills. I learned how to design and develop something from a very raw idea while continually thinking about its value, target users, and possible scenarios in a quick, iterative process. I really enjoyed the critique sessions, even though they were tough and sometimes left me disappointed. The constructive suggestions were consistently helpful and pointed me in a better, higher-level direction. Through these conversations I recognised my problems with motivation, design, and storytelling. Fortunately, the project became much more coherent, from the design thinking through to demonstrating its value, and I felt better whenever a more valuable and reasonable idea came to mind. The project also taught me how important it is to demonstrate my work when it is hard to describe and explain. At the public show on Dec. 6th, I found that people liked to play with TAPO and try different inputs; they were curious about what kind of rhythm TAPO could generate. In the following weeks, I will refine the hardware design and enrich the output (some control and digital outputs).

Acknowledgements:

I would like to sincerely thank Ali Momeni for his advice and support on technology and idea development, and all the guest reviewers who gave me many constructive suggestions.