‘CMU View from Afar’ is a remote viewing tool for spaces at Carnegie Mellon University.

Why

In our initial research, we spoke with many current CMU students who had taken a campus tour before arriving at school. They all mentioned that the tour took place outside the buildings on campus, so they were unable to see adequately inside some of the labs, lecture halls, and eating areas. Many international students also mentioned that they were unable to visit CMU for a campus tour before their academic career here began, because they could not travel to Pittsburgh just for a short visit. With this in mind, we set out to create a tool for potential students, or anyone interested for that matter, to view the inside of rooms at CMU without leaving the comfort of their home, wherever that may be.

How

The project aims to provide an ‘inside’ view of Carnegie Mellon’s facilities. Our website, cmuviewfromafar.tumblr.com, provides this experience through a three-step process:

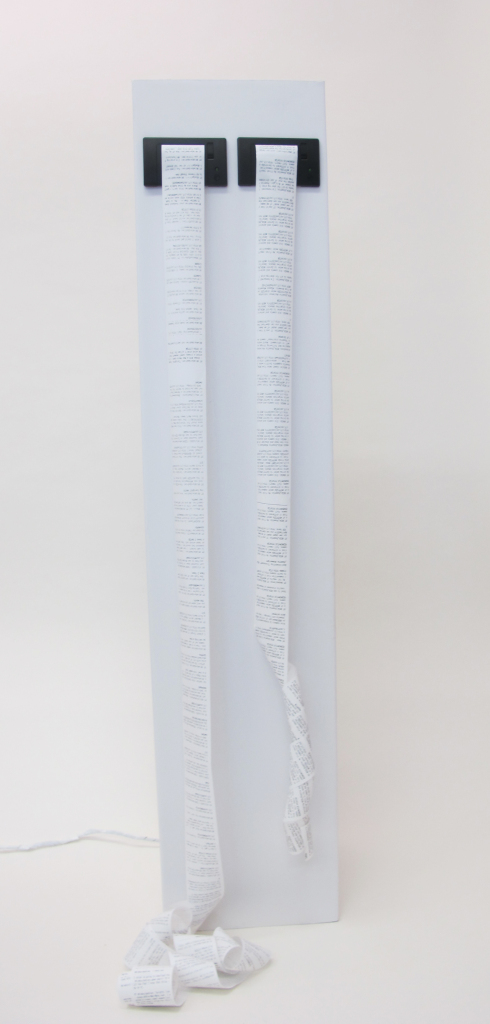

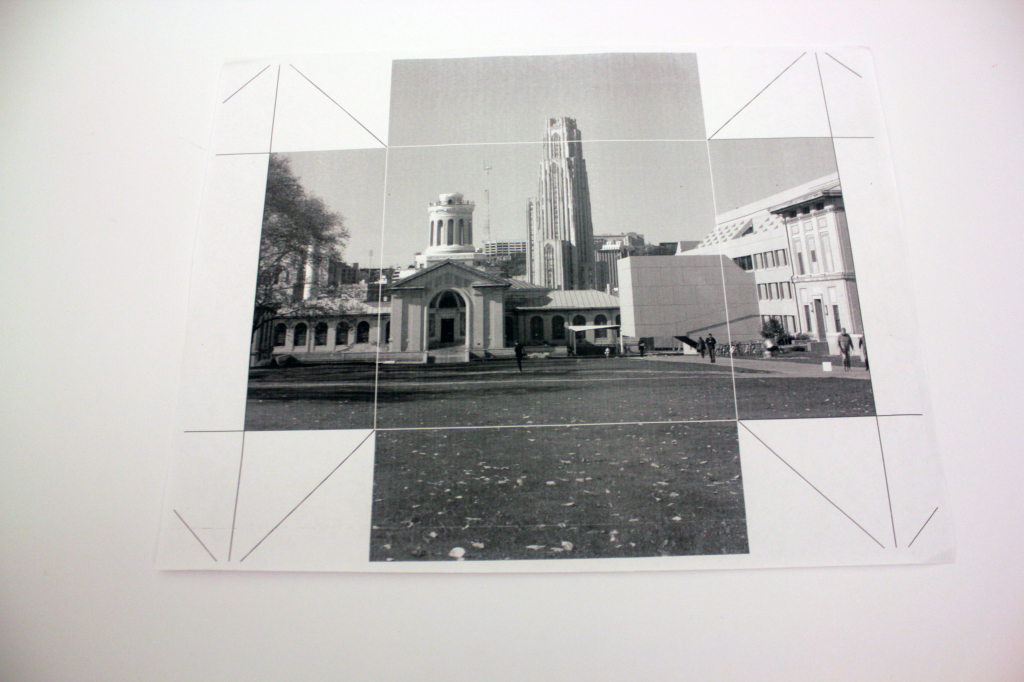

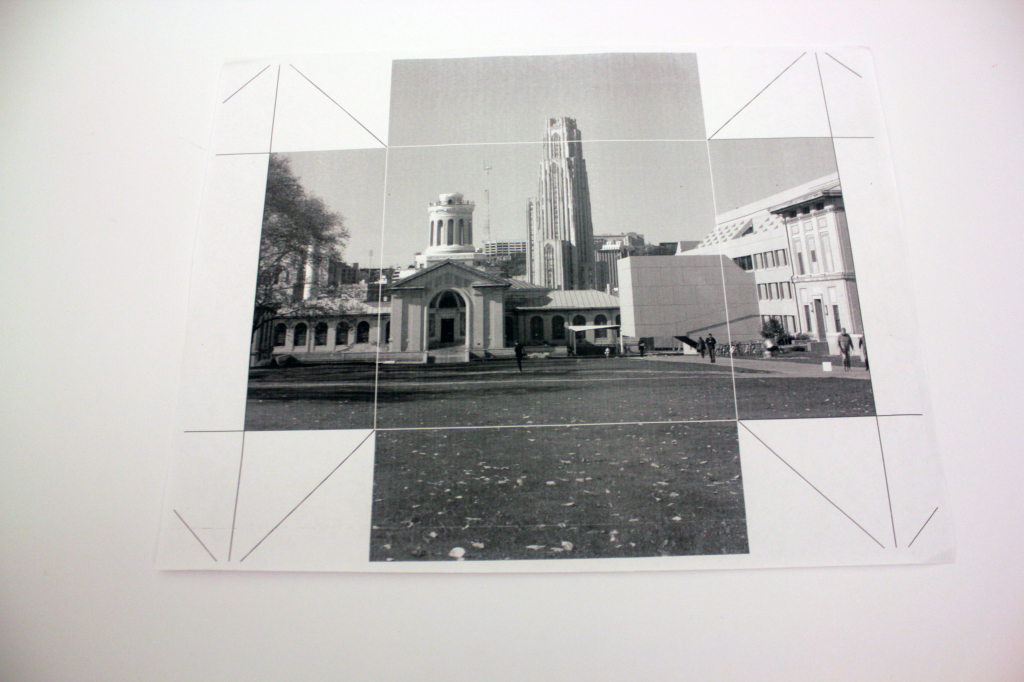

1. A PDF is downloaded and printed. The printout becomes the interface for the tool (see Fig. 1).

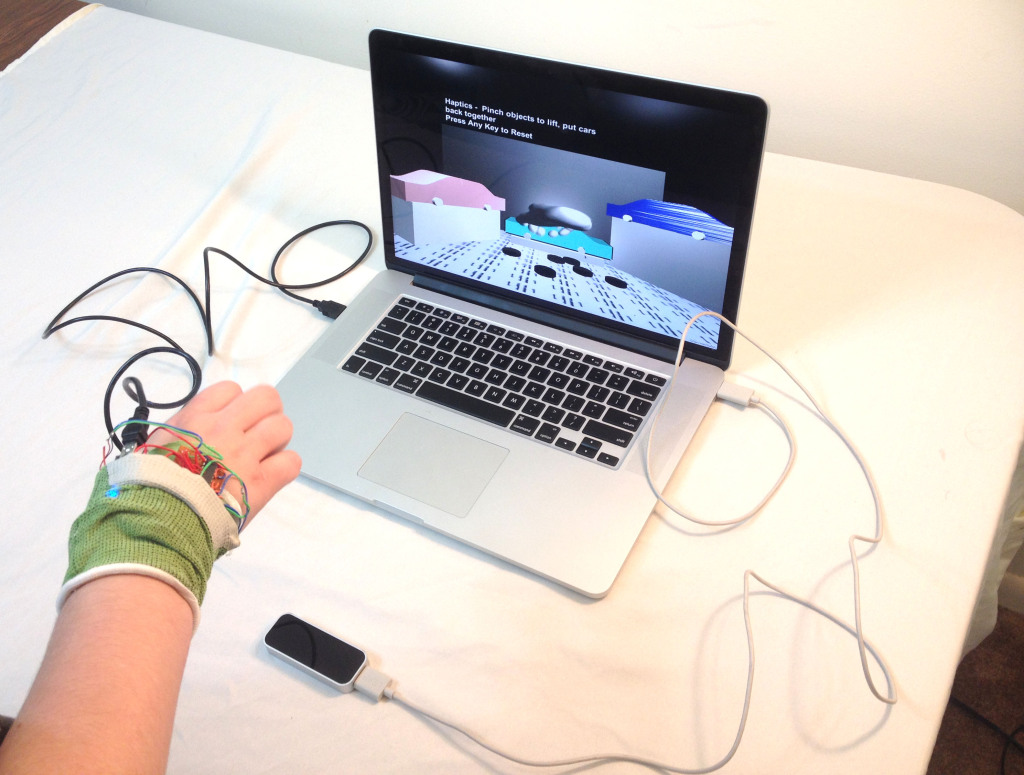

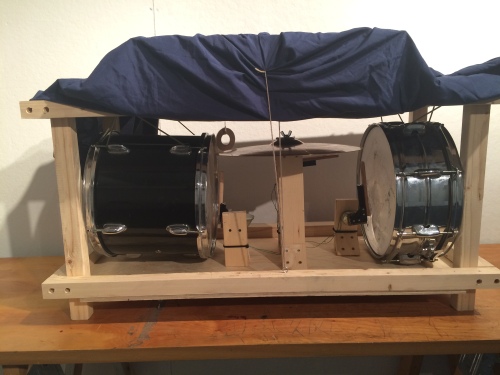

2. An instructional video on the website explains how to fold the printed PDF into a paper box (see Fig. 2).

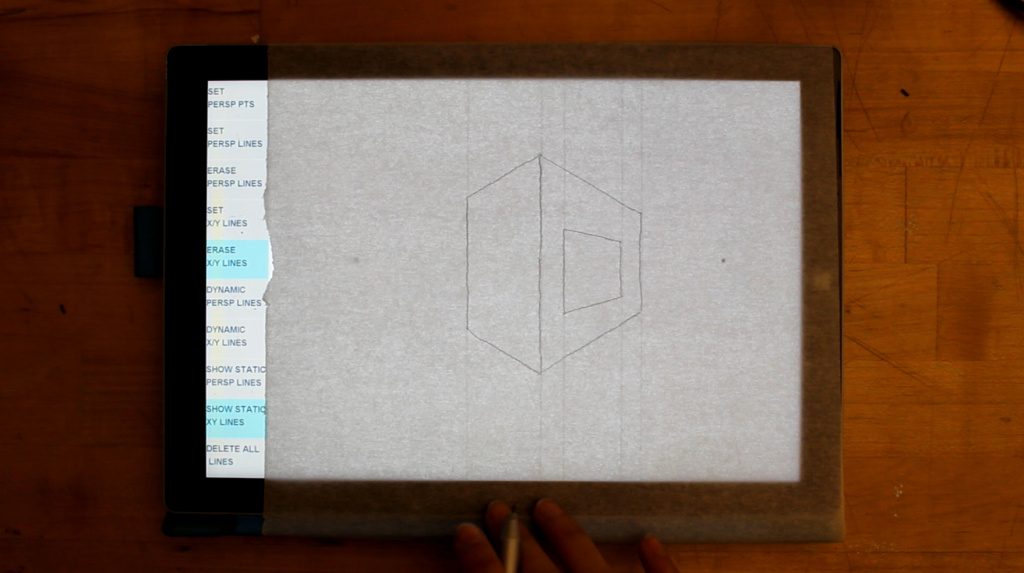

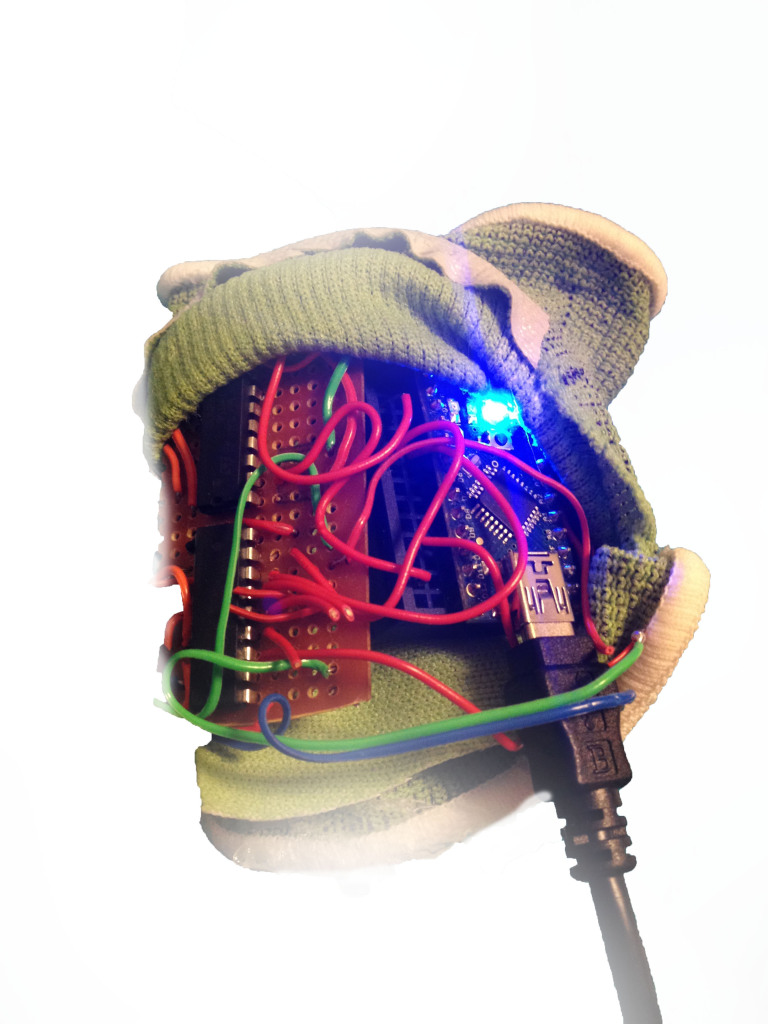

3. An .apk file (an application package for Android devices) is downloaded from the website and installed on an Android smartphone or tablet (see Fig. 3).

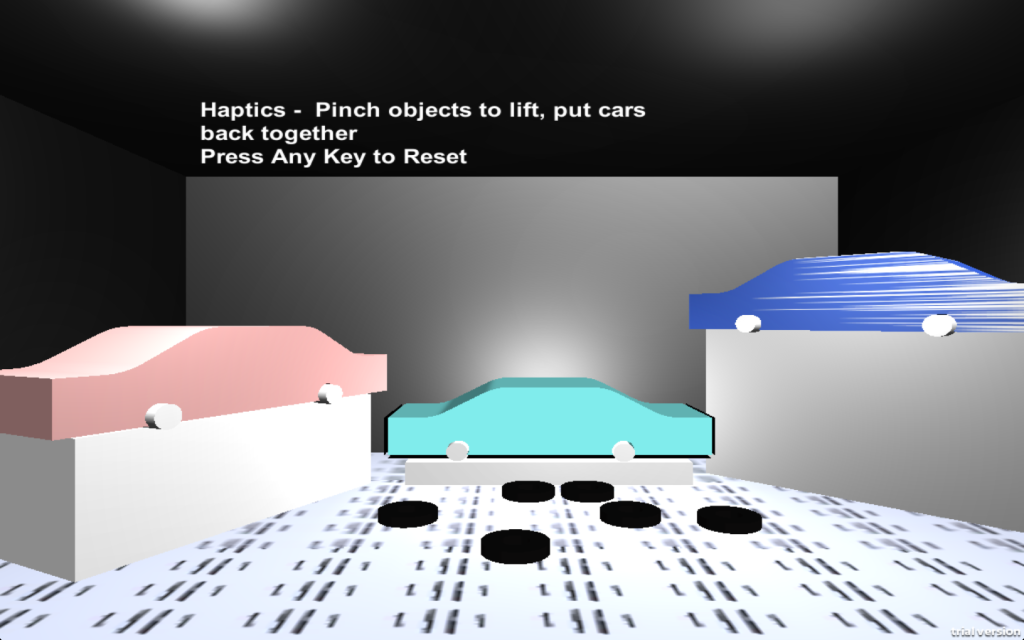

Now, when the application is opened and the device's camera is held over the box, a (glitchy but recognizable) room at Carnegie Mellon University is augmented onto the box on the device's screen. The user can switch between rooms using a small menu on the side of the screen.

Fig. 1

Fig. 2

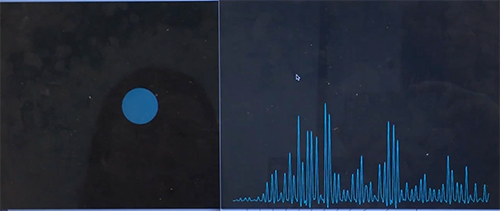

Fig. 3

In this prototype, three rooms are available in the menu: an art fabrication lab in Doherty Hall, a standard lecture hall, and Zebra Lounge Cafe, located within the School of Art.

Video

Looking Ahead

For future iterations, we hope to incorporate a process for recording 3D video so that animated 3D interactions can take place within the box in real time. This is possible using a Kinect sensor and a custom-made plugin from Quaternion Software.

The Big Picture

CMU View from Afar is a tangible interface for remotely navigating a space using augmented reality technology. Our goal was to make the tool as accessible and simple to use as possible. We set up the website and chose a single folded sheet of paper as the image target so that, apart from an Android device, internet access and a printer are the only things needed to start using the tool. We can also keep updating the .apk file, and users can download the new versions, while the box, as the image target, stays the same. The same box can therefore serve as a platform for distributing many kinds of information; only the .apk file needs to change.
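The design choice above, a fixed printed target with content that changes only through app updates, can be illustrated with a minimal sketch. The write-up does not say which AR toolkit the app uses, so this ignores tracking and rendering entirely and models only the decoupling between the box and the room list. Every name here (`BOX_TARGET_ID`, `AppRelease`, `select`, `content_for`) is hypothetical and not taken from the actual application.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical identifier for the printed, folded box. It never changes,
# so users never need to reprint it.
BOX_TARGET_ID = "cmu_box"


@dataclass
class AppRelease:
    """Hypothetical model of one .apk release and its side-menu contents."""
    version: str
    rooms: list[str]  # rooms offered in the side menu of this release
    selected: int = 0  # index of the room currently chosen in the menu

    def select(self, index: int) -> str:
        """Switch the side-menu selection and return the chosen room."""
        if not 0 <= index < len(self.rooms):
            raise IndexError(f"no room at menu position {index}")
        self.selected = index
        return self.rooms[index]

    def content_for(self, detected_target: str) -> Optional[str]:
        """Return the room to overlay when a target is detected, else None."""
        if detected_target != BOX_TARGET_ID:
            return None  # not our box: show nothing
        return self.rooms[self.selected]


if __name__ == "__main__":
    v1 = AppRelease("1.0", ["Art fabrication lab", "Lecture hall", "Zebra Lounge Cafe"])
    v1.select(2)
    print(v1.content_for(BOX_TARGET_ID))  # prints "Zebra Lounge Cafe"

    # A hypothetical later release adds a room; the same box still works.
    v2 = AppRelease("2.0", v1.rooms + ["Another room"])
    print(v2.content_for(BOX_TARGET_ID))  # prints "Art fabrication lab"
```

Because the box only identifies *which* target is in view, and each release decides *what* to show on it, adding or replacing rooms is purely an app-side change.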