“Rehab Touch” By Meng Shi (2014)

rehab touch from Meng Shi on Vimeo.

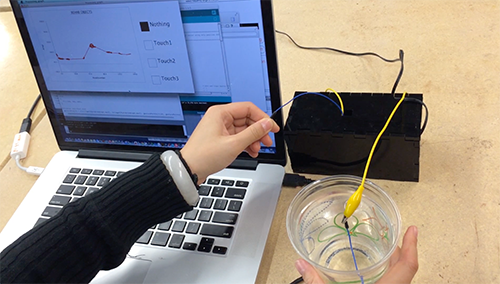

The goal of this final project is to explore possible ways to detect people’s gestures when they touch an object.

Background:

Stroke affects the majority of survivors’ ability to live independently and their quality of life.

Rehabilitation in a hospital is extremely expensive and is not covered by medical insurance.

Our aim is to offer them a possible low-cost solution: a home-based rehabilitation system.

Touche:

www.disneyresearch.com/project/touche-touch-and-gesture-sensing-for-the-real-world/

Touch and activate:

dl.acm.org/citation.cfm?id=2501989

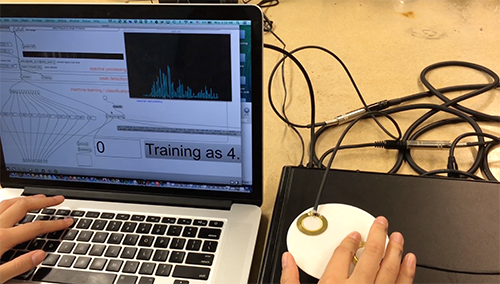

These two projects detect gestures in different ways: Touche measures changes in capacitance in the circuit, while Touch and Activate sends a frequency sweep through the object and detects changes in its frequency response.
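
Both approaches end up with the same kind of data: a response profile measured across a range of frequencies, whose shape changes with the way the object is touched. As a rough illustration of how such a profile could be turned into a gesture label, here is a minimal sketch that compares a reading against stored templates and picks the closest one. This is not the method used in either project or in my patch; the template values, labels, and function names are all made up for the example.

```python
import math

def normalize(profile):
    """Scale a response profile to unit length so overall signal level is ignored."""
    norm = math.sqrt(sum(v * v for v in profile))
    if norm == 0:
        raise ValueError("profile is all zeros")
    return [v / norm for v in profile]

def distance(a, b):
    """Euclidean distance between two profiles of equal length."""
    if len(a) != len(b):
        raise ValueError("profiles must have the same number of frequency steps")
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))

def classify(profile, templates):
    """Return the label of the stored template closest to the measured profile."""
    measured = normalize(profile)
    return min(
        templates,
        key=lambda label: distance(measured, normalize(templates[label])),
    )

# Hypothetical templates: amplitude measured at 8 swept frequencies,
# recorded once per gesture during a training step.
TEMPLATES = {
    "no touch": [1, 2, 4, 8, 9, 5, 2, 1],
    "one finger": [1, 3, 7, 9, 5, 3, 2, 1],
    "grasp": [4, 8, 9, 5, 3, 2, 1, 1],
}

# A new (made-up) reading whose peak sits where the "one finger" template peaks.
reading = [1.1, 2.9, 6.8, 9.2, 5.3, 2.8, 2.1, 0.9]
print(classify(reading, TEMPLATES))
```

Normalizing each profile first means the comparison depends on the shape of the curve rather than on how strong the signal happens to be.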

My exploration of these two techniques is based on two sets of instructions:

Instructable: www.instructables.com/id/Touche-for-Arduino-Advanced-touch-sensing/

Ali: http://artfab.art.cmu.edu/touch-and-activate/

At first I explored “Touche” and showed it at the critique; after the final critique, I moved on to “Touch and Activate”.

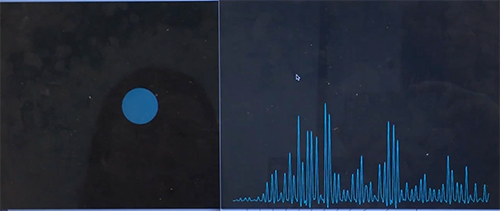

To give the user feedback, I connected Max to Processing and did the visualization in Processing, since Max does not seem to be very good at data visualization. (I didn’t find a good library for data visualization in Max, though that may also be due to my limited knowledge of Max.)
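
To make the idea of passing data from a sensing program to a separate visualization program concrete, here is a small sketch that sends one gesture message over UDP on the local machine and reads it back. It is only an assumption that a link like this resembles the one in my setup: the message format, the function names, and the use of plain text datagrams are all invented for the example, and the sketch is written in Python rather than Max or Processing.

```python
import socket

def encode(label, strength):
    """Pack a gesture label and a 0..1 strength into one text datagram."""
    return f"{label} {strength:.3f}".encode("utf-8")

def decode(datagram):
    """Inverse of encode: returns (label, strength)."""
    label, strength = datagram.decode("utf-8").rsplit(" ", 1)
    return label, float(strength)

def round_trip(label, strength):
    """Send one message over UDP on localhost and read it back."""
    receiver = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    sender = socket.socket(socket.AF_INET, socket.SOCK_DGRAM)
    try:
        receiver.bind(("127.0.0.1", 0))  # port 0: let the OS pick a free port
        receiver.settimeout(2.0)         # UDP can drop packets; never wait forever
        sender.sendto(encode(label, strength), receiver.getsockname())
        datagram, _ = receiver.recvfrom(1024)
        return decode(datagram)
    finally:
        sender.close()
        receiver.close()

print(round_trip("one finger", 0.82))
```

In a real setup the sender and receiver would live in two different programs, and the receiver would redraw the visualization each time a new message arrives.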

Limitation of system:

“Touch and Activate” does not seem to work very well in a noisy environment (like the final demo environment), even though it only uses high frequencies.

The connection between Max and Processing is not very robust, so the signal is too weak to drive more complicated patterns.