Kyle Verrier’s Midsemester Report

My two best friends always allow me to take center stage, metaphorically speaking. So why not return the kindness by introducing them to each other? Silly personal thoughts like this became the catalyst for playing with the idea of using the physical controls on a guitar to interact with the web, so that the guitar might trigger musical notes, as well as other unique sound elements, played through the computer. In other words: “How could I use my guitar as a kind of pseudo keyboard to feed sound information into my computer?” This initial question required me to figure out how to build an augmented guitar performance instrument that could actually interact with a web browser. It quickly became obvious that I needed MAX, because of its ability to rapidly prototype sonic explorations. I then had to narrow my focus to what would best resonate with me as a way of reinventing the use of the guitar. This led me into a series of initial experiments aimed at developing a solid sense of what a feasible application for controlling the web browser as a musical instrument might look like.

What follows is a collection of narratives describing the thought process behind each of these experiments. The resulting patches can all be found on my GitHub page under Max-Experiments. Feel free to download them, ask me questions, critique, fix, improve, etc.

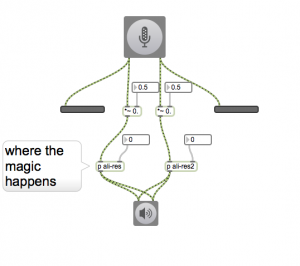

Modeling A Delay Pedal, Pedal, Pedal, …

As an exercise to familiarize myself with MAX, I decided to port something I knew well from the guitar realm into the software world. I have always been fascinated by delay effects, more commonly known as “echo”, so creating such a ‘pedal’ in MAX became my first goal. Fortunately, MAX has plenty of built-in objects (particularly tapin~ and tapout~) that handle the more complex audio signal processing this effect requires. My main takeaway, however, was establishing a workflow (the power of hotkeys!) and working out best practices for modularizing patches and using “bpatcher” for GUI elements.
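To make the tapin~/tapout~ idea concrete outside of MAX, here is a minimal Python sketch of a feedback delay (echo), assuming mono floating-point samples held in a plain list. The function name and its parameters (delay_ms, feedback, mix) are illustrative, not MAX's own: the circular buffer plays the role of tapin~, reading the oldest sample plays the role of tapout~, and writing the delayed sample back into the buffer mimics patching tapout~ back into tapin~ to get repeating echoes.

```python
def feedback_delay(signal, sample_rate, delay_ms, feedback, mix):
    """Echo effect: a circular delay buffer whose output is fed back into its input.

    feedback (0..1) sets how much of each echo is re-delayed; mix (0..1) blends dry and wet.
    """
    delay_samples = max(1, int(round(sample_rate * delay_ms / 1000.0)))
    buffer = [0.0] * delay_samples  # delay memory, like tapin~
    write_pos = 0
    out = []
    for x in signal:
        delayed = buffer[write_pos]  # sample written delay_samples ago, like tapout~
        buffer[write_pos] = x + feedback * delayed  # feed the echo back into the line
        write_pos = (write_pos + 1) % delay_samples
        out.append((1.0 - mix) * x + mix * delayed)  # dry/wet blend
    return out
```

Feeding in a single click shows the behavior: the dry click, then echoes every delay_ms that shrink by the feedback factor each time.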

The Charlie Brown Sound

After the in-class demonstration of using a light sensor as a controller for MAX, I quickly became enamored with interaction beyond clicking and dragging an “int box” within MAX, and I wanted to further explore the capabilities of the light controller. By mapping the amount of light being picked up to various parameters, I was able to create a range of different sounds driven by hand gestures. Standout mappings included frequency, frequency with pitch constraints (scales, arpeggios, etc.), filter cutoff frequency, and LFO filter rate. Oddly enough, my favorite sound came from a fairly odd mix of all of them. The result can only be described, in my estimation, as “that sound the adults make in Charlie Brown”. Here’s a quick video taken on my phone to give you an idea:
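The “frequency with pitch constraints” mapping can be sketched in a few lines of Python. This assumes the sensor reading has already been scaled to 0.0 (dark) through 1.0 (bright), as a scale object might do in MAX; the pentatonic scale, base note, and function names are illustrative choices, not what the original patch used. mtof follows the standard MIDI-to-frequency formula that MAX's mtof object also uses.

```python
MAJOR_PENTATONIC = [0, 2, 4, 7, 9]  # illustrative scale: semitone offsets within one octave


def mtof(midi_note):
    """MIDI note number to frequency in Hz (note 69 = A4 = 440 Hz)."""
    return 440.0 * 2.0 ** ((midi_note - 69) / 12.0)


def light_to_note(reading, base_note=48, octaves=2, scale=MAJOR_PENTATONIC):
    """Map a normalized light reading (0.0 dark .. 1.0 bright) onto a note in a scale."""
    reading = min(max(reading, 0.0), 1.0)  # clamp noisy sensor values
    steps = len(scale) * octaves  # number of scale degrees the gesture can reach
    index = min(int(reading * steps), steps - 1)  # which degree the light selects
    octave, degree = divmod(index, len(scale))
    return base_note + 12 * octave + scale[degree]
```

Because every reading snaps to a scale degree, a waving hand produces melodic steps rather than a continuous glide; swapping the scale list for chord tones gives the arpeggio variant.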

On reflection, I found this medium somewhat limiting because of its pure 1-to-1 mapping from gesture to sound, which I wanted to get away from. Few-to-many mapping was an idea I wanted to explore further.

“Kyle! Stop Tapping!” -Love, Mom

Taking inspiration from another class demo on resonant filtering, I wanted to build a basic drum synth with contact mics. I did this by taking the resonator patch and feeding a contact mic input into the filter. Satisfaction was instant: striking a contact mic secured to a table with blue painter’s tape produced a rich, complex, full-bodied drum sound in my headphones. This brought me back to when I was first studying drums and used my hands and lap, or any and every other surface for that matter, in place of actual drums and sticks.
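The class resonator patch is not reproduced here; as a stand-in, this Python sketch uses a textbook two-pole resonant filter, which is one common way to turn a sharp tap into a ringing, drum-like tone. The function name, the 60 dB decay convention, and the sin(theta) input gain (which keeps the ring's peak near 1) are assumptions of this sketch. In the real setup, the contact mic signal would replace the single-sample impulse used in the example.

```python
import math


def resonator(signal, sample_rate, freq_hz, decay_s):
    """Two-pole resonant filter: a tap in gives a decaying ring at freq_hz out."""
    # Pole radius chosen so the ring falls by 60 dB (a factor of 1000) in decay_s seconds.
    r = 0.001 ** (1.0 / (decay_s * sample_rate))
    theta = 2.0 * math.pi * freq_hz / sample_rate
    a1 = 2.0 * r * math.cos(theta)
    a2 = -r * r
    gain = math.sin(theta)  # normalizes the impulse response peak to roughly 1
    y1 = y2 = 0.0  # previous two outputs
    out = []
    for x in signal:
        y = gain * x + a1 * y1 + a2 * y2
        out.append(y)
        y2, y1 = y1, y
    return out


if __name__ == "__main__":
    sr = 8000
    tap = [1.0] + [0.0] * (sr - 1)  # one sharp strike, then silence
    ring = resonator(tap, sr, freq_hz=200.0, decay_s=0.5)
    print(max(abs(v) for v in ring[:40]), max(abs(v) for v in ring[4000:]))
```

Lower freq_hz values sound more like a tom or kick, and a longer decay_s gives a more resonant, bell-like body.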

Image Repainter

I suspected that the visual power built into MAX through Jitter would be worth getting aggressively familiar with. I had a simple idea in mind: repaint an existing image. This task is surprisingly more difficult than one might think. I started by taking a black & white image and painting onto a new, blank canvas, where the positions of the original image’s black pixels get colored in one at a time, at random. Repeating this very quickly gave exactly the impression I was going for: an image materializing before your eyes. I had bigger ideas for some kind of sonic experience to go along with it, but I lacked a clear sense of purpose connecting the two, so I put this experiment on the back burner.
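The repainting logic is independent of Jitter, so here is a plain-Python sketch of it, assuming the black & white image is a 2D list where 1 marks a black pixel and that “at random” refers to the order in which pixels are filled in. In Jitter the canvas would be a jit.matrix; the generator name and the pixels_per_frame parameter are hypothetical.

```python
import random


def repaint_frames(image, pixels_per_frame=1, seed=None):
    """Yield canvases in which the original's black pixels reappear in random order.

    image: 2D list where 1 marks a black pixel and 0 marks white.
    """
    rng = random.Random(seed)
    height, width = len(image), len(image[0])
    # Positions of every black pixel in the original image.
    targets = [(y, x) for y in range(height) for x in range(width) if image[y][x] == 1]
    rng.shuffle(targets)  # random painting order
    canvas = [[0] * width for _ in range(height)]  # start from a blank (all-white) canvas
    for start in range(0, len(targets), pixels_per_frame):
        for y, x in targets[start:start + pixels_per_frame]:
            canvas[y][x] = 1  # paint this pixel black
        yield [row[:] for row in canvas]  # copy so each frame is an independent snapshot
```

Displaying each yielded frame at a fast rate produces the materializing effect, and raising pixels_per_frame speeds it up for large images.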

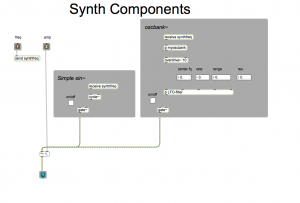

Guitar-tron

In line with my initial intention, I wanted to bring the guitar into MAX. Fortunately, there was a great pre-existing patch for ‘traditional’ guitar effects (amp modeling, distortion, delay, etc.). Next on my list was harnessing the computer’s brain power for higher-level analysis of the incoming audio. Pitch detection was an obvious start: the “fiddle~” and, later, “yin~” objects provided a quick and dirty pitch detector. Taking the pitch of the guitar and synthesizing new tones from it led to some fun ‘circa ’80s style’ guitar synths. I ran into some classic issues, such as latency and ambiguous pitch readings at the low end of the guitar’s frequency range. Mixing in the original signal and adjusting the FFT window size mitigated these somewhat, but both could definitely stand more tweaking. In addition, I would love to push the computer’s brain power further toward more complex, modern analysis, such as “tone” profiles, rhythm detection, or “something a Line 6 product can’t do,” as Professor Ali put it.
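yin~ is named after the YIN pitch-estimation algorithm (de Cheveigné and Kawahara). Purely to show what that style of detector does under the hood, here is a simplified pure-Python version of YIN's core steps; it is not the source of the yin~ object, and the fmin, fmax, and threshold defaults are assumptions of this sketch.

```python
import math


def yin_pitch(frame, sample_rate, fmin=60.0, fmax=1000.0, threshold=0.1):
    """Estimate the fundamental frequency (Hz) of one mono frame with a basic YIN.

    Returns None when no lag dips below the threshold (e.g. silence or noise).
    """
    tau_min = int(sample_rate / fmax)  # shortest period considered, in samples
    tau_max = int(sample_rate / fmin)  # longest period considered, in samples
    window = len(frame) - tau_max  # samples compared at every lag
    if window <= 0 or tau_min < 1:
        raise ValueError("frame too short for fmin, or fmax too close to sample_rate")
    # Step 1: difference function d(tau), the squared difference at each lag.
    diff = [0.0] * (tau_max + 1)
    for tau in range(1, tau_max + 1):
        diff[tau] = sum((frame[j] - frame[j + tau]) ** 2 for j in range(window))
    # Step 2: cumulative mean normalized difference d'(tau), with d'(0) = 1.
    cmnd = [1.0] * (tau_max + 1)
    running_sum = 0.0
    for tau in range(1, tau_max + 1):
        running_sum += diff[tau]
        cmnd[tau] = diff[tau] * tau / running_sum if running_sum > 0 else 1.0
    # Step 3: first lag below the threshold, then slide down to that dip's minimum.
    tau = tau_min
    while tau <= tau_max:
        if cmnd[tau] < threshold:
            while tau + 1 <= tau_max and cmnd[tau + 1] < cmnd[tau]:
                tau += 1
            break
        tau += 1
    else:
        return None  # no clear periodicity found
    # Step 4: parabolic interpolation for a sub-sample period estimate.
    period = float(tau)
    if tau - 1 >= 1 and tau + 1 <= tau_max:
        a, b, c = cmnd[tau - 1], cmnd[tau], cmnd[tau + 1]
        denom = a - 2.0 * b + c
        if denom != 0.0:
            period = tau + 0.5 * (a - c) / denom
    return sample_rate / period


if __name__ == "__main__":
    sr = 8000
    test_tone = [math.sin(2 * math.pi * 220.0 * n / sr) for n in range(1024)]
    print(yin_pitch(test_tone, sr))  # close to 220 Hz
```

Note how fmin sets tau_max: the frame must span the longest period plus the comparison window, so tracking the guitar’s low E string (about 82 Hz) needs longer frames than the high strings do, which is one reason low notes cost extra latency.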

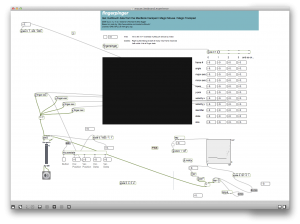

A Human Computer Dialogue

Cue the second act of my exploration. I became fascinated with human-computer interaction more broadly and wanted to sonify the computer experience, particularly the web browser. The structure of a web browser in many ways lends itself to a ‘song’ form. Each page could supply a set of attributes: its text (perhaps analyzed with some natural language processing), the actual structure of its document tree, and its visual elements (clutter, color, content). The user has temporal control over how long they stay on each page, and the mouse provides a natural interface for triggering different sounds.

Singing Multi Touch Pad

If I were going to make the web browsing experience a musical instrument, there was no getting around the mouse and keyboard as sources of input. Fortunately, there is a great MAX patch out there that taps into Apple’s multi-touch trackpad and provides all of its associated data (find it here). Wanting to get away from the 1-to-1 mapping limitations I had discovered in previous experiments, my first thought was to use mouse velocity to control the amplitude of a sound source. The result was a pleasant surprise: I was instantly reminded of the ‘humming wine glass’, where you wet your finger and rub it around the rim of a fine wine glass until the glass vibrates at its resonant frequency and ‘sings a hum’ (example). Next, I panned the sound to follow the mouse, so that placing the mouse on the left side of the screen put the sound in the left ear. This gave the user a nice sense of space within the computer.
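The velocity-to-amplitude and position-to-pan mappings can be sketched in Python, assuming you already receive successive cursor positions in pixels along with the time between them (the trackpad patch itself is not reproduced). The function name and the max_speed ceiling are hypothetical, and the equal-power pan law is a standard choice rather than necessarily what the original patch used.

```python
import math


def mouse_to_sound(prev_pos, pos, dt, screen_width, max_speed=2000.0):
    """Turn two mouse samples into (left_gain, right_gain).

    Speed (pixels per second) sets loudness; horizontal position sets stereo pan.
    """
    dx, dy = pos[0] - prev_pos[0], pos[1] - prev_pos[1]
    speed = math.hypot(dx, dy) / dt
    amplitude = min(speed / max_speed, 1.0)  # still mouse = silence, fast = full volume
    pan = min(max(pos[0] / screen_width, 0.0), 1.0)  # 0.0 = far left, 1.0 = far right
    angle = pan * math.pi / 2  # equal-power law keeps loudness even across the screen
    return amplitude * math.cos(angle), amplitude * math.sin(angle)
```

In practice the gains would also be smoothed over time (for example with a short ramp) so the hum swells and fades like the wine glass instead of jumping with every mouse event.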

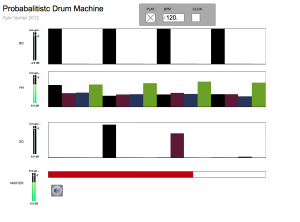

Bernoulli Beats

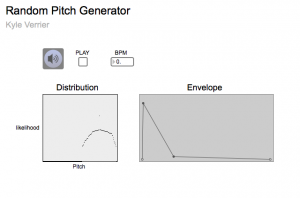

Exploring algorithmic composition, I was drawn to the realm of ‘controlled randomness’: drum beats generated from probabilities. Inspired by the intricacies of traditional drumming, where a beat pattern serves as a framework for subtle variations on each repetition, I wanted to see whether this could be emulated with a probability distribution. My thought process went, “Hey, every once in a while the drummer does this big crash on 2; that could equate to a low probability.” Of course, this was more a fun curiosity than anything rigorous. I built it by creating a multi-slider for each ‘synthesized’ drum, where each slider represented a subdivision of the beat. The user controls the likelihood that a bang is sent to a line object representing that drum’s envelope. The multi-sliders were read by a counter driven by a metro object, which constantly queried whether to play each instrument based on the likelihood entered.
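The metro, counter, and multi-slider arrangement boils down to one Bernoulli trial per drum per subdivision, where each slider value is the probability of a hit. Here is a minimal Python sketch of that loop; the PATTERN values (16 subdivisions per bar, with a rare crash on beat 2) are made up for illustration, and the returned hit list stands in for the bangs that would trigger each drum’s envelope.

```python
import random

# Hypothetical slider values: one hit probability (0.0-1.0) per 16th note of a 4/4 bar.
PATTERN = {
    "kick": [0.9, 0, 0, 0.1, 0, 0, 0.3, 0, 0.9, 0, 0.2, 0, 0, 0, 0.3, 0],
    "snare": [0, 0, 0, 0, 0.95, 0, 0, 0.1, 0, 0, 0, 0, 0.95, 0, 0, 0.2],
    "crash": [0, 0, 0, 0, 0.05, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0],  # rare big crash on 2
}


def play_bars(pattern, bars, rng):
    """Step through every subdivision and flip a weighted coin for each drum.

    Returns (step_index, drum_name) pairs; each pair would bang that drum's envelope.
    """
    steps = len(next(iter(pattern.values())))  # subdivisions per bar
    hits = []
    for step in range(bars * steps):  # each metro tick advances the counter
        position = step % steps
        for drum, probs in pattern.items():
            if rng.random() < probs[position]:  # Bernoulli trial with p = slider value
                hits.append((step, drum))
    return hits


if __name__ == "__main__":
    for step, drum in play_bars(PATTERN, bars=2, rng=random.Random(1)):
        print(step, drum)
```

Sliders at 1.0 and 0.0 reproduce a fixed pattern exactly, while intermediate values let each bar drift from the framework the way a drummer’s variations do.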

Next, I wanted to get even further away from the traditional sense of a “beat” or ‘defined pitch classes’, so my next patch generated random frequencies according to a controllable probability. In MAX this meant a metro object continuously sending bangs into a table object in which the likelihood of each frequency could be controlled interactively. The result instantly had me imagining haunting, computer-generated science fiction noises. I was drawn to this sound, but was still working out how it might best fit into what I was trying to create.
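Banging a table of likelihoods amounts to a weighted random pick on every metro tick. Python’s random.choices performs the same kind of weighted pick, so this sketch uses it; the frequency list and weights are hypothetical stand-ins for the drawn table, and in MAX the chosen table index would still need to be mapped to a frequency.

```python
import random

# Hypothetical "table": candidate frequencies (Hz) and how likely each one is.
FREQS = [110.0, 220.0, 330.0, 440.0, 550.0, 660.0, 880.0]
WEIGHTS = [5, 1, 0, 3, 0, 2, 1]  # 0 = never chosen; larger = chosen more often


def random_frequencies(freqs, weights, ticks, rng):
    """Emulate a metro banging a probability table: one weighted pick per tick."""
    if len(freqs) != len(weights) or sum(weights) <= 0:
        raise ValueError("need one weight per frequency and at least one positive weight")
    return rng.choices(freqs, weights=weights, k=ticks)


if __name__ == "__main__":
    print(random_frequencies(FREQS, WEIGHTS, ticks=8, rng=random.Random(7)))
```

Redrawing the weights while the sequence runs is the interactive part: shifting weight toward the high entries makes the ‘computer’ chatter sound more frantic.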

Do Androids Dream of Electric Sheep (Noises)?

Science fiction (Sci-Fi) film sound design holds a special fascination for me. Create an airy sound for something that doesn’t exist, and your mind starts believing it could be real and just might hold some form of life. WHAT IS IT? WHERE IS IT? WHAT WILL IT DO? In an attempt to immerse myself in this idea, I switched to a more traditional DAW environment (Ableton Live), closed myself in a room with the lights off, and tried to imagine drifting in an isolated futuristic ship in space, with no other human life for trillions of kilometers (fingers crossed the future will use the metric system). The first sounds that came to mind were an ambient hum challenged by manic machine noises. Taking the Sci-Fi theme further, I aimed to emulate those somewhat ‘cheesy’ randomly jumping oscillator frequencies that signify a computer performing computations, in essence suggesting its own existence as a life form: a facade of ‘thinking’.

Sci-Fi Sound Design Example by K_V

Here’s a clip from the cult classic Sci-Fi film “Blade Runner”, in which Harrison Ford’s character uses an interface to analyze a photo. It has an awesome mix of mechanical and electronic beeps from the machine and the ambient drone of hover cars in the background.

On a side note, this is supposedly the inspiration for those CSI scenes where the investigators take photos and yell at the computer to “enhance that license plate” to miraculously get the right numbers to capture the killer. I guess it is nice to believe that some of today’s on-screen miracles will be realized through tomorrow’s computer science.

A Clockwork Orange

Another concept I became enamored with was isolation while interacting with the computer. Contrary to what marketing campaigns have been pushing lately, working on a computer is far from a truly ‘social’ experience. Of course, while it is great that you can hold a conversation, even face to face on screen, with someone on the other side of the globe, this will always be a weak substitute for actually talking with someone in person. It seemed to me that one way to bring this reality into conscious awareness was to make computer users more aware of themselves through a sonic experience. I began experimenting with ambient microphones picking up the little ‘clicks and clacks’ of the keyboard, as well as any other isolated, lonely sound the user’s room might offer. Piping the live room audio into headphones sent a powerful signal that the user was alone, with no real friend in sight: a potentially unsettling experience. To drive home the ‘in actuality you are alone’ message, I wanted to try noise-canceling technology. However, active noise cancellation seems to be something of a closely guarded secret among big companies, and there are no go-to MAX objects for it. I am still weighing what all of this represents at a larger level. Luckily, there is a simple enough way to block sound: a pair of cheap earmuffs, like those commonly used for hearing protection when shooting guns. When I informally tried the headphones with processed room noise and earmuffs on some test subjects (thank you guys & gals), each had a similar emotional reaction, voicing discomfort with the processed sound.

Alice Through the Looking Glass

As of now, I have a lot of different components that I am excited about and that I feel could add an interesting dynamic to web browsing, but they still need to be put together in a meaningful way.

Having worked through the experiments described so far, all driven by personal curiosity, I still find myself strongly drawn to playing with sound as it relates to the computer. I have shifted from my initial idea of the guitar as an input to the web toward a more direct use of the computer keyboard as the instrument, manipulating and controlling sound effects while the user browses the web. Along the way, I realized that what captures and grounds me is the emotional aspect of sound, or the lack thereof. When I first considered what project I wanted to explore, I immediately imagined combining my love of music and the computer in a new way. That led me to a somewhat limited perspective built around the predictability of a guitar creating sound. I moved beyond that limitation when I realized that what I want is to isolate or group the grounding element of noise and sound in creative ways while an individual is engaged on the web, and that the guitar itself is actually irrelevant. The essence of my noise exploration is not to play with predictable sound outcomes, which the web already supplies in abundance through words and music. Rather, I am trying to see whether sounds can be used more creatively to paint an auditory picture on the computer screen, potentially keeping users grounded as they venture into the World Wide Web. Because the computer can be a trickster, with its own deceit of imagined social circles, I am weighing whether familiar noises that remind users of their isolation might give them an advantage as they enter potentially dangerous territory. Prior research has examined the depression reported by individuals who feel they are falling short of their ‘friends’ on Facebook.

If vulnerable web users are reminded that this is a social illusion, as imaginary as the pleasantries of Disneyland or as dangerous as Alice’s Wonderland, many might be better able to protect a healthy self-esteem. I have experimented with a different form of discomfort, in which certain sounds serve as unwelcome reminders that, in reality, one is all alone upon entering the world of the web. Perhaps there should be a choice button of some sort: one option for those who want to remain in a delusional game in order to survive unbearable real-world pain, and another for those who would prefer to stay grounded in reality, with the idea that it is becoming to be one’s own best company?