so many data points, so many challenges…

As a refresher, my initial project plan was to use biosensing (in particular, EEG sensors) on people in some unspecified setting for some unspecified purpose and to sonify that data as a way to immerse strangers in an intimate view of another person’s experience. My project has shifted a lot since discovering that commercially available EEG sensors either 1) don’t interface well with Max or 2) don’t work on Mac computers without a lot of signal processing.

Moving forward, I decided to settle on GSR (galvanic skin response). It made the most sense because it also indirectly measures emotional reaction but comes with more scaffolding than EEG: it seems much easier to deal with, and more people have used GSR in projects. I spoke with the psych professor I’m working with and he confirmed that GSR was probably my best bet in terms of reliable data.

I’m also more settled on the form this final project will take. One person will sit in the center of the room, hooked up to a DIY GSR sensor and listening to a song that is personally meaningful to them. The data will be used in real time to transform the song they’re listening to, which is then played back to the rest of the room.

SO yeah, actual progress post now, in chronological order…

1. The psych professor I’m working with for research unrelated to this class turns out to have a GSR sensor in his lab! He graciously allowed me a pilot session with his fancy lab grade GSR sensor (I’ll talk more about this later). He also gave me the name of a professor in HCI who contacted him before with questions about GSR with plans to build one herself for her own research, though when I sent her an email she didn’t respond. Welp. I also did a lot of Max tutorials in this timeframe. I still don’t really feel comfortable in Max.

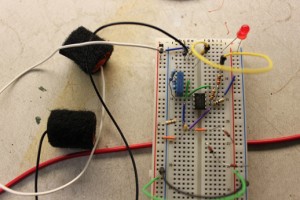

2. Built a little DIY GSR sensor. Wow, I haven’t read a circuit diagram or assembled a breadboard in such a long time that it took me way way way longer than expected. I don’t even want to talk about it, it was embarrassingly long haha. Currently it operates with a 1-2 second delay, but I’m planning on comparing it to the prototype of Data Garden’s DIY GSR sensor and seeing what the differences are in terms of data. I’m concerned that with the delay it’s not “real-time” enough… This process taught me a lot about circuit building and gave me a taste of how to google when I don’t know what something is/does.

3. Attended a workshop by Data Garden on biosensing. They’re a Philadelphia-based art group that hooks GSR sensors they built up to various plants in order to detect the plants’ responses to the people in the room or other interactions. Sam (the guy who does the engineering bits for the group) was really really supportive of my project, gave me his card and even offered to send me his PureData patch. The session was encouraging, helpful and a spiritual push from the universe to keep at this project even though working with all this knowledge I don’t have is intimidating and frustrating at times.

4. Held my pilot session, where three of my friends (self-proclaimed people who get “really emotional” about their music) came in to my professor’s lab and listened to a couple of songs that they deemed very personally significant or that conjure a lot of emotions for them. I borrowed nice stereo headphones, and they all asked to dim the lights and closed their eyes. They were required not to move while their GSR was recorded (moving would’ve caused an artificial spike in the GSR). It was interesting just getting to watch them get really into it.

5. I spent a lot of hours googling Max things relevant to opening csv files and cursory audio manipulation. Max forums are pretty great.

6. Trying to work with the data wasn’t really seamless… There are too many data points per file for Max to handle (~200,000-300,000), and it actually just crashes Max when I try to convert these points into MIDI values or just plot them on a graph. I spent a few hours trying other workarounds to manipulate my data for prototyping purposes (i.e. Excel and SPSS, a statistics package). Both took a really long time to analyze my data (5-10 minutes to generate one graph in SPSS; Excel straight up refused to plot that many points), but ultimately I could at least visualize what was happening in people’s responses to different parts of the songs.

7. I downloaded SoundHack to convert my enormous csv files into audio that Max could then interpret, but the problem is that the GSR sampling rate is about one sample per millisecond (1,000 Hz), which is far lower than audio rates, so SoundHack truncates my csvs into a ~30 sec clip for a 3 minute song (Max would’ve been nice and friendly and filled in the numbers I’m missing). That’s a problem I need to resolve. Additionally, because GSR ranges vary but run from about .6 to .9, the data sounds like straight up awful screeching when converted into audio. I’ve written some Python code that takes in a csv file and rescales the numbers into a range from -1 to 1 so it sounds better once sonified. I don’t really know how this will work in real time though.
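For reference, here is a minimal sketch of what that kind of preprocessing could look like in Python, using only the standard library. It is not the script from the pilot session. The function names, the guess that the readings sit in the first csv column, the 1,000 Hz source rate and the 44,100 Hz target rate are all assumptions for illustration. It covers three things from the steps above: rescaling readings to -1..1, averaging blocks of samples so a graph has fewer points to plot, and filling in the missing samples by linear interpolation so the file lasts as long as the song.

```python
import csv
import struct
import wave


def read_column(path, column=0):
    """Read one numeric column from a csv file, skipping rows that don't parse."""
    values = []
    with open(path, newline="") as f:
        for row in csv.reader(f):
            try:
                values.append(float(row[column]))
            except (ValueError, IndexError):
                continue  # header line or blank row
    return values


def rescale(values, lo=-1.0, hi=1.0):
    """Linearly map values so the smallest becomes lo and the largest becomes hi."""
    vmin, vmax = min(values), max(values)
    if vmax == vmin:
        return [(lo + hi) / 2.0] * len(values)  # flat signal: sit at the midpoint
    scale = (hi - lo) / (vmax - vmin)
    return [lo + (v - vmin) * scale for v in values]


def block_average(values, block):
    """Downsample by averaging each run of `block` samples (handy for plotting)."""
    chunks = (values[i:i + block] for i in range(0, len(values), block))
    return [sum(chunk) / len(chunk) for chunk in chunks]


def resample_linear(values, src_rate, dst_rate):
    """Resample to dst_rate by linear interpolation, keeping the same duration."""
    n_out = int(len(values) * dst_rate / src_rate)
    out = []
    for j in range(n_out):
        pos = j * src_rate / dst_rate          # position in the source signal
        i = int(pos)
        frac = pos - i
        nxt = values[min(i + 1, len(values) - 1)]  # hold the last value at the end
        out.append(values[i] + (nxt - values[i]) * frac)
    return out


def write_wav(path, samples, rate=44100):
    """Write floats in [-1, 1] as a mono 16-bit WAV file."""
    frames = b"".join(
        struct.pack("<h", int(max(-1.0, min(1.0, s)) * 32767)) for s in samples
    )
    with wave.open(path, "wb") as w:
        w.setnchannels(1)
        w.setsampwidth(2)
        w.setframerate(rate)
        w.writeframes(frames)
```

With a hypothetical file name, usage would be something like `write_wav("session.wav", resample_linear(rescale(read_column("session.csv")), 1000, 44100))`, and `block_average(values, 1000)` would shrink a ~200,000 point file to about 200 points for graphing. One caveat: stretched to the song’s full length, GSR changes too slowly to hear as a tone, so the result is probably more useful as a control curve than as a sound in itself.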

WHAT’S NEXT?

– um figure out what to do with this data in a more seamless way

– how can I meaningfully alter the song with my GSR data? and how can I do that in Max?

– prototype Data Garden’s GSR

– hook up my built GSRs to my computer, collect live data/figure out how to work with live data

– basically, link all the parts together
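For the live-data item, one possible starting point is to scale each reading as it arrives instead of waiting for a whole file. This is only a sketch under assumptions: the class name `LiveScaler` and the 50-reading smoothing window are made up for illustration, and how readings actually get from the sensor into Python is left out. It keeps a running average of the most recent readings and maps it to -1..1 using the lowest and highest averages seen so far.

```python
from collections import deque


class LiveScaler:
    """Smooth incoming readings and scale them to [-1, 1] using the range seen so far."""

    def __init__(self, smooth=50):
        self.window = deque(maxlen=smooth)  # most recent raw readings
        self.lo = None                      # lowest smoothed value so far
        self.hi = None                      # highest smoothed value so far

    def update(self, reading):
        """Take one new reading and return the scaled, smoothed value."""
        self.window.append(reading)
        avg = sum(self.window) / len(self.window)  # running average
        self.lo = avg if self.lo is None else min(self.lo, avg)
        self.hi = avg if self.hi is None else max(self.hi, avg)
        if self.hi == self.lo:
            return 0.0  # no range seen yet, so sit in the middle
        return -1.0 + 2.0 * (avg - self.lo) / (self.hi - self.lo)
```

Because the range is learned as the session goes, the first few seconds will swing between the extremes until the sensor has covered more of its range. A short baseline recording before the song starts would be one way to settle that.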

PRESENTER: Minnar

skeptic:

-i wanna hear what it feels like

-why do mapping in python as opposed to in max?

-the authenticity of this data?

-Candice Breitz’s King: vimeo.com/2109620

-Adrian Piper “Funk Lessons”: www.adrianpiper.com/vs/video_fl.shtml

teaching white people how to dance to Funk music

Alex:

–What form does the final piece take?

–Max sketches! Testing how to affect the music with data.

–French Film, breaks normal trajectory of how to score a movie. It is the contradictory choices that are influential. Ex) Werner Herzog’s choice of music in Aguirre (flute sound)

—

//My critique of emotional response work is what you’re running into: getting a “legitimate, quantifiable” data set. I’m interested to think about how you could use this to your advantage. “Authenticity”–well said Ali

Kyle: Might want to check out “R” for statistics software processing. Songs vs. sounds? To me (despite mechical background), a gunshot might have more emotional impact than a pop tune. The Listening Machine

Nicole: Concerned about whether you will get enough response from your homemade sensor. Worried that if it is not sensitive enough your feedback will be inherently random, and your audio will not be modified in a significant way. Why not use lab grade data?

I feel it will be difficult to get your sensor to record the data, not just get a live reading. And because it is a different sensor, the data will be in a different format than your lab grade samples, so you will have to entirely rewrite your program to handle it. If you want to use a DIY sensor I think making that as soon as possible is crucial.

Unclear what sort of output you are actually getting. How soon can you get audio samples? Modifying song vs creating output just based on data?

Any way you can simplify this? The concept is simple; any way you can strip this down a little?

If it is too technical it becomes inaccessible.

Does the data need to be real? If it is not accurate, what is the point? I feel like you only get like 10% of the effect if the data is not accurate. I feel like authenticity is crucial here.

What if you make audio of their emotional response to audio, and then repeat the process but using your output as the new input? Could be interesting. FEELINGCEPTION.

Henry: Aaaaa, “To Hear What It Feels Like” Is a very good and succinct goalphrase that encompasses the whole feel and idea of things and it is good.

– Hm! Big graphs sound scary. I would programmatically it? Unless there’s a thing. But like, yes. Do a running average maybe. Or somehow get rid of the info and compression and everything like that Hm. Oh wait you are doing some of that. Python 😀

-Max is such a bully sometimes.

– The best musicians rose out of poverty so that is a plus, actually.

– Re: Play it back to the rest of the room, their emotions will be affected by the knowledge that it is a public thing? But that might be good. Sharing your emotional songs make them more emotional, I find. Or worse. But. There are things you should test try.

– Ooh. I think it is important to take advantage of the fact that the song being played will be one which is emotionally linked to whatever the viewer would expect to feel, etc. Like, if someone is sad, they are probably playing a sad song. So maybe have the song not show up until certain feelings emerge or etc, and the rest be something else.

– Alex said “Where are we human?”. This gives me fuzzies.

Matt:

Installation setup?

In order to collect the data would you have a base line setup in-case ? – i also may have misunderstood you

but would you have a number that is happy/ sad/ mad to demo?

Storing information: Playback display chart would be nice to see: sound numbers

Maybe a written form that allows individuals to give you sound/song ideas / information you can track

as you collect sensor information.

AeonX8: I’m immediately thinking Blade Runner and the Voight-Kampff test as a possible reference. !!!!

Would like to know more about why Josh and Alec’s readings were so completely different…

Felipe: it makes me think about affect theory right away. have you read Brian Massumi’s essay “The Autonomy of Affect”? How can you capture and then re-enact the stimuli? Maybe breaking the emotional narrative we impose on sensations (affect) would be a more challenging approach. Using sounds that contradict the experience (e.g. dance music for bad sensations) or other forms of interacting with the data could help?