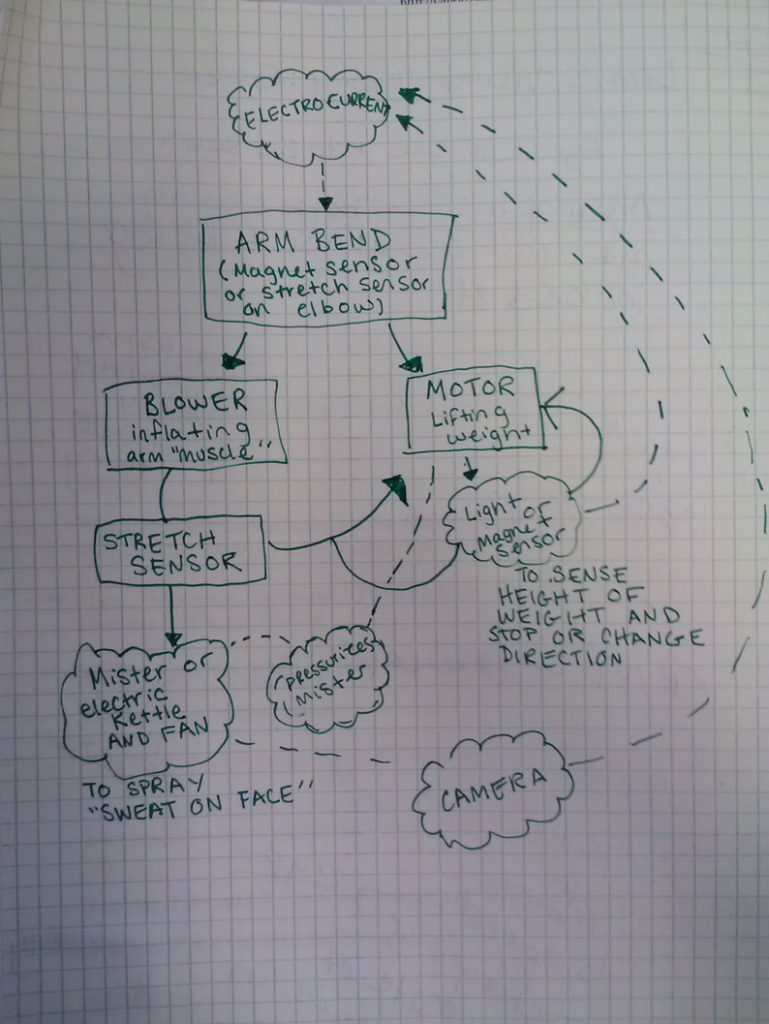

The complexities of vague simplicity

Through the implementation of simple rules, elegant, seemingly human behaviors appear to emerge in Braitenberg’s little robots.

His notion that artificially implementing natural selection on a table full of robots might yield better robots than careful design got my attention. I think that in cases of simple insect-like behavior this would be an ideal and elegant way of solving one’s problem. With the goal of survival in certain conditions and the limited resources of simple logic, it would seem best to use this randomly seeded, naturally culled robot group as a starting point. The drawback is the tremendous amount of time and resources it would take to build, and allow to die, a sufficient quantity of robots. Also, you would end up with a robot that could survive the conditions of the table indefinitely, but you would have no way of knowing how or why, nor would you be certain it could survive in similar conditions. Mother Nature had all the time in the world to get it wrong again and again. Humans are in a hurry. Humans should probably stick to engineering.
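To make the "randomly seeded, naturally culled" idea concrete, here is a minimal sketch in Python. It is not Braitenberg's procedure, just a toy under loud assumptions: the table is one-dimensional, and each robot has only two made-up traits, `speed` and `margin`. Robots that drive off the edge are removed, and the survivors are copied with small random changes. Every name here (`Genome`, `survives`, `evolve`) is hypothetical.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class Genome:
    speed: float   # distance moved per step (assumed trait)
    margin: float  # distance from the edge at which the robot turns (assumed trait)


def survives(g: Genome, width: float = 10.0, steps: int = 200) -> bool:
    """Drive one robot back and forth on a 1-D table; False if it falls off."""
    x, direction = width / 2, 1
    for _ in range(steps):
        edge_ahead = width - x if direction == 1 else x
        if edge_ahead < g.margin:
            direction = -direction  # the robot's single rule: turn around near an edge
        x += direction * g.speed
        if x < 0 or x > width:
            return False  # off the cliff
    return True


def random_genome(rng: random.Random) -> Genome:
    return Genome(rng.uniform(0.1, 3.0), rng.uniform(0.0, 3.0))


def mutate(g: Genome, rng: random.Random) -> Genome:
    """Copy a survivor with small random changes to both traits."""
    return Genome(max(0.05, g.speed + rng.gauss(0, 0.1)),
                  max(0.0, g.margin + rng.gauss(0, 0.1)))


def evolve(pop_size: int = 50, generations: int = 20, seed: int = 0):
    """Return the last generation's survivors and the survival rate per generation."""
    rng = random.Random(seed)
    population = [random_genome(rng) for _ in range(pop_size)]
    survivors, history = [], []
    for _ in range(generations):
        survivors = [g for g in population if survives(g)]
        history.append(len(survivors) / pop_size)
        if survivors:
            population = [mutate(rng.choice(survivors), rng) for _ in range(pop_size)]
        else:
            population = [random_genome(rng) for _ in range(pop_size)]  # start over
    return survivors, history


if __name__ == "__main__":
    survivors, history = evolve()
    print("survival rate per generation:", history)
```

Even in this toy, the survivors are only known to be fit for the one table they were culled on. Calling `survives(g, width=3.0)` on a survivor from the default table may or may not return `True`, and nothing in the process tells you why.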

Candidate robot teeters on edge of cliff, hoping to be fit for habitation of infinite white plane.

Image is stolen clip art; the blur is a watermark.

As far as the emotive qualities of lifelike things go, there is a lot to be gleaned from insects and other simple organisms. Their nervous systems may be low-level enough to replicate practically with current robotic technology. Persson outlines the frank notion that, since we are a long way from being able to actually recreate intelligence in robots, we may as well get good at tricking humans. I find the practicality of this stance somewhat refreshing in a world of missed expectations and lofty aspirations. We’ll get there. Relax, everybody.

This lil roach bot sure does capture the gesture of the desperate clawing of an insect. Despite the fact that it is being controlled by a human, I instantly ascribe desire and desperation to the mechanical device. Curious.