Cyborg Foundation

“I started hearing colours in my dreams”

Neil Harbisson (b. 1982 in Belfast, Northern Ireland)

CYBORG FOUNDATION | Rafel Duran Torrent from Focus Forward Films on Vimeo.

Eric Singer (b. 19.. in ..)

Godfried-Willem Raes (b. 1952 in Gent, Belgium)

Ajay Kapur (b. 19.. in ..)

This robot, created by Festo, listens to a piece of music and breaks each note down into pitch, duration, and intensity. It then feeds that information into various algorithms derived from Conway’s “Game of Life” and creates a new composition, while the machines listen to one another, producing an improvised performance. Conway’s “Game of Life,” put simply, is a 2D environment where cells (pixels) react to neighboring cells based on rules.

They are:

Any live cell with fewer than two live neighbours dies, as if caused by under-population.

Any live cell with two or three live neighbours lives on to the next generation.

Any live cell with more than three live neighbours dies, as if by overcrowding.

Any dead cell with exactly three live neighbours becomes a live cell, as if by reproduction.

Under these rules, patterns evolve from generation to generation; the game was created in an attempt to simulate life.
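The four rules above fit in a few lines of code. Here is a minimal Python sketch that assumes an unbounded grid stored as a set of live-cell coordinates. That representation is my choice for illustration and is not taken from Festo.

```python
from collections import Counter


def step(live):
    """Advance one generation. `live` is a set of (row, col) tuples for the
    live cells on an unbounded grid."""
    # Count live neighbours for every cell adjacent to at least one live cell.
    counts = Counter(
        (r + dr, c + dc)
        for r, c in live
        for dr in (-1, 0, 1)
        for dc in (-1, 0, 1)
        if (dr, dc) != (0, 0)
    )
    # Survival: a live cell with 2 or 3 neighbours. Birth: a dead cell with exactly 3.
    # Every other cell (under- or over-populated live cells, other dead cells) is dead.
    return {cell for cell, n in counts.items() if n == 3 or (n == 2 and cell in live)}


# A "blinker": three cells in a row flip between horizontal and vertical.
blinker = {(1, 0), (1, 1), (1, 2)}
print(sorted(step(blinker)))           # [(0, 1), (1, 1), (2, 1)]
print(step(step(blinker)) == blinker)  # True
```

Live cells with no live neighbours never appear in `counts`, so they die off automatically. This matches the under-population rule.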

This robot essentially mimics how composers take a musical motif and evolve it over the life of the piece. The robot uses the sensory information from the music played to it as the initial condition, or motif, and lets the algorithm change it. Since Western music is highly mathematical, robots are naturals at it. In terms of Wiener’s example of the music box and the kitten, I would say this robot is closer to the kitten, the human/animal side. Like the music box, this robot performs in accordance with a pattern, but unlike the music box, that pattern is directly affected by its past.
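The post doesn’t document how the heard motif becomes the initial condition, so what follows is a purely hypothetical Python sketch. Each note becomes one live cell, with its position in the motif as the column and its MIDI pitch as the row. The grid then evolves under Conway’s rules, and each generation is read back as a sequence of chords. Duration and intensity are ignored, and the names `motif_to_cells` and `cells_to_motif` are my own inventions.

```python
from collections import Counter


# Hypothetical mapping, for illustration only: note index -> column, MIDI pitch -> row.
def motif_to_cells(pitches):
    return {(pitch, i) for i, pitch in enumerate(pitches)}


def cells_to_motif(cells):
    """Read a generation back as music: one chord (sorted pitches) per column."""
    columns = {}
    for pitch, col in cells:
        columns.setdefault(col, []).append(pitch)
    return [sorted(columns[col]) for col in sorted(columns)]


def step(live):
    """One Game of Life generation on an unbounded grid of (row, col) cells."""
    counts = Counter((r + dr, c + dc) for r, c in live
                     for dr in (-1, 0, 1) for dc in (-1, 0, 1) if dr or dc)
    return {cell for cell, n in counts.items() if n == 3 or (n == 2 and cell in live)}


motif = [60, 60, 60]  # C4 three times: a hypothetical "heard" motif
generation = motif_to_cells(motif)
for _ in range(2):
    generation = step(generation)
    print(cells_to_motif(generation))
# [[59, 60, 61]]       (the repeated note collapses into a cluster chord)
# [[60], [60], [60]]   (and then returns to the original motif)
```

This toy motif happens to be a blinker, so it oscillates forever. Richer motifs produce less predictable variations, which is closer to the "evolving motif" idea.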

[pardon my screenshot bootleg, sound is pretty bad… go to the link!]

“First ‘chatbot’ conversation ends in argument”

www.bbc.co.uk/news/technology-14843549

This is an interesting example of robot interaction. Two chatbots, having learned their chat behavior over time (1997 to 2011!) from previous conversations with human “users,” are made to chat with each other. This BBC video probably highlights what we might consider the “human-interest” element of the story, such as the bots’ discussion of “god” and “unicorns” as well as their so-called “argumentative” sides, supposedly picked up from users. With these highlights as examples, it does seem fairly convincing proof that learning from human behavior… makes you sort of human-like! This type of “artificial” learning or evolution is really interesting, as it reflects back what we choose to teach the robots we are using: we really can see that these chatbots have had to live most of their lives on the defensive. I would like to see unedited footage of the interaction; I am sure some of their conversation is a lot more boring. I noticed that the conversation tends towards confusion or miscommunication, almost exemplifying the point about entropy that Norbert Wiener makes (pp. 20–27): that the information carried by a message can be seen as “the negative of its entropy” (as well as the negative logarithm of its probability). And yet, just as it seems the conversation might spiral into utter nonsense (and maybe it does, who knows, this might be some clever editing), the robots seem to pick up the pieces and realize what the other is saying, sometimes resulting in some pretty uncanny conversational harmony about some pretty human-feeling topics. Again, if we saw more of this chat that didn’t become part of a news story, I wonder whether it would slip more frequently into moments of entropic confusion.
(I think those moments of entropy can tell us as much about the bots’ system of learning as their moments of success. As Heidegger / Graham Harman might say, we only notice things when they’re not working… though I kinda like Lil Wayne’s version from “We Be Steady Mobbin”: “If it ain’t broke, don’t break it.”)
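The "negative logarithm of its probability" idea can be illustrated with a small Python sketch. Wiener describes information as negative entropy, while Shannon’s H measures information with the opposite sign. To avoid that sign confusion, this sketch sticks to the part both agree on: the information of an outcome is −log₂ p. It then averages that quantity over a message’s own symbol frequencies. The function names and the choice of bits as units are my assumptions. The measure only looks at symbol frequencies, not meaning, so it can’t tell a chatbot’s nonsense from its harmony.

```python
import math
from collections import Counter


def surprisal_bits(p):
    """Information (in bits) of an outcome with probability p: -log2(p)."""
    return -math.log2(p)


def average_bits_per_symbol(message):
    """Mean surprisal of the symbols in `message`, using their own frequencies
    (Shannon's H = -sum p * log2 p)."""
    counts = Counter(message)
    total = len(message)
    return sum((n / total) * surprisal_bits(n / total) for n in counts.values())


print(surprisal_bits(0.5))              # 1.0  (a fair coin flip)
print(surprisal_bits(1 / 8))            # 3.0  (a rarer outcome carries more information)
print(average_bits_per_symbol("aaaa"))  # 0.0  (perfectly predictable)
print(average_bits_per_symbol("abab"))  # 1.0
```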

If we view chatbots as an analogue to the types of outside-world-sensing robots we are trying to build, only with words as both their input and output, this seems to show that they really are capable of the type of complex feedback-controlled learning that Wiener suggests (p. 24) and that Alan Turing was gearing up for. This experiment is not unlike the really amazingly funny conversation in the Hofstadter reading between “Parry” (Colby), the paranoid robot, and “Doctor” (Weizenbaum), the nondirective-therapy psychiatrist robot (p. 595). So, actually, the BBC’s claim that this was the “first chatbot conversation” isn’t quite right…

Nonetheless, perhaps an experiment worth trying again on our own time?

My Little Piece of Privacy is a robotic art piece in which a small curtain is maneuvered across a window so that it blocks only the people outside who are looking in. Cameras with body tracking detect people, and a motor drives a belt that carries the curtain. I believe the strength of this piece is that security and privacy are things that humans and robots mutually understand. Computer systems are designed from the ground up to be secure against attempts to steal data or hijack processes, and security intrusions are one of the biggest threats computers face. The robot helping the human keep his privacy demonstrates a level of relatability and understanding the robot must have for the human.
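The piece’s actual tracking and motor code isn’t described, so this is a hypothetical Python control loop under my own assumptions. The camera is assumed to report the horizontal positions of people outside, in centimetres along the window. The curtain’s centre moves toward their average position, is clamped so the whole curtain stays inside the frame, and travels at most `MAX_STEP` per tick. All names and constants are made up.

```python
# Hypothetical parameters, not taken from the piece's documentation.
WINDOW_WIDTH = 200.0   # cm of belt travel across the window
CURTAIN_WIDTH = 40.0   # cm
MAX_STEP = 5.0         # cm the belt motor can move per control tick


def target_position(viewer_xs, current):
    """Centre the curtain on the average viewer position; stay put if nobody is seen."""
    if not viewer_xs:
        return current
    centre = sum(viewer_xs) / len(viewer_xs)
    half = CURTAIN_WIDTH / 2
    # Keep the whole curtain inside the window frame.
    return min(max(centre, half), WINDOW_WIDTH - half)


def control_tick(viewer_xs, current):
    """Move the curtain centre toward the target, limited by the motor's speed."""
    error = target_position(viewer_xs, current) - current
    return current + max(-MAX_STEP, min(MAX_STEP, error))


position = 100.0
for frame in [[150.0], [150.0], [150.0], [], [10.0]]:
    position = control_tick(frame, position)
    print(position)  # 105.0, 110.0, 115.0, 115.0, 110.0
```

Averaging is the simplest choice, but it also exposes a weakness. If two viewers stand at opposite ends of the window, the small curtain parks between them and blocks neither.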

However, the problem seems a little forced; as one commenter put it, “But why you don’t use the large one??” I wish the person inside were actually more exposed and dependent on the curtain for security. The actual result of a moving curtain is that passersby interacted with it more playfully, probably decreasing the level of security inside. I think there is a lot of potential for robotic art where robots try to defend their privacy from viewers.

In general, the dryness of this text left me frustrated with the notions of utility and purposefulness. Was the article useful? Yes, I guess. Does it hold that robots should be as useful as this text? Yes, perhaps even more so. So, as its antithesis, I came up with my own criteria for a robot that might un-service us. I was hoping to dig up an example of something really silly but well made: without purpose, but suggestive of or referential to something interesting, critically or philosophically. And the last criterion: aesthetically pleasing? (Or at least perhaps visually referential to something outside robotics?) I was pretty excited by the predator slugbot (p. 49) and the ecobot that converts dead flies to electrical energy (p. 53), both cited in the text. But damn, they look awful. And they suggest future improvement to humanity! Yikes, that sounds useful. So I’m not sure I’ve found any artist-made useless robots that really strike the note I’m looking for.

Instead of following the assignment exactly, per se, let me drag you through the mud that was my search tonight:

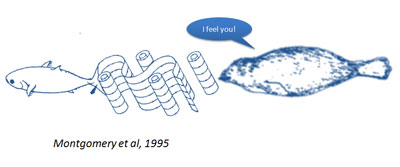

1) Non-aesthetic, utilitarian end of the spectrum: Seal Whisker Sensors

web.mit.edu/towtank/www/sealWhiskers.html

Oooo, sensor development! Obviously this is more on the straight science side, using a biomimetic approach to robot design, and at this stage it is purely utilitarian, meant to let AUVs feel wakes…. Not unrelated to the Bristol Robotics Lab Scratchbot (p. 55). But, hey, maybe it gives you underwater camera ideas, doesn’t it, Garrett Brown?

But, NOT WHAT I’M LOOKING FOR.

4) Douglas Repetto’s “Nearly Human” project

music.columbia.edu/~douglas/portfolio/nearly_human/

“Nearly Human (one billionth of a human brain) is a deeply flawed physical metaphor for a human brain.

Like many brain (and other biological) metaphors, it is much too simple and mostly wrong. But it’s also an attempt at being a little bit right in ways that are non-typical for popular representations of brains.”

This seems pretty far on the “Art” side of the spectrum, and arguably might not be a robot, because I am not so sure there are any sensors in that mess. However, I think this project deals with some of the things discussed in the article as subject matter in an interesting way, since as you will see in Repetto’s notes, he is thinking about the role of metaphor in human-intelligence research, as well as the absurdity of the idea of even coming close to replicating the human brain.

Okay, weird. Cool. BUT NOT WHAT I’M LOOKING FOR.

1) Bartending robot

“Silly?” Check minus (not quite silly ENOUGH). Well made? Check. Without purpose? Uncheck, check, uncheck, check, whatever. If we debate this, we may be debating whether alcohol truly serves a purpose. This ambiguity makes it all the more interesting. So, is it referential / suggestive of something interesting, critically speaking? Yeah, sure. An alcohol-serving machine / “companion” references the complexities of the questionable utility of recreational drugs, etc., and could be said, as you purchase your drink from it, to reiterate the numbing virtual structures of internet-era capitalism, eliminating the job of a grungy bearded or breasted bartender who would otherwise stand in its place (whoa! but I think I’m giving it too much credit)… After all, who better to question what the model might be for the perfect human companion than the bartender?

However, do I find this bartender aesthetically interesting or pleasing? Rehhh. No. It falls into all the tropes of robot servitude and the aesthetic of plasticized human features that I find fatiguing. The bowtie even throws it back to the butler-bots of science fiction, the analogue slave days of robotics! This stuff is amusing, but not what I’m looking for. The aesthetic and style of interaction seem to harken back to the text’s reference to conversational bots (in the humanoid robot companion section of the Robotic Futures chapter), which is written with a level of utility in mind that seems to pull the whole idea back into the realm of medicine or assembly-line production. Maybe I don’t want a good lookin’ or a nice talkin’ bartender?

So, nice bartender? NOT WHAT I’M LOOKING FOR.

ALTHOUGH, this might be closer: www.gizmag.com/inebriator-robot-bartender/23974/

2) (Not unrelated to above): Robotic Chefs

www.huffingtonpost.com/2012/06/18/robot-chefs_n_1601634.html

But equally problematic, in my humble opinion (from the point of view of “as art project,” perhaps)….

NOT WHAT I’M LOOKING FOR.

Okay, mission failed. But there’s some okay reverse-inspiration here.

Reading about robotic gardeners reminded me of my Uncle Rodger. He farms over 80,000 acres out in Kansas and purchases new equipment every few years. Last summer he and I had a conversation about his process, and he mentioned various robotic equipment, including this combine harvester. The machine uses GPS and various sensors to guide the combine along the planted rows, turn around, and realign itself to harvest in the opposite direction. An employee still rides along to monitor the machine’s behavior. Rodger said that by employing these robots they are able to harvest more of the crop that would otherwise be missed through human error. After poking around the web, I found this post on the Arduino forum where a DIY’er modified his combine to behave the same way using relatively cheap components.
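Below is a hypothetical Python sketch of the two pieces that the description implies. It assumes a flat rectangular field with straight rows and GPS fixes that have already been converted to local metres. The first function builds a back-and-forth (boustrophedon) list of row waypoints. The second computes the signed cross-track error that a steering controller would try to drive to zero. Real guidance systems are far more involved, and the function names and numbers here are my own.

```python
import math


def serpentine_waypoints(num_rows, swath_width, row_length):
    """Waypoints (x, y) in metres covering parallel rows, reversing direction
    at each headland turn (a boustrophedon pattern)."""
    path = []
    for row in range(num_rows):
        x = row * swath_width
        start, end = (0.0, row_length) if row % 2 == 0 else (row_length, 0.0)
        path.append((x, start))  # align at the start of this row
        path.append((x, end))    # harvest to the far end, then turn into the next row
    return path


def cross_track_error(pos, a, b):
    """Signed lateral distance (m) of position `pos` from the line a -> b;
    positive means the machine is left of its intended path."""
    (px, py), (ax, ay), (bx, by) = pos, a, b
    dx, dy = bx - ax, by - ay
    # 2D cross product of (b - a) and (pos - a), normalised by the segment length.
    return (dx * (py - ay) - dy * (px - ax)) / math.hypot(dx, dy)


print(serpentine_waypoints(3, 9.0, 400.0))
# [(0.0, 0.0), (0.0, 400.0), (9.0, 400.0), (9.0, 0.0), (18.0, 0.0), (18.0, 400.0)]
print(cross_track_error((-1.0, 100.0), (0.0, 0.0), (0.0, 400.0)))  # 1.0 (1 m left)
```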

Sony AIBO—The symbol of the future

Electric Shock Wind Reprieve

A low-voltage electric shock is delivered to my arm.

When I blow on the wind sensor, I am granted a temporary reprieve from the pain. The break lasts twice as long as I blow.
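The rule is simple enough to sketch as a controller. This hypothetical Python version assumes two things: the wind sensor is read once per control tick, and the break starts when I start blowing. Under that reading, the break lasts twice the blow in total, so the shock stays off for one more blow-length after I stop. `shock_schedule` and the tick model are my own, not the piece’s actual code.

```python
def shock_schedule(blowing, factor=2):
    """Given wind-sensor samples (True while blowing, one per control tick),
    return True for each tick on which the shock is delivered.

    Assumption: the break starts when blowing starts and lasts `factor` times
    as long as the blow, so after the blow ends the shock stays off for
    (factor - 1) x the blow's length."""
    schedule = []
    blow_ticks = 0     # length of the current blow, in ticks
    reprieve_left = 0  # ticks of break still owed after the blow
    for is_blowing in blowing:
        if is_blowing:
            blow_ticks += 1
            schedule.append(False)  # no shock while blowing
            continue
        if blow_ticks:
            # The blow just ended: the rest of the break starts now.
            reprieve_left = (factor - 1) * blow_ticks
            blow_ticks = 0
        if reprieve_left:
            reprieve_left -= 1
            schedule.append(False)
        else:
            schedule.append(True)
    return schedule


samples = [False, True, True, True, False, False, False, False, False]
print(shock_schedule(samples))
# [True, False, False, False, False, False, False, True, True]
```

Counting in whole ticks rather than accumulating floating-point seconds keeps the break length exact. In the example, a 3-tick blow produces a 6-tick break.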