Final Project Documentation: The Wobble Box

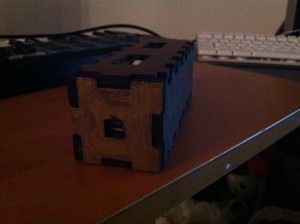

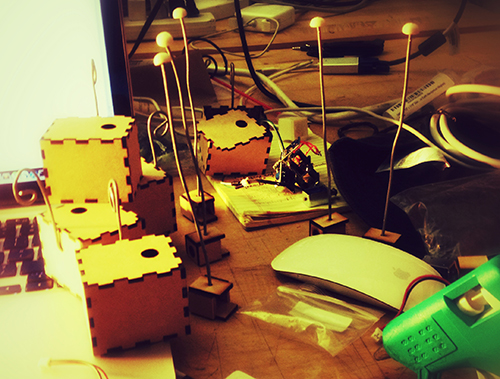

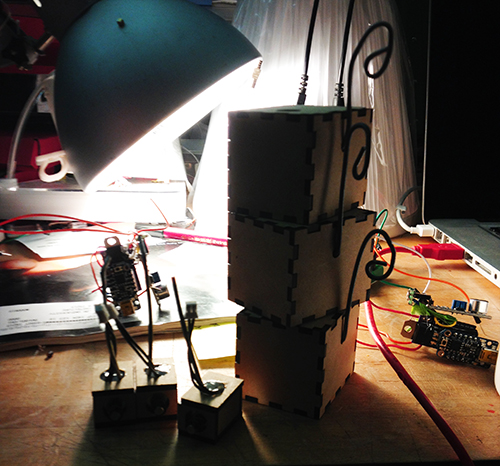

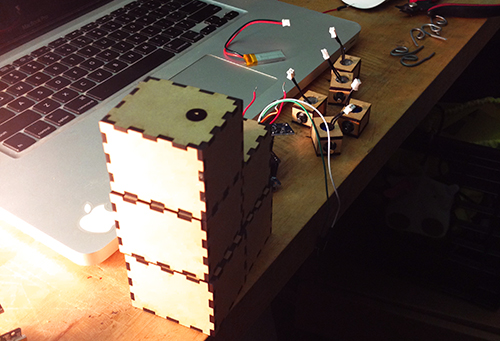

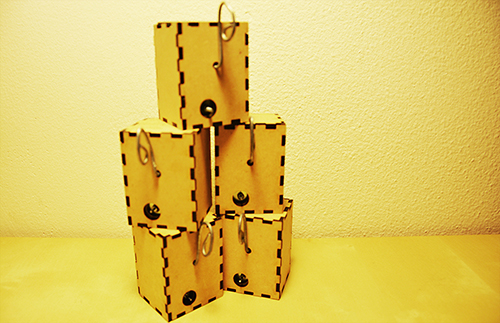

After taking time to consider exactly what I hope to accomplish with my device, the aim of my project has somewhat shifted. Rather than attempting to build a sound controller that includes everything I like about current models plus a few improvements, I've decided to focus only on the improvements I'd like to see. Specifically, I've been striving for simplicity and interesting sensors, so I've been devoting my time to making small devices with very specific purposes. My first success is what I'm calling the "Wobble Box."

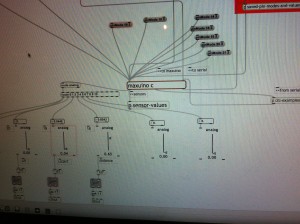

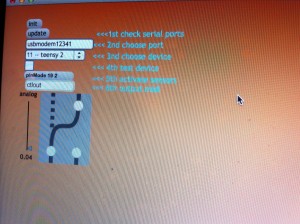

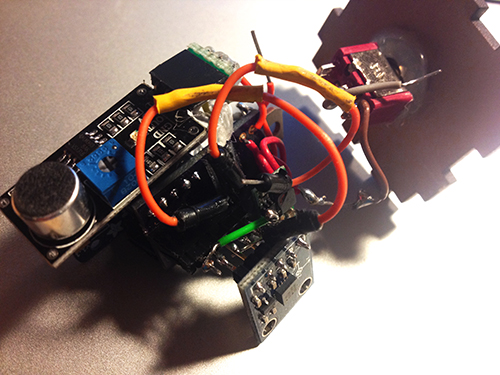

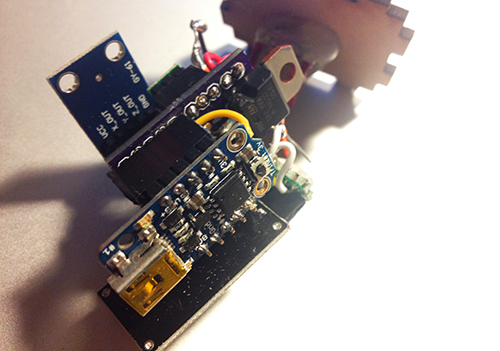

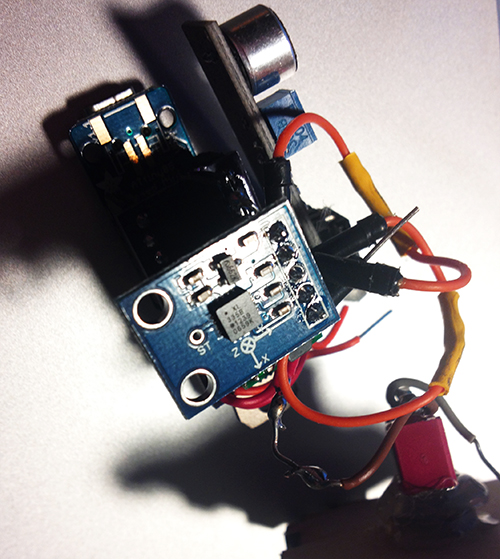

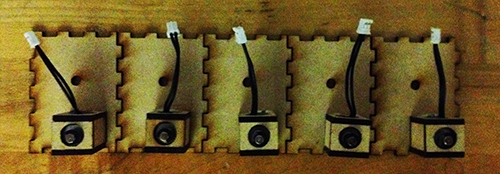

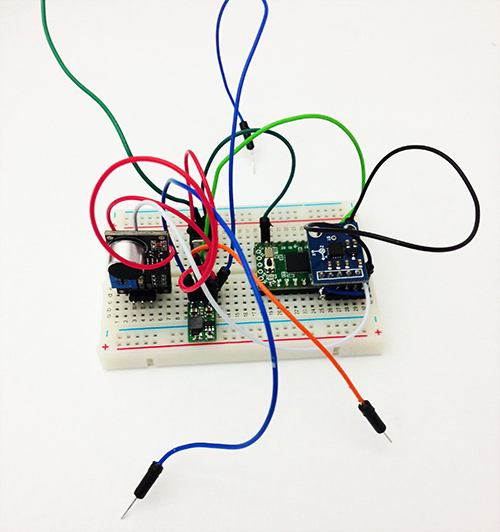

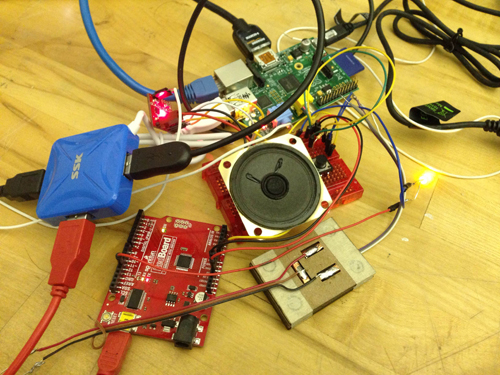

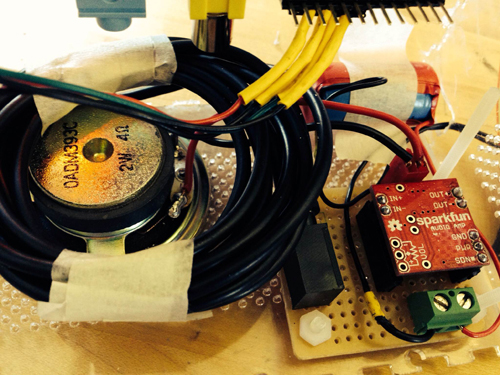

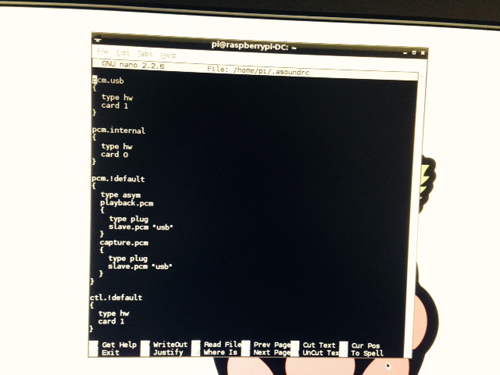

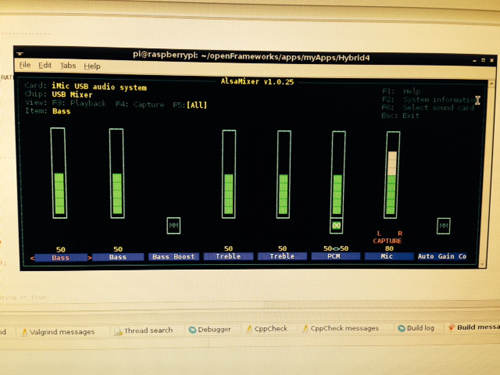

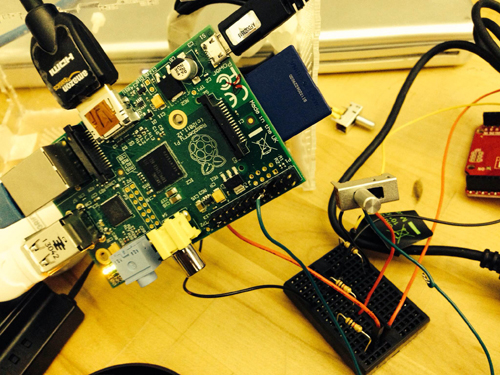

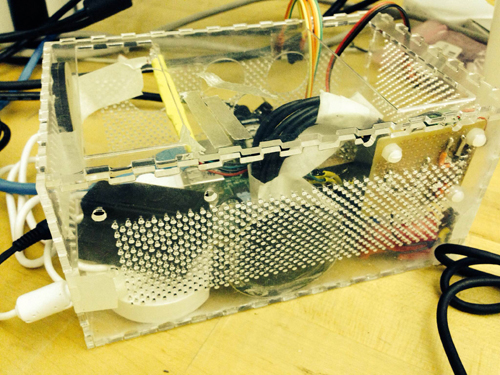

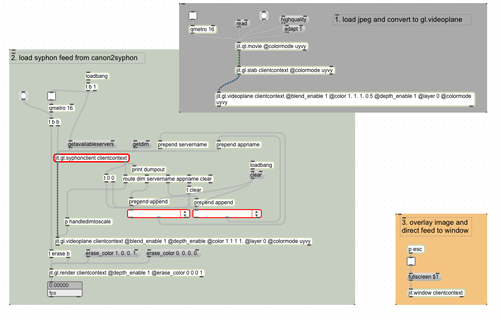

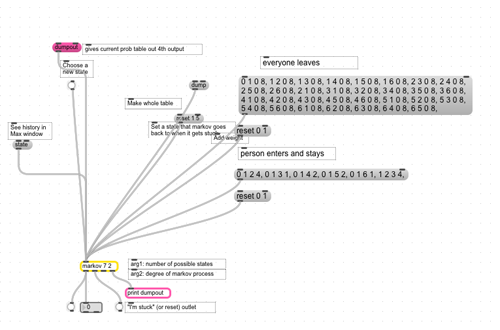

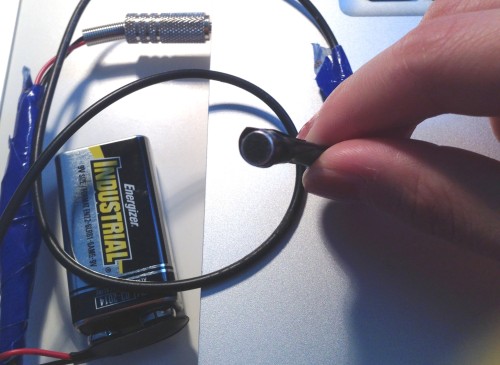

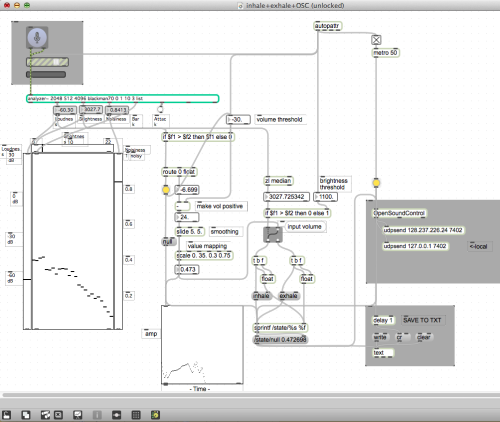

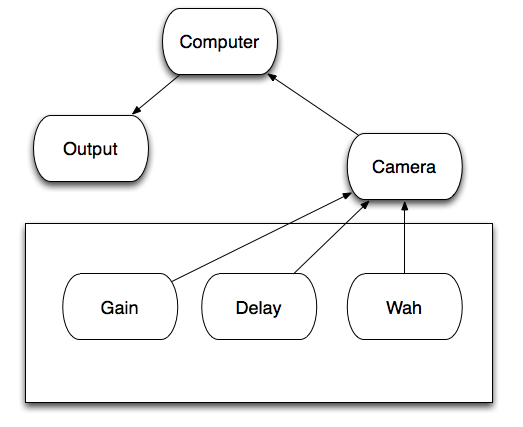

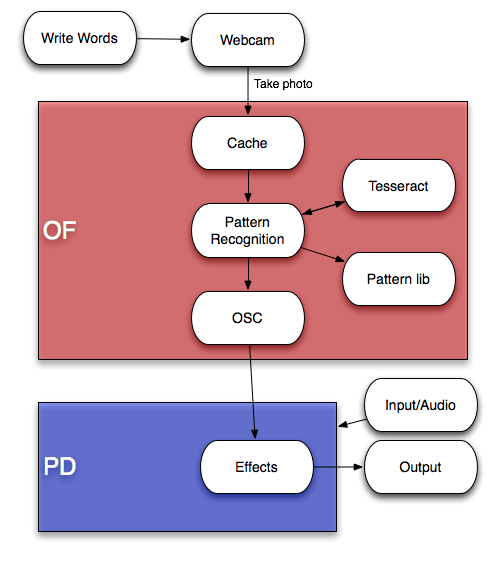

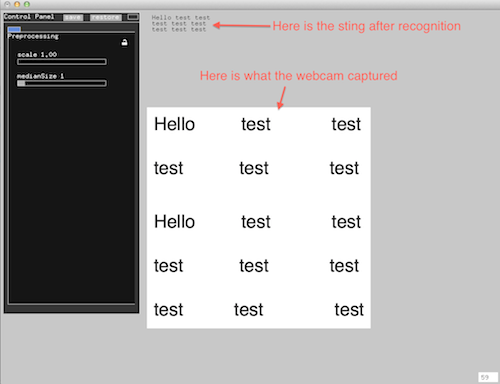

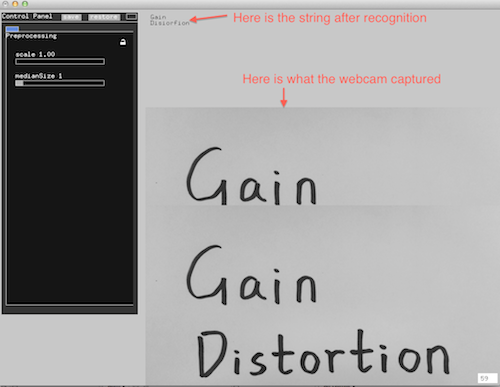

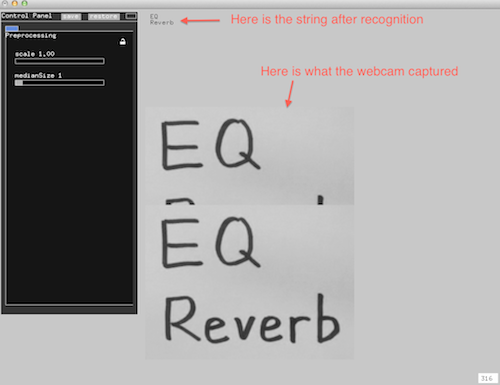

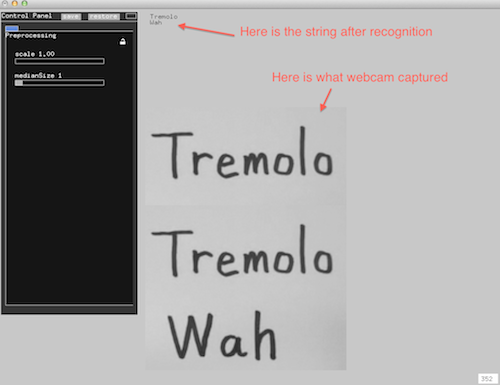

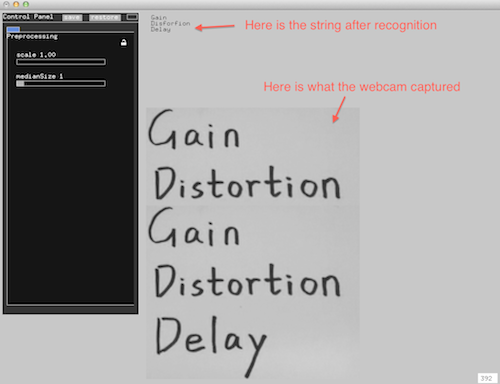

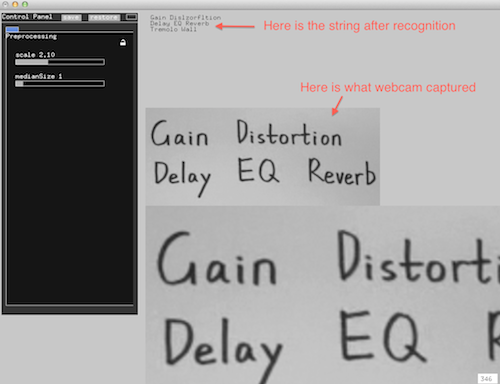

Simply stated, the box contains two distance sensors, each plugged into a Teensy 2.0. I receive the sensor data in Max, where I scale it and "normalize" it (smoothing out sudden peaks) so that it is better suited to modulating sound. While Max is running, I can open Ableton Live and link parameters in Max to audio effects in Ableton. Using this technique, I mapped the measured distance from the box to the cutoff of a low-pass filter, along with a slight frequency modulation and a resonance shift. These are the core elements of the "wobble bass" sound associated with dubstep and its Jamaican dub roots, hence the name of the box. Although I chose this particular sound, the sensor data can be used to control any parameter within Ableton.
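The Max patch itself isn't reproduced here. As a rough illustration of the same signal chain, the Python sketch below scales a raw reading, removes peaks, and maps the result to a filter cutoff. Several parts are assumptions rather than what the patch actually does. Raw readings are assumed to span 0 to 1023 (the Teensy 2.0's 10-bit ADC). "Removing peaks" is modeled as a 3-sample median followed by an exponential moving average. The cutoff range of 80 Hz to 8 kHz is arbitrary. All function and class names are hypothetical.

```python
from collections import deque

# Assumption: raw readings come from a 10-bit ADC (0..1023).
# The usable range of a real distance sensor is usually narrower.
RAW_MIN, RAW_MAX = 0, 1023


def scale(value, in_lo, in_hi, out_lo=0.0, out_hi=1.0):
    """Linearly map value from [in_lo, in_hi] to [out_lo, out_hi], clamping
    out-of-range input (similar in spirit to Max's [scale] plus [clip])."""
    value = min(max(value, in_lo), in_hi)
    return out_lo + (value - in_lo) * (out_hi - out_lo) / (in_hi - in_lo)


class PeakSmoother:
    """Hypothetical smoother: a short median rejects single-sample spikes,
    then a one-pole low-pass (exponential moving average) evens out jitter."""

    def __init__(self, alpha=0.2, window=3):
        self.alpha = alpha                  # 0 < alpha <= 1; smaller = smoother
        self.recent = deque(maxlen=window)  # last few raw samples
        self.state = None                   # current smoothed value

    def process(self, x):
        self.recent.append(x)
        median = sorted(self.recent)[len(self.recent) // 2]
        if self.state is None:
            self.state = median             # initialize on the first sample
        else:
            self.state += self.alpha * (median - self.state)
        return self.state


def to_cutoff_hz(norm, lo_hz=80.0, hi_hz=8000.0):
    """Map a 0..1 control value to a cutoff frequency exponentially, so equal
    hand movements produce roughly equal changes in perceived brightness."""
    return lo_hz * (hi_hz / lo_hz) ** norm


if __name__ == "__main__":
    smoother = PeakSmoother()
    # Made-up readings with one spike (1023) to show peak rejection.
    for raw in [500, 510, 1023, 505, 498]:
        norm = scale(smoother.process(raw), RAW_MIN, RAW_MAX)
        print(f"raw={raw:4d}  cutoff={to_cutoff_hz(norm):7.1f} Hz")
```

The exponential cutoff mapping is a common choice because pitch and filter brightness are perceived roughly logarithmically. Inside Ableton, mapped parameters are set through their own ranges, so the exact curve there may differ.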

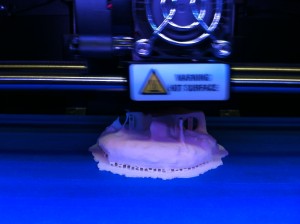

Designing this box was a challenge for me because of my limited experience with hardware: soldering the distance sensors to the board was difficult, to say the least, and I had never operated a laser cutter before. However, the project forced me to learn a lot about the basics of electronics, and I now feel confident that I can design a better prototype that is smaller, sleeker, and more compatible with similar devices. I've already begun working on a similar box with joysticks and a third with light sensors. I plan to make the boxes connect to one another with magnets.

For my presentation in class, I will be using my device along with a standard Akai APC40. The Wobble Box is neither capable of nor meant for producing its own melodies; instead, it changes the effects on existing melodies. Because of this, I will perform with it by launching clips live, which makes a second piece of hardware necessary.